Precision and Accuracy in 2025: A Strategic Guide to Pharmaceutical Method Validation and Verification

Abstract

This article provides researchers, scientists, and drug development professionals with a comprehensive guide to navigating the evolving landscape of analytical method validation and verification in 2025. It covers foundational principles grounded in ICH Q2(R2) and Q14, explores modern methodological applications including Quality-by-Design (QbD) and AI-driven analytics, addresses common troubleshooting and optimization challenges, and offers a clear comparative analysis of validation versus verification strategies. The content synthesizes current regulatory trends, technological innovations, and practical frameworks to ensure robust, compliant, and efficient analytical practices in pharmaceutical development.

The Pillars of Reliability: Understanding Accuracy, Precision, and Regulatory Foundations

In pharmaceutical development and quality control, analytical methods must be reliable, reproducible, and fit for their intended purpose. Method validation provides assurance that analytical procedures consistently produce reliable results, with Accuracy, Precision, Specificity, and Linearity representing fundamental performance characteristics required by global regulatory standards [1] [2]. These parameters form the foundation for ensuring the credibility of scientific data supporting drug identity, strength, quality, purity, and potency [3].

The International Council for Harmonisation (ICH) guideline Q2(R1) establishes a comprehensive framework for validating analytical procedures, with the United States Pharmacopeia (USP), Japanese Pharmacopoeia (JP), and European Union (EU) guidelines maintaining close alignment with these core principles [2]. This guide objectively compares these essential parameters based on established regulatory requirements and experimental approaches.

Core Parameter Definitions and Regulatory Context

Regulatory Framework Comparison

While harmonized in principle, minor differences exist in how regulatory bodies approach method validation:

Table 1: Regulatory Terminology Comparison

| Parameter | ICH Q2(R1) | USP <1225> | JP Chapter 17 | EU Ph. Eur. 5.15 |
|---|---|---|---|---|
| Intermediate Precision | Intermediate Precision | Ruggedness | Intermediate Precision | Intermediate Precision |
| System Suitability | Implied | Emphasized | Strong emphasis | Emphasized |
| Robustness | Included | Included | Strong emphasis | Strong emphasis |

All guidelines emphasize science and risk-based approaches, allowing flexibility based on method intent [2]. USP particularly focuses on compendial methods and system suitability testing, while JP and EU place greater emphasis on robustness [2].

Parameter Definitions

Accuracy: The closeness of agreement between test results obtained by the method and the true value or an accepted reference value [1] [4]. It measures systematic error and is typically reported as percentage recovery [4].

Precision: The closeness of agreement between a series of measurements obtained from multiple sampling of the same homogeneous sample under prescribed conditions [1] [5]. It measures random error and is considered at three levels: repeatability, intermediate precision, and reproducibility [4].

Specificity: The ability to assess unequivocally the analyte in the presence of components that may be expected to be present, such as impurities, degradation products, and matrix components [1] [2]. For identification tests, specificity ensures identity; for assay and impurity tests, it ensures separation from interfering substances [1].

Linearity: The ability of the method to obtain test results directly proportional to the concentration of analyte in the sample within a given range [1] [4]. It demonstrates the method's capacity to elicit proportional responses to concentration changes [5].

Experimental Protocols and Assessment Methodologies

Accuracy Assessment Protocols

Drug Substance Assays:

- Use analyte of known purity (reference material or well-characterized impurity)

- Compare experimental concentration against theoretical concentration

- Alternative approach: Compare results with orthogonal procedure using distinct measurement approach [4]

Drug Product Assays:

- Spike known quantity of analyte into synthetic matrix containing all components except analyte

- If difficult to recreate all components, spike known amounts into actual test sample

- Compare results between unspiked and spiked samples [4]

Impurity Quantitation:

- Spike samples with known amounts of impurities

- If impurity references unavailable, compare with independent procedure or use drug substance response factor [4]

Experimental Design: Assess using minimum 3 concentration points covering reportable range with 3 replicates each. Perform complete analytical procedure for every replicate [4].
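For the drug-product spiking approach, recovery is usually expressed as the percentage of the added analyte that is found after subtracting the unspiked result. The minimal sketch below assumes all concentrations share one unit and that the unspiked value is the mean of the unspiked replicates; the function names and example values are hypothetical.

```python
from statistics import mean


def spike_recovery(spiked: float, unspiked: float, added: float) -> float:
    """Percent of the added analyte that is found: (spiked - unspiked) / added * 100."""
    if added <= 0:
        raise ValueError("the added (spiked) amount must be positive")
    return (spiked - unspiked) / added * 100.0


def mean_recovery(spiked_results, unspiked: float, added: float) -> float:
    """Mean percent recovery over the replicate spiked preparations at one level."""
    return mean(spike_recovery(s, unspiked, added) for s in spiked_results)


if __name__ == "__main__":
    # Hypothetical level: 0.500 mg/mL found unspiked, 0.250 mg/mL added, 3 replicates.
    replicates = (0.748, 0.751, 0.746)
    print([round(spike_recovery(s, 0.500, 0.250), 1) for s in replicates])
    print(round(mean_recovery(replicates, 0.500, 0.250), 1))
```

Repeating this at each of the three concentration levels gives the 3 × 3 recovery dataset described above.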

Case Study - Accuracy in Practice: An HPLC investigation of cranberry anthocyanins demonstrated how accuracy depends on calibration approach. When cyanidin-3-glucoside served as calibrant for all compounds, accuracy varied significantly compared to using individual anthocyanin reference standards, highlighting the importance of appropriate reference materials [3].

Precision Evaluation Methods

Repeatability:

- Minimum 9 determinations (3 concentrations × 3 replicates) covering reportable range, OR

- Minimum 6 determinations at 100% test concentration [4]

- Express as standard deviation, relative standard deviation, and confidence interval [4]
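The three repeatability statistics can be computed with the Python standard library. This sketch assumes a two-sided 95% confidence interval for the mean based on Student's t; the small t table is hardcoded to avoid a SciPy dependency, and the six results are hypothetical.

```python
import math
import statistics

# Two-sided 95% Student t critical values (t_0.975), keyed by degrees of freedom.
T_975 = {2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571, 6: 2.447,
         7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def repeatability_summary(results):
    """Return mean, SD, %RSD and a two-sided 95% CI for the mean of replicate results."""
    n = len(results)
    df = n - 1
    if df not in T_975:
        raise ValueError("this t table covers 3 to 11 determinations")
    mean = statistics.mean(results)
    sd = statistics.stdev(results)  # sample SD with n - 1 denominator
    rsd = 100.0 * sd / mean
    half_width = T_975[df] * sd / math.sqrt(n)
    return mean, sd, rsd, (mean - half_width, mean + half_width)


if __name__ == "__main__":
    # Hypothetical: six determinations at 100% of the test concentration (% label claim).
    assay = [99.8, 100.2, 100.1, 99.6, 100.4, 99.9]
    m, sd, rsd, (low, high) = repeatability_summary(assay)
    print(f"mean={m:.2f}  SD={sd:.3f}  RSD={rsd:.2f}%  95% CI=({low:.2f}, {high:.2f})")
```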

Intermediate Precision:

- Assess variations within same laboratory

- Include different days, different analysts, different equipment, different environmental conditions [4]

- Objective: Verify method provides consistent results after development phase [4]

Reproducibility:

- Demonstrate precision between different laboratories

- Critical for pharmacopoeial method standardization [4]

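Precision Acceptance Criteria: The Horwitz equation provides empirical guidance for acceptable precision: RSD_R (%) = 2C^(-0.1505), commonly rounded to 2C^(-0.15), where C is the analyte concentration expressed as a mass fraction (100% = 1, 1% = 0.01) and RSD_R is the predicted between-laboratory (reproducibility) RSD [5]. Because repeatability is tighter than reproducibility, modified Horwitz values are used for repeatability; the values below equal 0.67 × RSD_R: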

Table 2: Horwitz-Based Precision Standards

| Analyte Percentage | Acceptable %RSD (Repeatability) |
|---|---|
| 100.00% | 1.34% |
| 50.00% | 1.49% |
| 20.00% | 1.71% |
| 10.00% | 1.90% |
| 5.00% | 2.10% |
| 1.00% | 2.68% |
| 0.25% | 3.30% |
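The tabulated values can be regenerated from the exact form of the Horwitz function, 2^(1 - 0.5 log10 C), which equals 2C^(-0.1505). In this sketch the 0.67 repeatability factor is an assumption inferred from the table (it reproduces every row to two decimals) rather than a value stated by the cited source.

```python
import math


def horwitz_rsd_reproducibility(mass_fraction: float) -> float:
    """Horwitz-predicted between-laboratory %RSD: 2^(1 - 0.5*log10 C), about 2*C^(-0.1505)."""
    if not 0 < mass_fraction <= 1:
        raise ValueError("C must be a mass fraction in (0, 1]")
    return 2 ** (1 - 0.5 * math.log10(mass_fraction))


def horwitz_rsd_repeatability(mass_fraction: float, factor: float = 0.67) -> float:
    """Modified Horwitz repeatability %RSD; the 0.67 factor is assumed from the table values."""
    return factor * horwitz_rsd_reproducibility(mass_fraction)


if __name__ == "__main__":
    # Rebuild the repeatability column of the Horwitz table.
    for percent in (100, 50, 20, 10, 5, 1, 0.25):
        print(f"{percent:>6}%  {horwitz_rsd_repeatability(percent / 100):.2f}%")
```

Using the rounded exponent -0.15 instead would give 1.89% rather than 1.90% at the 10% level, which is why the unrounded form is used here.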

Specificity Demonstration

For chromatographic methods, specificity is established by:

- Examining chromatographic blanks in expected analyte retention time window [5]

- Demonstrating baseline separation between analyte and potential interferents

- For assay methods, demonstrating no interference from placebo or matrix components

- For impurity methods, resolving all potential impurities and degradation products [1]
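Baseline separation is commonly quantified as the chromatographic resolution (Rs) between adjacent peaks. As an illustration only, this sketch uses the tangent-baseline width form Rs = 2(t_R2 - t_R1)/(w1 + w2) and treats Rs ≥ 1.5 as baseline separation for well-shaped Gaussian peaks; pharmacopoeias also define a half-height-width variant, and the method's own system suitability criterion should take precedence. The function names and peak data are hypothetical.

```python
def resolution(t_r1: float, t_r2: float, w1: float, w2: float) -> float:
    """Resolution between two adjacent peaks from tangent baseline widths.

    Rs = 2 * (t_r2 - t_r1) / (w1 + w2); retention times and widths share one time unit.
    """
    if w1 <= 0 or w2 <= 0:
        raise ValueError("peak widths must be positive")
    return 2.0 * abs(t_r2 - t_r1) / (w1 + w2)


def baseline_separated(rs: float, limit: float = 1.5) -> bool:
    """Treat Rs >= limit (1.5 by default, an assumed criterion) as baseline separation."""
    return rs >= limit


if __name__ == "__main__":
    # Hypothetical analyte/impurity pair (minutes).
    rs = resolution(6.20, 6.95, 0.40, 0.44)
    print(f"Rs = {rs:.2f}, baseline separated: {baseline_separated(rs)}")
```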

Linearity and Range Determination

Linearity Experimental Protocol:

- Prepare series of standard solutions at minimum 5 concentration levels

- Distribute concentrations appropriately across working range (typically 50-150% of expected range) [5]

- Analyze each concentration minimum twice [5]

- Plot concentration versus response

- Calculate regression line by method of least squares [4]

Range Establishment: The specific range depends on method application:

Table 3: Method-Specific Range Requirements

| Test Method | Acceptable Range |
|---|---|
| Drug Substance/Product Assay | 80-120% test concentration |
| Content Uniformity | 70-130% test concentration |
| Dissolution Testing | ±20% over specification range |
| Impurity Assays | Reporting level to 120% specification |

Acceptance Criteria: For linear regression, an R² > 0.95 is typically required, although non-linear methods may be validated with different statistical approaches [4].
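The least-squares fit and the R² check can be sketched in plain Python. The five-level, duplicate-injection data are hypothetical, and the 0.95 threshold is the value quoted above, passed in as a parameter so that a stricter in-house limit can be substituted.

```python
def least_squares_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept; returns (slope, intercept, r_squared)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need matched x and y with at least 3 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1.0 - ss_res / ss_tot


def linearity_acceptable(r_squared: float, min_r_squared: float = 0.95) -> bool:
    """Compare R^2 with the acceptance limit (0.95 as quoted in the text)."""
    return r_squared > min_r_squared


if __name__ == "__main__":
    # Hypothetical: 5 levels (50-150% of target), each injected twice.
    conc = [50, 50, 75, 75, 100, 100, 125, 125, 150, 150]
    area = [5020, 4985, 7510, 7490, 10030, 9970, 12480, 12530, 15010, 14990]
    slope, intercept, r2 = least_squares_line(conc, area)
    print(f"slope={slope:.3f} intercept={intercept:.1f} R^2={r2:.5f} pass={linearity_acceptable(r2)}")
```

R² alone does not prove linearity; a residual plot across the range is a useful companion check.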

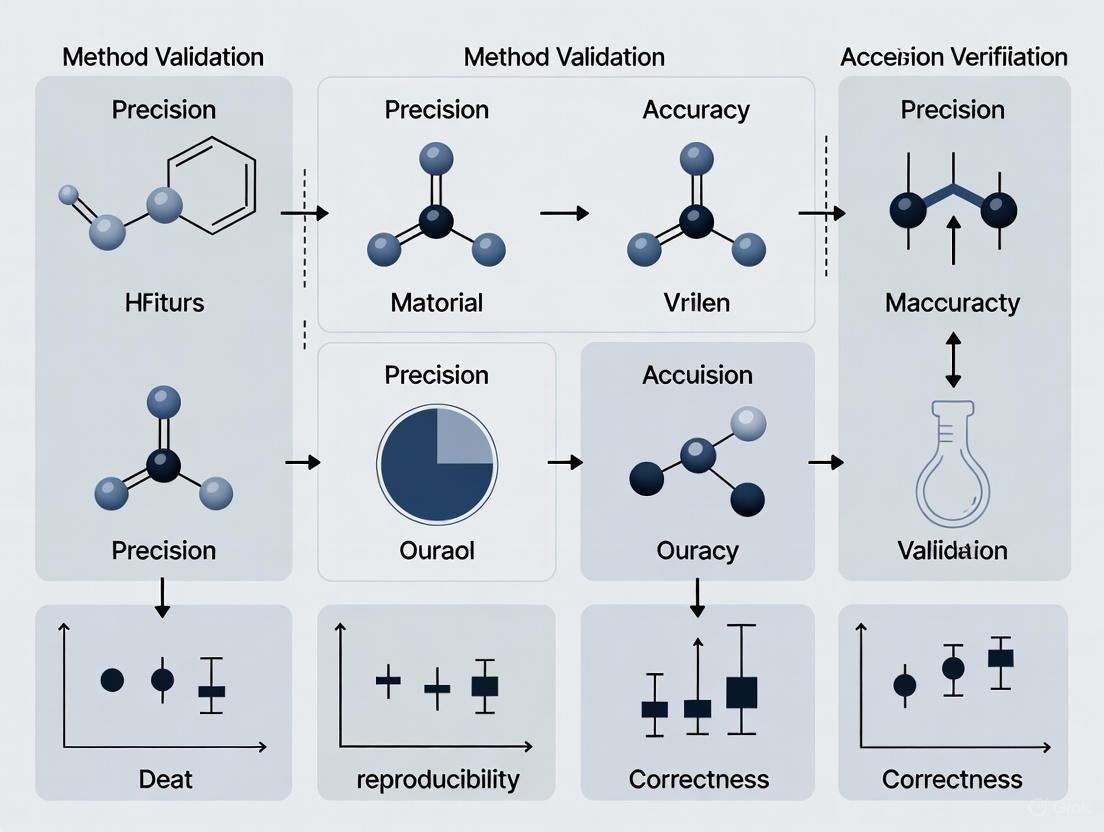

Method Validation Workflow and Relationships

The validation process follows a logical sequence where parameters build upon each other to establish method reliability.

Figure 1: Method Validation Parameter Workflow

Accuracy Assessment Methodologies

Different approaches to accuracy determination provide complementary verification of method reliability.

Figure 2: Accuracy Verification Approaches

Essential Research Reagent Solutions

Table 4: Key Reagents and Materials for Validation Studies

| Reagent/Material | Function in Validation | Critical Specifications |
|---|---|---|
| Reference Standards | Quantitation, identification, calibration curve establishment | Certified purity, stability, proper storage conditions [3] |
| Matrix Components | Placebo/excipient mixtures for specificity and accuracy | Represents final product composition, free of target analyte |
| Spiking Solutions | Known concentration solutions for recovery studies | Precise concentration, stability-matched with analyte [4] |
| Chromatographic Blanks | Specificity demonstration, interference assessment | Contains all components except target analyte [5] |
| System Suitability Standards | Verify chromatographic system performance | Resolution, tailing factor, precision, theoretical plates |

Detection and Quantitation Limit Determination

For methods requiring sensitivity assessment, Detection Limit (DL) and Quantitation Limit (QL) represent critical parameters:

Signal-to-Noise Approach:

- DL: Signal-to-noise ratio of 3:1 [4] [5]

- QL: Signal-to-noise ratio of 10:1 [4] [5]

- Suitable for methods with baseline noise measurement capability [4]

Standard Deviation and Slope Method:

- DL = (3.3 × σ)/S [5]

- QL = (10 × σ)/S [4] [5]

- Where σ = standard deviation of response, S = slope of calibration curve [5]
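In the standard-deviation-and-slope approach, σ is often taken as the residual standard deviation of a calibration line prepared near the expected limit (the SD of blank responses or of y-intercepts are alternatives). The minimal sketch below assumes the residual-SD option; the function name and low-level calibration data are hypothetical.

```python
import math


def calibration_dl_ql(conc, response):
    """DL = 3.3*sigma/S and QL = 10*sigma/S, with sigma taken as the residual SD of an OLS line.

    Returns (dl, ql, slope, sigma); dl and ql are in the concentration units of `conc`.
    """
    n = len(conc)
    if n != len(response) or n < 3:
        raise ValueError("need matched data with at least 3 points")
    mx = sum(conc) / n
    my = sum(response) / n
    sxx = sum((x - mx) ** 2 for x in conc)
    slope = sum((x - mx) * (y - my) for x, y in zip(conc, response)) / sxx
    if slope <= 0:
        raise ValueError("expected a positive calibration slope")
    intercept = my - slope * mx
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(conc, response))
    sigma = math.sqrt(ss_res / (n - 2))  # residual SD with n - 2 degrees of freedom
    return 3.3 * sigma / slope, 10.0 * sigma / slope, slope, sigma


if __name__ == "__main__":
    # Hypothetical low-level calibration: concentration (ug/mL) vs peak area.
    dl, ql, slope, sigma = calibration_dl_ql([1, 2, 3, 4], [10.5, 19.5, 30.5, 39.5])
    print(f"S={slope:.2f}  sigma={sigma:.3f}  DL={dl:.3f} ug/mL  QL={ql:.3f} ug/mL")
```

Limits estimated this way are usually confirmed by analysing samples prepared at the calculated DL and QL.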

Visual Evaluation: Non-instrumental methods may use visual determination of minimal detectable or quantifiable levels [1].

Table 5: Core Parameter Acceptance Criteria Summary

| Parameter | Experimental Requirement | Typical Acceptance Criteria | Regulatory Reference |
|---|---|---|---|
| Accuracy | 3 concentrations, 3 replicates each | Recovery: 98-102% (drug substance), spiked recovery within specified range | ICH Q2(R1), USP <1225> [2] [4] |
| Precision (Repeatability) | 6 determinations at 100% or 9 across range | RSD ≤ 1-3% depending on concentration | Horwitz Equation [5] |
| Specificity | Chromatographic blanks, resolution mixtures | No interference at retention time, baseline separation | ICH Q2(R1) [1] [2] |
| Linearity | Minimum 5 concentration points | R² > 0.95 (or appropriate non-linear fit) | ICH Q2(R1) [4] |
| Range | Derived from linearity studies | Method-dependent (see Table 3) | ICH Q2(R1) [4] |

The core parameters of Accuracy, Precision, Specificity, and Linearity provide the foundational framework for demonstrating analytical method validity. While implementation details may vary slightly across regulatory jurisdictions, the fundamental requirements remain consistent globally. Through systematic experimental protocols and appropriate acceptance criteria, these parameters collectively ensure that analytical methods generate reliable, reproducible data suitable for regulatory decision-making in pharmaceutical development and quality control.

The International Council for Harmonisation (ICH) Q2(R2) and ICH Q14 guidelines represent a significant evolution in the regulatory approach to analytical procedures. Effective from 14 June 2024, these documents form a cohesive framework that transitions from a one-time validation exercise to an integrated Analytical Procedure Lifecycle Management (APLM) approach [6]. ICH Q2(R2) focuses on the "validation of analytical procedures," providing a framework for demonstrating that a method is fit for its intended purpose, while ICH Q14 outlines science and risk-based approaches for "analytical procedure development" [7] [8].

This harmonized guidance, adopted by both the U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA), aims to improve regulatory communication and facilitate more efficient, science-based approval and post-approval change management [6]. For researchers and drug development professionals, understanding this integrated framework is crucial for developing robust, reliable methods that ensure product quality throughout their lifecycle.

Detailed Comparison: ICH Q2(R1) vs. ICH Q2(R2)

The revision from Q2(R1) to Q2(R2) introduces key changes to accommodate modern analytical technologies and align with the principles of ICH Q14.

Key Terminology and Structural Updates

The revised guideline incorporates several important conceptual shifts to address both chemical and biological analytical procedures [6]:

- Linearity is replaced by "Reportable Range" and "Working Range": The "Working Range" consists of "Suitability of calibration model" and "Lower Range Limit verification". This change better accommodates non-linear analytical procedures commonly used for biologics [6].

- Expanded Scope: ICH Q2(R2) now explicitly includes validation principles for analytical procedures using spectroscopic or spectrometry data (e.g., NIR, Raman, NMR, MS), which often require multivariate statistical analyses [6].

- Lifecycle Integration: The guideline explicitly allows for suitable data derived from development studies (per ICH Q14) to be used as part of the validation data, breaking down the traditional silos between development and validation [6].

Comparative Analysis of Validation Characteristics

The following table summarizes the core changes in validation requirements between ICH Q2(R1) and the new Q2(R2) framework:

Table 1: Comparison of Analytical Validation Requirements between ICH Q2(R1) and ICH Q2(R2)

| Validation Characteristic | ICH Q2(R1) Requirements | ICH Q2(R2) Updates | Impact on Method Validation |
|---|---|---|---|
| Linearity/Range | Defined as linearity across specified range | Replaced by "Reportable Range" & "Working Range"; includes calibration model suitability | Better accommodates non-linear methods for biologics |
| Scope of Application | Primarily chromatographic methods | Expanded to include multivariate methods (NIR, Raman, NMR, MS) | Supports modern analytical technologies |
| Development Data Utilization | Validation typically separate from development | Development data (ICH Q14) can be incorporated into validation | Reduces redundant testing; promotes knowledge-based approach |
| Platform Procedures | Not explicitly addressed | Reduced validation testing allowed for established platform methods | Increases efficiency for well-understood technologies |
| Lifecycle Approach | Implicit in quality by design (QbD) | Explicitly integrated with ICH Q14 for full procedure lifecycle | Encourages continuous improvement and knowledge management |

The Synergy between ICH Q14 and ICH Q2(R2)

ICH Q14, "Analytical Procedure Development," provides the scientific foundation that complements the validation principles in ICH Q2(R2). This guideline describes "science and risk-based approaches for developing and maintaining analytical procedures" suitable for assessing the quality of drug substances and products [8]. The enhanced approach in ICH Q14 facilitates improved communication between industry and regulators, providing a more structured framework for submitting analytical procedure development information [9].

Together, ICH Q2(R2) and ICH Q14 cover the development and validation activities used to assess product quality throughout the lifecycle of an analytical procedure, creating a seamless transition from initial development to ongoing monitoring and improvement [6]. This integrated approach is designed to support more flexible and efficient post-approval change management, potentially reducing regulatory submissions for minor changes [6].

FDA and EMA Implementation Timelines and Expectations

Both the FDA and EMA have adopted these guidelines, demonstrating global regulatory harmonization.

Adoption and Implementation Timeline

The FDA announced the availability of both ICH Q2(R2) and ICH Q14 guidelines in March 2024, confirming they were "prepared under the auspices of the International Council for Harmonisation" [7]. The EMA had previously published the new ICH Q14 as Step 5 in January 2024, with the effective date of 14 June 2024 [7]. This synchronized implementation underscores the commitment to global regulatory alignment.

Regulatory Emphasis and Focus Areas

While both agencies have adopted the same guidelines, their historical approaches to process validation provide context for their implementation focus:

- FDA's Approach: Traditionally emphasizes a three-stage model for process validation (Process Design, Process Qualification, and Continued Process Verification) with high emphasis on statistical process control [10].

- EMA's Approach: Outlined in Annex 15 of the EU GMP Guidelines, it maintains a lifecycle focus but with greater flexibility in ongoing verification approaches [10].

Despite these historical differences, the adoption of ICH Q2(R2) and Q14 represents a significant harmonization achievement. Both agencies now expect manufacturers to implement the science and risk-based approaches outlined in these guidelines, particularly for analytical procedures used in release and stability testing of commercial drug substances and products [6].

Practical Application: Experimental Protocols and Methodologies

Precision Evaluation Protocol

Precision validation remains a cornerstone of analytical method validation, with ICH Q2(R2) maintaining focus on this critical parameter. The Clinical and Laboratory Standards Institute (CLSI) EP05-A2 protocol provides a rigorous approach for determining method precision [11]:

- Experimental Design: The assessment should be performed on at least two levels (e.g., low and high concentrations), as precision can differ across the analytical range. Each level is run in duplicate, with two runs per day over 20 days, with each run separated by at least two hours [11].

- Controls and Conditions: Each run should include quality control samples (different from those used for routine instrument control) and at least ten patient samples to simulate actual operation. The order of analysis should be varied to account for potential sequence effects [11].

- Statistical Analysis: Data should be evaluated for outliers, and precision should be assessed at multiple levels:

  - Repeatability (Within-run precision): Closeness of agreement between results under identical conditions over a short time [11].

  - Intermediate Precision: Within-laboratory variations due to different days, analysts, or equipment [11].

  - Reproducibility: Precision between different laboratories, typically assessed in collaborative studies [11].

The precision is measured as standard deviation (SD) or coefficient of variation (CV%), with the total within-laboratory precision calculated using analysis of variance (ANOVA) components [11].
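The ANOVA decomposition can be illustrated with a simplified, balanced one-way design in which each group holds the replicate results from one day (or run). This is a deliberate simplification: the full EP05-A2 20 × 2 × 2 design uses a nested model that separates between-run and between-day components. The function name and data are hypothetical.

```python
import math


def precision_components(groups):
    """Repeatability, between-group and within-laboratory SD from a balanced one-way ANOVA.

    `groups` is a list of equal-length lists (e.g. one list of replicates per day).
    Returns (s_r, s_between, s_wl).
    """
    k = len(groups)
    n = len(groups[0])
    if k < 2 or n < 2 or any(len(g) != n for g in groups):
        raise ValueError("need >= 2 balanced groups with >= 2 replicates each")
    grand_mean = sum(sum(g) for g in groups) / (k * n)
    means = [sum(g) / n for g in groups]
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    ss_between = n * sum((m - grand_mean) ** 2 for m in means)
    ms_within = ss_within / (k * (n - 1))
    ms_between = ss_between / (k - 1)
    var_between = max(0.0, (ms_between - ms_within) / n)  # negative estimates set to zero
    return math.sqrt(ms_within), math.sqrt(var_between), math.sqrt(ms_within + var_between)


if __name__ == "__main__":
    # Hypothetical QC results (mmol/L): 5 days x 2 replicates.
    days = [[5.02, 5.06], [4.98, 5.01], [5.10, 5.07], [5.03, 4.99], [5.05, 5.09]]
    s_r, s_b, s_wl = precision_components(days)
    grand = sum(map(sum, days)) / 10
    print(f"CV_r={100 * s_r / grand:.2f}%  CV_WL={100 * s_wl / grand:.2f}%")
```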

Analytical Procedure Lifecycle Workflow

The integration of ICH Q14 and Q2(R2) creates a structured workflow for analytical procedures throughout their lifecycle, as illustrated in the following diagram:

Diagram 1: Analytical Procedure Lifecycle Management (APLM) Workflow

This lifecycle approach emphasizes that knowledge gained during routine monitoring and continuous improvement should feed back into procedure development, creating a knowledge management system that supports ongoing method optimization [6].

Essential Research Reagent Solutions for Method Validation

Successful implementation of ICH Q2(R2) and Q14 requires carefully selected reagents and materials. The following table outlines key solutions and their functions in analytical development and validation:

Table 2: Essential Research Reagent Solutions for Analytical Method Validation

| Reagent/Material | Function in Validation | Application Examples |
|---|---|---|
| Reference Standards | Provides accepted reference value for accuracy determination | Drug substance purity qualification; impurity quantification |
| System Suitability Solutions | Verifies chromatographic system performance before analysis | Resolution mixture for HPLC; sensitivity solution for detection limit |
| Quality Control Materials | Monitors assay performance during precision studies | Pooled patient samples; commercial quality control materials |
| Sample Preparation Reagents | Ensures consistent sample processing across validation parameters | Protein precipitation reagents; extraction solvents; derivatization agents |
| Chromatographic Mobile Phases | Maintains consistent separation conditions throughout validation | Buffer solutions; organic modifiers; ion-pairing reagents |

The integrated ICH Q2(R2) and Q14 framework represents a significant advancement in analytical science, moving the industry toward a more holistic, knowledge-driven approach to procedure development and validation. For researchers and drug development professionals, success in this new regulatory environment requires:

- Embracing the lifecycle approach to analytical procedures, from development through retirement

- Implementing science and risk-based principles in procedure development and validation

- Leveraging modern analytical technologies with appropriate validation approaches

- Maintaining comprehensive knowledge management systems to support continuous improvement

With both FDA and EMA adopting these harmonized guidelines, the pharmaceutical industry has an unprecedented opportunity to streamline global development and implement more robust, reliable analytical procedures that ultimately enhance product quality and patient safety.

In regulated research and drug development, the integrity of data is not merely a regulatory expectation but the very foundation upon which reliable scientific conclusions are built. The ALCOA+ framework provides a structured set of principles for ensuring data integrity throughout the data lifecycle. These principles are crucial for method validation, a process that confirms analytical procedures are suitable for their intended use. Without data governed by ALCOA+, the validation of a method's precision, accuracy, and reliability is fundamentally undermined [12] [13].

Originally introduced by the U.S. Food and Drug Administration (FDA) as ALCOA, the concept has been expanded to include additional criteria, forming ALCOA+ [14] [13]. This evolution reflects the growing complexity of data in the pharmaceutical industry and the need for more rigorous data governance. For researchers and scientists, adhering to ALCOA+ is not just about compliance; it is about ensuring that every data point generated during method validation and routine analysis is trustworthy, reproducible, and defensible [12] [15].

The ALCOA+ Principles: Definitions and Regulatory Context

The ALCOA+ acronym encompasses a set of nine core principles that define the attributes of high-integrity data. These principles are recognized globally by major regulatory agencies, including the FDA, the European Medicines Agency (EMA), and the World Health Organization (WHO) [13] [16]. The following table provides a detailed overview of each principle and its significance in a research and development context.

Table 1: The Core Principles of ALCOA+ and Their Application in Research

| Principle | Core Definition | Importance in Method Validation & Research |
|---|---|---|
| Attributable | Data must be traceable to the person or system that generated it, including the source, date, and time [14] [17]. | Ensures accountability for observations and actions during an analytical run, making data traceable to a specific researcher or automated system. |
| Legible | Data must be clear, readable, and permanent for the entire required retention period [14] [16]. | Prevents misinterpretation of critical values, such as sample concentrations or instrument readings, during data review and audit. |
| Contemporaneous | Data must be recorded at the time the activity is performed [14] [17]. | Ensures that observations reflect the true conditions of the experiment, minimizing the risk of errors from reconstructed or memory-based entries. |
| Original | The first or source record of data must be preserved, or a certified true copy must be available [14] [17]. | Protects the authentic record of an experiment, which is the definitive source for verification and review. |
| Accurate | Data must be correct, truthful, and free from errors, with any edits documented and justified [14] [12]. | Fundamental for establishing the precision and trueness of an analytical method during validation studies. |
| Complete | All data, including repeat tests, related metadata, and audit trails, must be present [14] [13]. | Provides the full context of the analytical process, ensuring no critical results are omitted and the dataset is robust for statistical analysis. |
| Consistent | Data should be recorded in a chronological sequence, with all changes documented and time-stamped [14] [16]. | Demonstrates a stable and controlled process over time, which is key for proving method robustness and reproducibility. |
| Enduring | Data must be recorded on durable media and preserved for the entire legally required retention period [14] [17]. | Guarantees that validation data remains available for regulatory inspection, product lifecycle management, and future scientific reference. |
| Available | Data must be readily accessible for review, audit, or inspection throughout its retention period [14] [16]. | Facilitates efficient regulatory submissions, laboratory audits, and the re-analysis of data for investigative purposes. |

The regulatory landscape for data integrity is increasingly harmonizing around these ALCOA+ principles. For instance, the FDA enforces them under CGMP regulations (e.g., 21 CFR Part 211), while the EMA's Annex 11 explicitly references them for computerized systems [13] [16]. Recent trends show that a majority of FDA warning letters cite data integrity lapses, underscoring the critical non-compliance risks associated with failing to implement ALCOA+ effectively [13].

Experimental Protocols for Verifying Data Accuracy in Analytical Methods

A core objective of method validation is to verify the accuracy of the analytical procedure—how close the measured value is to the true value. The ALCOA principle of "Accurate" data is dependent on the statistical reliability of the measurements generated by the method itself [12]. The following experimental protocol outlines a standard approach for quantifying accuracy, often studied alongside precision.

Protocol for Assessing Method Accuracy and Precision

1. Objective: To determine the accuracy and precision of an analytical method for quantifying an analyte in a specific matrix.

2. Experimental Design:

- Sample Preparation: Prepare a minimum of five samples at three different concentration levels (e.g., 80%, 100%, and 120% of the target concentration) covering the method's range [12].

- Reference Standard: Use a certified reference standard of known purity and concentration to spike the sample matrix.

- Replication: Analyze each concentration level in multiple replicates (a minimum of three, preferably more) to allow for statistical evaluation of precision.

3. Data Collection: Following ALCOA+ principles:

- Attributable & Contemporaneous: Record raw data (e.g., chromatographic peak areas) directly into a laboratory notebook or a validated electronic system (LIMS), with user and timestamp data automatically captured [17] [16].

- Original & Legible: The instrument's original data file is the source record. Any printouts or exported data must be clear and permanently linked to the original file.

- Accurate: Perform system suitability tests (SST) before the analysis to ensure the instrument is functioning correctly [12].

4. Data Analysis:

- Accuracy (Trueness): Calculate the percentage recovery for each sample using the formula Recovery (%) = (Measured Concentration / Theoretical Concentration) × 100. Report the mean recovery and standard deviation for each concentration level [12].

- Precision:

  - Repeatability: Calculate the relative standard deviation (RSD) of the replicates within the same day and by the same analyst (intra-day precision).

  - Intermediate Precision: Assess the RSD of results generated on different days, by different analysts, or using different equipment (inter-day precision) to gauge the method's robustness [12].

5. Interpretation: The method is considered accurate if the mean recovery at each concentration level falls within a pre-defined acceptance criterion (e.g., 98-102%). Precision is typically acceptable if the RSD is below a threshold, such as 2% for assay methods. The combination of these two metrics provides a comprehensive view of the method's total error [12].
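Steps 4 and 5 can be combined into a per-level evaluation. In this sketch the default limits are the example criteria from the protocol (mean recovery 98-102%, RSD ≤ 2%) and are parameters rather than fixed rules; the function name and triplicate results are hypothetical.

```python
import statistics


def evaluate_level(measured, theoretical, recovery_limits=(98.0, 102.0), max_rsd=2.0):
    """Mean % recovery, %RSD and pass/fail for one concentration level.

    Recovery (%) = measured / theoretical * 100 for each replicate.
    """
    if theoretical <= 0 or len(measured) < 2:
        raise ValueError("need a positive theoretical value and at least 2 replicates")
    recoveries = [100.0 * m / theoretical for m in measured]
    mean_recovery = statistics.mean(recoveries)
    rsd = 100.0 * statistics.stdev(measured) / statistics.mean(measured)
    low, high = recovery_limits
    passed = low <= mean_recovery <= high and rsd <= max_rsd
    return mean_recovery, rsd, passed


if __name__ == "__main__":
    # Hypothetical triplicates (mg/mL) at 80, 100 and 120% of a 1.00 mg/mL target.
    levels = {0.80: [0.792, 0.801, 0.797], 1.00: [0.995, 1.004, 1.010], 1.20: [1.188, 1.205, 1.197]}
    for theoretical, results in levels.items():
        rec, rsd, ok = evaluate_level(results, theoretical)
        print(f"{theoretical:.2f} mg/mL: recovery {rec:.1f}%, RSD {rsd:.2f}%, pass={ok}")
```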

Workflow for Accuracy and Precision Assessment

The following diagram illustrates the logical workflow for planning, executing, and analyzing an experiment to assess the accuracy and precision of an analytical method, incorporating key ALCOA+ checkpoints.

Quantitative Comparison of Data Integrity Approaches

The implementation of ALCOA+ principles can be achieved through different methodologies, primarily paper-based systems, hybrid models, and fully electronic systems. The choice of approach significantly impacts efficiency, error rates, and compliance risk. The following table compares these approaches based on key performance metrics relevant to research and development environments.

Table 2: Performance Comparison of Data Integrity Implementation Approaches

| Implementation Characteristic | Paper-Based System | Hybrid System | Fully Electronic System (with Audit Trail) |
|---|---|---|---|
| Typical Data Entry Error Rate | Higher (Prone to manual transcription errors) [18] | Moderate (Mix of manual and electronic entry) | Lower (Automated data capture from instruments) [19] |
| Efficiency of Data Retrieval | Low (Manual searching of physical archives) | Moderate (Digital search possible for electronic portions) | High (Instant search and filtering across entire dataset) [17] |
| Cost of Regulatory Compliance | High (Manual review, physical storage) | Moderate to High (Management of two systems) | Lower (Automated audit trails, centralized storage) [19] |
| Strength of Audit Trail | Weak (Relies on paper corrections; easily compromised) | Partial (Electronic actions may be logged) | Strong (Comprehensive, immutable log of all actions) [17] [16] |
| Risk of Data Falsification | Higher (Difficult to prove contemporaneity) | Moderate | Lower (Attribution and timestamps are system-enforced) [13] |
| Support for ALCOA+ Principles | Manual, prone to lapses (e.g., legibility, contemporaneity) [17] | Inconsistent (Varies between paper and electronic) | System-enforced and inherent in design [14] [16] |

The Scientist's Toolkit: Essential Reagents and Solutions for Data Integrity

Beyond procedural protocols, ensuring data integrity requires the use of specific, high-quality materials and technical solutions. These tools form the foundation for generating reliable and accurate data in the first place.

Table 3: Essential Research Reagents and Solutions for Data Integrity

| Item | Function in Data Integrity |
|---|---|
| Certified Reference Standards | Provides a traceable and accurate benchmark for calibrating instruments and quantifying analytes, directly supporting the Accurate and Attributable principles [12]. |
| System Suitability Test (SST) Solutions | A standardized mixture used to verify that the entire analytical system (instrument, reagents, columns) is performing adequately before sample analysis, ensuring data Accuracy [12]. |
| Stable Isotope-Labeled Internal Standards | Added to samples to correct for analyte loss during preparation or matrix effects in mass spectrometry, improving the Accuracy and precision of quantitative results. |
| Validated Blank Matrices | Used in bioanalytical method development to prepare calibration standards, ensuring that the measurement is specific to the analyte and free from interference, supporting data Accuracy. |
| Audit Trail Software | A critical technical solution that automatically records all user actions, data creations, modifications, and deletions in a secure, time-stamped log, enforcing Attributable, Contemporaneous, Consistent, and Complete principles [17] [16]. |
| Electronic Lab Notebook (ELN) / LIMS | A centralized software system for managing samples, associated data, and workflows. It structures data entry, controls user access, and maintains records, inherently supporting all ALCOA+ principles [14] [20]. |
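The audit-trail row above describes a secure, time-stamped log of every creation, modification, and deletion. The Python sketch below shows one common way to make such a log tamper-evident: hash chaining, in which each entry stores the SHA-256 hash of the entry before it. This design is an assumption for illustration. It does not describe how any particular ELN or LIMS works, and the `AuditTrail` class and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Hypothetical append-only audit trail; each entry hashes the one before it."""

    def __init__(self):
        self.entries = []

    def record(self, user, action, record_id, old_value=None, new_value=None):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {
            "user": user,                                          # Attributable
            "timestamp": datetime.now(timezone.utc).isoformat(),   # Contemporaneous
            "action": action,
            "record_id": record_id,
            "old_value": old_value,   # the original value is kept, never overwritten
            "new_value": new_value,
            "prev_hash": prev_hash,
        }
        payload = json.dumps(entry, sort_keys=True).encode()
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """True if the chain is intact.

        Detects edited, reordered, or removed entries, but not truncation of the tail.
        """
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode()
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("analyst_01", "create", "SAMPLE-001", new_value="98.7")
trail.record("analyst_01", "modify", "SAMPLE-001", old_value="98.7", new_value="99.1")
print("Chain intact:", trail.verify())
```

Each hash covers the previous entry's hash, so silently editing an earlier record breaks every later link. A hash chain by itself does not reveal deletion of the most recent entries, so in practice it would be combined with access controls and secure storage.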

The implementation of the ALCOA+ framework is a fundamental prerequisite for any robust method validation and precision-accuracy verification research. It transforms data from mere numbers into reliable, evidence-based knowledge. As the industry moves towards greater digitalization and faces new challenges with complex data types, including those generated by AI, the principles of ALCOA+ remain the constant foundation [19] [13]. For researchers and drug development professionals, embedding these principles into the fabric of daily laboratory practice is not just a regulatory necessity—it is a core component of scientific excellence and a critical factor in bringing safe and effective therapies to patients.

Integrating ICH Q9 Quality Risk Management (QRM) principles into analytical method lifecycle management represents a fundamental shift from reactive compliance to a proactive, science-based framework for ensuring data integrity and product quality. The ICH Q9 guideline, overseen by the International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use, provides a systematic process for assessing, controlling, communicating, and reviewing risks that could compromise pharmaceutical product quality [21]. When applied to analytical method lifecycle management—spanning development, validation, routine use, and eventual retirement—this risk-based approach enables organizations to focus resources on method parameters and procedural elements most critical to patient safety and product efficacy.

A robust QRM process, as defined by ICH Q9, is built upon four core components: Risk Assessment, Risk Control, Risk Communication, and Risk Review [22] [21]. This cyclical process ensures that method performance is continuously monitored and maintained throughout its operational lifespan. The recent Q9(R1) revision further strengthens implementation by emphasizing that the degree of formality in risk management should be commensurate with the level of risk, providing crucial guidance for prioritizing efforts based on potential impact to product quality and patient safety [22].

Core Principles of ICH Q9

The Four Components of Quality Risk Management

The ICH Q9 framework establishes a structured, cyclical process for managing quality risks throughout the analytical method lifecycle. This systematic approach ensures consistent application across all stages of method development, validation, and implementation.

Risk Assessment: This foundational component involves a systematic process of risk identification, analysis, and evaluation. It begins with identifying potential hazards or "what could go wrong" with an analytical method, followed by analysis of the potential causes and consequences, and concludes with evaluation against risk criteria [21]. For analytical methods, this typically involves structured tools like Failure Mode and Effects Analysis (FMEA), which assesses potential failure modes based on their severity, probability of occurrence, and detectability [22] [21]. The output enables prioritization of high-risk areas requiring immediate control measures.

Risk Control: This component focuses on implementing measures to reduce risks to acceptable levels. Risk control includes risk reduction actions (such as method optimization, additional system suitability tests, or enhanced training) and formal risk acceptance for residual risks that fall within predefined acceptable limits [21]. The Q9(R1) revision specifically emphasizes that risk acceptance decisions must be clearly documented, particularly when they could impact product availability or patient safety [22].

Risk Communication: This ensures transparent sharing of risk management activities, outcomes, and decisions across all relevant stakeholders [21]. For analytical methods, this includes documenting and communicating method limitations, residual risks, and special handling requirements to laboratory analysts, quality assurance, and regulatory affairs personnel. Effective communication ensures all parties understand their roles in maintaining method control.

Risk Review: This final component establishes that risk management is an ongoing process rather than a one-time activity [21]. Regular reviews of method performance metrics—including out-of-specification (OOS) rates, system suitability failure trends, and investigation reports—ensure that risk controls remain effective throughout the method's lifecycle [22]. The review process should be triggered by significant events such as method-related deviations, changes in instrumentation, or transfer to new laboratories.

Key Q9(R1) Revisions and Their Impact

The 2023 revision to ICH Q9 (Q9(R1)) introduced important clarifications that directly affect how risk management should be applied to analytical methods, with particular emphasis on managing subjectivity and determining appropriate formality.

Managing Subjectivity: Q9(R1) stresses the need to minimize inherent subjectivity in risk scoring, which directly impacts the reliability of Risk Priority Number (RPN) calculations (Severity × Probability × Detectability) [22]. For analytical methods, this means implementing clearly defined, auditable rating criteria for all elements of the RPN. Regulatory inspectors will challenge QRM outcomes where scoring scales are not consistently applied across different methods or departments. Compliance requires establishing cross-functional QRM teams with representatives from Quality, Process Engineering, Regulatory, and Production to pool expertise and mitigate individual bias [22].
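One way to make the scoring auditable is to write down each level of each scale and have software reject any score that is not defined on it. The sketch below assumes hypothetical 1-5 scales and illustrative failure modes for an HPLC assay. The scale wording, scores, and names are not taken from [22]. It follows the usual FMEA convention that a higher detectability score means the failure is harder to detect.

```python
from dataclasses import dataclass

# Hypothetical 1-5 scales. Each level has a written definition, so different
# assessors and departments score the same failure mode the same way.
SCALES = {
    "severity": {1: "no effect on result", 2: "minor bias, well inside spec",
                 3: "bias approaching spec limit", 4: "possible OOS result",
                 5: "possible wrong batch disposition"},
    "probability": {1: "never observed", 2: "less than once per year",
                    3: "several times per year", 4: "monthly", 5: "most runs"},
    "detectability": {1: "always caught by SST", 2: "usually caught by SST",
                      3: "caught at data review", 4: "rarely caught",
                      5: "not detectable before release"},
}


@dataclass
class FailureMode:
    description: str
    severity: int
    probability: int
    detectability: int  # higher = harder to detect

    def __post_init__(self):
        # Reject any score that is not defined on the documented scale.
        for name, scale in SCALES.items():
            if getattr(self, name) not in scale:
                raise ValueError(f"{name} score {getattr(self, name)} is not on the defined scale")

    @property
    def rpn(self) -> int:
        # Risk Priority Number = Severity x Probability x Detectability
        return self.severity * self.probability * self.detectability


def prioritize(modes):
    """Return failure modes sorted from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


modes = [
    FailureMode("Mobile phase pH drift", 4, 3, 2),
    FailureMode("Column temperature excursion", 3, 2, 2),
    FailureMode("Integration error on shoulder peak", 4, 2, 4),
]
for m in prioritize(modes):
    print(f"{m.rpn:3d}  {m.description}")
```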

Degree of Formality: The revision clarifies that the level of effort, formality, and documentation must correspond to the level of risk to quality and patient safety [22]. This principle is particularly relevant for analytical methods, where the same exhaustive FMEA process should not be applied equally to a compendial method verification and a novel bioanalytical method development. Organizations must define and document a Quality Risk Management Plan that clearly outlines triggers for Formal QRM (requiring cross-functional teams, established tools like FMEA, and standalone reports) versus Informal QRM (using simple techniques with rationale documented within the Quality System) [22].

Table: Determining Level of Formality in Method Risk Management

| Factor | High (Formal QRM Required) | Low (Informal QRM Acceptable) |
|---|---|---|
| Uncertainty | Lack of knowledge about hazards (e.g., new analytical technology, complex OOS) | Good knowledge; easily answer 'what can go wrong' (e.g., minor method adjustment) |
| Importance | High degree of importance relative to product quality (e.g., release method for potency) | Low degree of importance (e.g., in-process test not impacting product quality) |
| Complexity | Highly complex method (e.g., cell-based bioassay, novel technology platform) | Low complexity, well-understood method (e.g., pH measurement) |
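The formality table can be written as an explicit triage rule, so the decision and its rationale are recorded the same way every time. The sketch below assumes a deliberately conservative policy in which any factor rated high triggers formal QRM. That rule and the function name are illustrative assumptions. The specific triggers belong in each organization's own QRM plan.

```python
from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


def required_formality(uncertainty: Level, importance: Level, complexity: Level) -> str:
    """Hypothetical triage rule: any factor rated HIGH triggers formal QRM.

    The drivers are named in the result so the rationale is documented with the decision.
    """
    factors = {"uncertainty": uncertainty, "importance": importance, "complexity": complexity}
    drivers = [name for name, level in factors.items() if level is Level.HIGH]
    if drivers:
        return ("Formal QRM (cross-functional team, FMEA, standalone report); drivers: "
                + ", ".join(drivers))
    return "Informal QRM (simple technique, rationale documented in the Quality System)"


# Examples from the table: a cell-based potency bioassay vs. a pH measurement
print(required_formality(Level.HIGH, Level.HIGH, Level.HIGH))
print(required_formality(Level.LOW, Level.LOW, Level.LOW))
```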

Implementing ICH Q9 in Method Lifecycle Management

Method Development and Validation Phase

The integration of QRM begins during method development, where risk assessment tools systematically identify and control variables that could impact method performance. A science-based approach to establishing method acceptance criteria ensures they are fit-for-purpose and proportional to the risk associated with the method's intended use.

Traditional approaches to evaluating method goodness relied heavily on % coefficient of variation (CV) and % recovery, which have significant limitations as they evaluate method performance independent of product specification limits [23]. A modern, risk-based approach instead evaluates method error relative to the product specification tolerance or design margin, answering the critical question: "How much of the specification tolerance is consumed by the analytical method?" [23] This directly links method performance to its impact on out-of-specification (OOS) rates and batch release decisions.

Table: Risk-Based Acceptance Criteria for Analytical Methods

| Validation Parameter | Traditional Approach | Risk-Based Approach (as % Tolerance) | Bioassay Recommendation |
|---|---|---|---|
| Repeatability | % CV relative to mean | ≤ 25% of tolerance | ≤ 50% of tolerance |
| Bias/Accuracy | % Recovery relative to theoretical | ≤ 10% of tolerance | ≤ 10% of tolerance |
| Specificity | Visual demonstration | ≤ 5-10% of tolerance (Excellent-Acceptable) | Similar small % of tolerance |
| LOD | Signal-to-noise ratio | ≤ 5-10% of tolerance (Excellent-Acceptable) | Case-by-case evaluation |
| LOQ | Signal-to-noise ratio | ≤ 15-20% of tolerance (Excellent-Acceptable) | Case-by-case evaluation |

The relationship between method performance and product quality can be mathematically represented to quantify risk. The reportable result from any analytical method is influenced by both the product itself and the method variability [23]:

- Reportable Result = Test sample true value + Method Bias + Method Repeatability [23]

This equation demonstrates that method error directly impacts the ability to make correct batch release decisions. When method variability consumes an excessive portion of the specification range, the probability of OOS results increases significantly, even for products that are truly within specifications [23].
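The reportable-result equation can be turned into an estimate of how often a batch that truly conforms would still be reported OOS. The sketch below assumes that method bias is a fixed offset and that repeatability error is normally distributed with mean zero. The specification, true value, bias, and standard deviations are illustrative numbers, not data from [23].

```python
from statistics import NormalDist


def prob_oos(true_value, bias, sd, lsl, usl):
    """Probability that a single reportable result falls outside [LSL, USL].

    Reportable result = true value + method bias + repeatability error,
    with the repeatability error assumed to follow Normal(0, sd).
    """
    result = NormalDist(mu=true_value + bias, sigma=sd)
    return result.cdf(lsl) + (1.0 - result.cdf(usl))


# Illustrative assay specification of 95.0-105.0 % label claim; the batch is truly at 103.0 %
lsl, usl = 95.0, 105.0
for sd in (0.5, 1.0, 2.0):
    p = prob_oos(true_value=103.0, bias=0.5, sd=sd, lsl=lsl, usl=usl)
    pct_tol = 5.15 * sd / (usl - lsl) * 100  # repeatability as % of tolerance
    print(f"SD={sd:.1f}  repeatability={pct_tol:5.1f}% of tolerance  P(OOS)={p:.4f}")
```

The batch's true value never changes in this loop. The estimated OOS probability still rises as method repeatability consumes a larger share of the tolerance.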

Experimental Protocol: Tolerance-Based Method Validation

A robust, risk-based method validation protocol should incorporate the following elements to ensure method performance is evaluated relative to its impact on product quality decisions:

Sample Preparation: Prepare a minimum of 6 replicates at 100% of target concentration using actual drug product matrix. Include additional samples at 80% and 120% of target to evaluate performance across the specification range [23].

Reference Standard Qualification: Use qualified reference standards with certificates of analysis documenting purity and storage requirements. Include system suitability tests aligned with method capabilities.

Data Collection and Analysis:

- Calculate method repeatability as standard deviation of the 6 replicates

- Compute Repeatability % Tolerance = (Standard Deviation × 5.15)/(USL - LSL) × 100 for two-sided specifications, where 5.15 standard deviations span approximately 99% of a normal distribution [23]

- Compare against the acceptance criterion of ≤ 25% of tolerance (or ≤ 50% for bioassays)

- For accuracy, calculate Bias % of Tolerance = Bias/Tolerance × 100, where Tolerance = USL - LSL, with an acceptance criterion of ≤ 10%
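The calculations in the list above can be scripted so that every validation run applies them the same way. The sketch below uses hypothetical replicate data and a 95.0-105.0% specification. Two choices in it are assumptions about how bias is defined: it takes the absolute value of the bias, and it measures bias against a known reference value (for example, a spiked or certified level).

```python
from statistics import mean, stdev


def repeatability_pct_tolerance(replicates, lsl, usl):
    """(SD x 5.15) / (USL - LSL) x 100, using the sample standard deviation."""
    return stdev(replicates) * 5.15 / (usl - lsl) * 100


def bias_pct_tolerance(replicates, reference_value, lsl, usl):
    """|mean - reference| / (USL - LSL) x 100."""
    return abs(mean(replicates) - reference_value) / (usl - lsl) * 100


def evaluate(replicates, reference_value, lsl, usl, bioassay=False):
    """Apply the tolerance-based criteria: repeatability <= 25 % (50 % for bioassays), bias <= 10 %."""
    rep_limit = 50.0 if bioassay else 25.0
    rep = repeatability_pct_tolerance(replicates, lsl, usl)
    bias = bias_pct_tolerance(replicates, reference_value, lsl, usl)
    return {
        "repeatability_pct_tol": round(rep, 1),
        "repeatability_pass": rep <= rep_limit,
        "bias_pct_tol": round(bias, 1),
        "bias_pass": bias <= 10.0,
    }


# Hypothetical six replicates at 100 % of target; specification 95.0-105.0 %
replicates = [99.6, 100.4, 100.1, 99.8, 100.6, 100.2]
print(evaluate(replicates, reference_value=100.0, lsl=95.0, usl=105.0))
```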

Statistical Evaluation: Establish a Linearity Range using studentized residuals from regression analysis. The method is considered linear as long as studentized residuals remain within ±1.96 boundaries [23].
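The sketch below shows the residual check, assuming internally studentized residuals from an ordinary least-squares straight line. The exact residual variant and model used in [23] may differ. Each residual e_i is divided by s * sqrt(1 - h_ii), where s is the residual standard error and h_ii is the leverage of point i. The concentration and response data are hypothetical.

```python
from math import sqrt


def studentized_residuals(x, y):
    """Internally studentized residuals from an ordinary least-squares line y = a + b*x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    a = y_bar - b * x_bar
    resid = [yi - (a + b * xi) for xi, yi in zip(x, y)]
    s = sqrt(sum(e ** 2 for e in resid) / (n - 2))       # residual standard error
    lev = [1 / n + (xi - x_bar) ** 2 / sxx for xi in x]   # leverage h_ii
    return [e / (s * sqrt(1 - h)) for e, h in zip(resid, lev)]


def linear_range_ok(x, y, limit=1.96):
    """True if every studentized residual lies within +/- limit."""
    return all(abs(r) <= limit for r in studentized_residuals(x, y))


# Hypothetical data: five levels (50-150 % of target) in duplicate
conc = [50, 50, 75, 75, 100, 100, 125, 125, 150, 150]
area = [1004, 1012, 1498, 1507, 2005, 1996, 2490, 2503, 3010, 2998]
print([round(r, 2) for r in studentized_residuals(conc, area)])
print("Linear across range:", linear_range_ok(conc, area))
```

For a straight-line fit, internally studentized residuals can never exceed sqrt(n − 2) in magnitude. With only five points, the ±1.96 check would therefore always pass, so enough levels or replicates are needed for it to be informative.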

This tolerance-based approach directly links method validation to product quality risks, enabling science-based justification of acceptance criteria and providing clear understanding of how method performance impacts OOS rates.

Knowledge Management and Ongoing Monitoring

Knowledge Management (KM) serves as the foundation for effective risk-based method lifecycle management, transforming risk assessment from subjective opinion to objective, data-driven decisions [22]. The relationship between KM inputs and QRM outputs creates a continuous improvement cycle that maintains method robustness throughout its operational lifespan.

Table: Knowledge Management Inputs for Method Risk Management

| Knowledge Management Input | QRM Application | Impact on Method Lifecycle |
|---|---|---|
| Annual Product Review (APR) Trends | Assign Probability scores in RPN based on actual historical method failure rates | Replaces subjective estimates with data-driven risk probabilities |
| Method Transfer History | Identifies and re-assesses risks for methods transferred between sites or laboratories | Highlights site-specific implementation risks |
| Deviation and CAPA Effectiveness Data | Verifies that mitigation actions successfully reduced risk as predicted during Risk Review | Demonstrates effectiveness of prior risk control measures |
| Method Development Studies | Provides scientific basis for determining Severity and defining Critical Method Parameters (CMPs) | Establishes proven acceptable ranges for method parameters |
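The first row of the table replaces a subjective Probability estimate with an observed failure rate, which can be sketched as a simple lookup. The rate thresholds, the minimum number of runs, and the function name below are hypothetical. A QRM procedure would define and justify its own.

```python
def probability_score(failures: int, runs: int, min_runs: int = 50):
    """Map an observed method failure rate (e.g., from APR trending) to a 1-5 score.

    Returns None when there is too little history to support a data-driven score,
    signalling that a documented expert-judgement score should be used instead.
    Thresholds are hypothetical.
    """
    if runs < min_runs:
        return None
    rate = failures / runs
    for upper, score in ((0.001, 1), (0.005, 2), (0.02, 3), (0.05, 4)):
        if rate <= upper:
            return score
    return 5


# Hypothetical APR data: (SST or method-related failures, total runs) per method
history = {"Assay HPLC": (3, 480), "Dissolution": (0, 520), "New impurity method": (1, 12)}
for method, (failures, runs) in history.items():
    print(method, probability_score(failures, runs))
```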

The critical link between knowledge management and risk management becomes evident in the Risk Review phase, where method performance data validates initial risk assessments and drives continuous improvement. Modern electronic Quality Management Systems (eQMS) and Laboratory Information Management Systems (LIMS) enable automated tracking of method performance metrics, creating a living risk profile that updates as new data becomes available [22]. This dynamic approach ensures that method risks are continually re-evaluated based on actual performance rather than remaining static after initial validation.

Comparative Analysis: Traditional vs. Risk-Based Approaches

Experimental Data and Performance Metrics

A comparative analysis of traditional versus risk-based approaches to method lifecycle management reveals significant differences in operational outcomes, regulatory compliance, and resource allocation. The tolerance-based method for establishing acceptance criteria provides a scientifically rigorous framework that directly links method performance to product quality risks.

Table: Performance Comparison of Method Validation Approaches

| Evaluation Parameter | Traditional Approach | Risk-Based Approach | Impact on Quality Decision |
|---|---|---|---|
| Acceptance Criteria Basis | Fixed % RSD/CV regardless of product specifications | % of specification tolerance or margin | Directly links method error to OOS risk |
| Resource Allocation | Uniform intensity across all methods | Scalable effort based on risk priority | 30-50% reduction in low-risk method validation efforts |
| OOS Investigation Rate | Higher false OOS due to inappropriate criteria | Scientifically justified criteria reduce false OOS | 40-60% reduction in unnecessary investigations |
| Method Robustness | Focus on point estimates of performance | Understanding of method capabilities across design space | Improved method transfer success rates |
| Regulatory Flexibility | Limited data to support method adjustments | Science-based justification for changes | Enhanced regulatory confidence for post-approval changes |

Reported results indicate that the risk-based approach significantly improves decision-making throughout the method lifecycle. For example, methods validated using tolerance-based acceptance criteria have been reported to show a 40-60% reduction in unnecessary OOS investigations without compromising product quality [23]. This reduction stems from properly accounting for method variability within the specification range, rather than treating all method errors as equal regardless of their impact on quality decisions.

The Degree of Formality concept introduced in Q9(R1) enables more efficient resource allocation by matching the rigor of the QRM process to the level of risk [22]. High-complexity methods such as cell-based bioassays or novel technology platforms require formal QRM with cross-functional teams and structured tools like FMEA. In contrast, well-understood compendial methods may only require informal QRM with simplified documentation. This risk-proportionate approach typically reduces validation resources for low-risk methods by 30-50% while strengthening controls for high-risk methods [22].

The Scientist's Toolkit: Essential Tools and Resources for Method QRM

Implementing risk-based method lifecycle management requires specific tools and methodologies to effectively identify, assess, and control method risks. The following essential resources form the foundation of a robust QRM program for analytical methods.

Table: Essential Tools and Resources for Method QRM

| Tool/Resource | Function in QRM | Application in Method Lifecycle |
|---|---|---|
| FMEA/FMECA Software | Systematic risk assessment tool for identifying and prioritizing failure modes | Quantifies risk priority numbers (RPN) for method variables; enables data-driven control strategy |
| Statistical Analysis Package | Advanced analytics for method capability assessment and trend analysis | Calculates tolerance-based acceptance criteria; analyzes method robustness across design space |
| Reference Standards | Qualified materials for method accuracy and precision evaluation | Establishes method bias relative to tolerance; supports system suitability testing |
| Design of Experiments (DoE) | Structured approach for understanding method parameter interactions | Maps method design space; identifies critical method parameters requiring tight control |
| Electronic Lab Notebook (ELN) | Documentation platform for risk management activities and decisions | Ensures transparent risk communication; maintains historical risk assessment data |
| Method Validation Protocols | Predefined experimental designs for risk-based method qualification | Standardizes approach to validation; ensures consistent application of QRM principles |
| Stability Testing Systems | Controlled environments for assessing method robustness over time | Provides data for risk review; demonstrates method stability under various conditions |

Integrating ICH Q9 Quality Risk Management into analytical method lifecycle management represents a paradigm shift from standardized compliance to science-based, patient-focused quality assurance. This approach creates a direct linkage between method performance and product quality decisions, enabling organizations to allocate resources effectively while enhancing regulatory confidence. The tolerance-based methodology for establishing acceptance criteria provides a scientifically rigorous framework that acknowledges the real-world impact of method variability on quality decisions.

The Q9(R1) revisions further strengthen this framework by emphasizing appropriate formality and managing subjectivity in risk assessments. When combined with robust knowledge management systems, this creates a dynamic, data-driven approach to method lifecycle management that continuously improves through operational experience. As regulatory authorities increasingly adopt risk-based inspection approaches, organizations with mature QRM integration will benefit from more efficient inspections and greater operational flexibility [22].

For researchers, scientists, and drug development professionals, adopting these risk-based principles represents both a compliance necessity and a strategic opportunity. The resulting methods are not only more robust and reliable but also more economically sustainable throughout the product lifecycle. By building quality into methods through risk-based design rather than relying solely on end-product testing, organizations can achieve higher first-pass success rates, reduce unnecessary investigations, and ultimately deliver safer, more effective medicines to patients.

From Theory to Practice: Modern Method Development and Lifecycle Application

Implementing Quality-by-Design (QbD) in Method Development

The pharmaceutical industry is undergoing a significant transformation in how it ensures product quality, moving away from traditional quality-by-testing approaches toward a more systematic, science-based framework known as Quality by Design (QbD). This paradigm shift, encouraged by regulatory agencies worldwide, emphasizes building quality into products and processes from the beginning rather than relying solely on end-product testing [24]. When applied to analytical method development, the QbD approach creates more robust, reliable, and fit-for-purpose methods that consistently deliver quality data throughout their lifecycle.

The fundamental principle of Analytical Quality by Design (AQbD) is that quality cannot be tested into products but must be designed into the development process. This systematic approach begins with predefined objectives and emphasizes method understanding and control based on sound science and quality risk management [25] [26]. The conventional approach to analytical method validation, which often treats validation as a one-time check-box exercise, is being reimagined through a lifecycle approach that aligns with modern process validation concepts [26]. This article compares the traditional and QbD approaches to method development, providing experimental data and case studies that demonstrate the enhanced performance characteristics of QbD-based methods.

Theoretical Framework: QbD Principles and Terminology

Core Components of the AQbD Framework

The Analytical Quality by Design framework consists of several interconnected components that work together to ensure method robustness and reliability:

Analytical Target Profile (ATP): The ATP is a foundational element that defines the method requirements and performance criteria before development begins. It specifies what the method needs to measure and to what level of performance, including parameters such as precision, accuracy, and sensitivity [26] [27]. The ATP serves as the focal point for all stages of the analytical lifecycle, similar to a user requirement specification for analytical equipment qualification.

Critical Method Attributes (CMAs) and Critical Method Parameters (CMPs): CMAs are the key performance characteristics that must be controlled to ensure the method meets the ATP requirements. CMPs are the variables that significantly impact these attributes [25]. For HPLC methods, typical CMPs include mobile phase composition, buffer pH, column temperature, and flow rate [28].

Method Operable Design Region (MODR): The MODR represents the multidimensional combination and interaction of method variables that have been demonstrated to provide assurance of quality [24]. Operating within the MODR provides flexibility while maintaining method performance.

The Three-Stage Analytical Procedure Lifecycle

A properly implemented AQbD approach follows three distinct stages:

- Stage 1: Method Design - Developing the method based on the ATP through risk assessment and experimental design

- Stage 2: Method Qualification - Demonstrating the method's capability to meet the ATP requirements

- Stage 3: Continued Method Verification - Ongoing monitoring to ensure the method remains in a state of control [26]

This lifecycle approach aligns with the concepts described in USP General Chapter <1220> "Analytical Procedure Lifecycle" and ICH guidelines Q2(R2) and Q14, which provide a modern framework for analytical procedure development and validation [24].

Figure 1: AQbD Workflow showing the systematic relationship between key components across the method lifecycle stages.

Comparative Analysis: Traditional vs. QbD Approach

Fundamental Differences in Philosophy and Implementation

The traditional approach to analytical method development relies heavily on trial-and-error experimentation and one-factor-at-a-time (OFAT) optimization. In contrast, the QbD approach employs systematic, risk-based development with multivariate experimentation [27]. This fundamental difference in philosophy leads to significant variations in methodology, documentation, and long-term performance.

Traditional method development typically focuses on satisfying regulatory requirements as a checklist exercise, with limited understanding of method robustness and ruggedness. The method validation is often treated as a one-time event performed after development is complete, with knowledge transfer to quality control laboratories frequently being problematic [26].

QbD-based method development emphasizes scientific understanding and risk management throughout the method lifecycle. The approach identifies and controls critical method parameters, establishes a method operable design region, and implements continuous verification to ensure ongoing method performance [24].

Table 1: Comprehensive Comparison Between Traditional and QbD-Based Method Development Approaches

| Aspect | Traditional Approach | QbD Approach |
|---|---|---|
| Development Strategy | Trial-and-error, one-factor-at-a-time | Systematic, risk-based, multivariate design |
| Validation Focus | Regulatory compliance, check-box exercise | Method understanding, fitness-for-purpose |
| Knowledge Management | Limited transfer of tacit knowledge | Comprehensive knowledge space definition |
| Robustness Assessment | Often evaluated after validation | Built into development phase using DoE |
| Regulatory Flexibility | Limited, changes require regulatory submission | Enhanced within established design space |
| Lifecycle Perspective | Focus on one-time validation | Continuous verification and improvement |
| Control Strategy | Fixed operating conditions | Flexible within method operable design region |
| Resource Investment | Lower initial investment, potential rework | Higher initial investment, reduced failures |

Impact on Method Performance and Business Outcomes

The QbD approach to analytical method development delivers tangible benefits in method performance and business efficiency. Methods developed using QbD principles demonstrate superior robustness when transferred between laboratories, reduced out-of-specification (OOS) results during routine use, and greater flexibility to accommodate changes in materials or equipment [26].

From a business perspective, the initial investment in systematic development is offset by reduced method failures, fewer investigations, and more efficient technology transfers. Regulatory flexibility within the approved design space also allows for continuous improvement without additional submissions [24] [27].

Experimental Protocols and Case Studies

QbD-Based HPLC Method Development for Treprostinil

A recent study demonstrates the application of AQbD principles to develop a stability-indicating RP-HPLC method for treprostinil, a drug used to treat pulmonary arterial hypertension [25]. The method was developed using a central composite design (CCD) to model the relationship between critical method parameters and observed responses.

Experimental Protocol:

- ATP Definition: The method requirements included separation of treprostinil from degradation products, precise quantification (RSD < 2%), and runtime under 10 minutes.

- Risk Assessment: Initial risk identification using Ishikawa diagram identified flow rate, buffer composition, and column temperature as high-risk factors.

- DoE Implementation: A central composite design was employed with three factors at multiple levels, analyzing responses including retention time, theoretical plates, and tailing factor.

- MODR Establishment: The method operable design region was established for buffer concentration, mobile phase ratio, and column temperature, with optimized conditions of 0.01N KH₂PO₄, 36.35:63.35 v/v (buffer:diluent), and 31.4°C, respectively.

- Control Strategy: System suitability tests were established to ensure method performance within the MODR.
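For readers unfamiliar with this design, the sketch below generates the run list for a three-factor central composite design and converts coded levels to instrument settings. The design has 2^3 factorial points at ±1, six axial (star) points at ±α, and replicated center points. Several values are assumptions: α uses the rotatable choice, (2^k)^(1/4), and the number of center points and the factor ranges are illustrative. This article does not give the settings actually used in [25].

```python
from itertools import product


def central_composite(k: int, n_center: int = 6, alpha=None):
    """Coded design matrix for a central composite design with k factors.

    Factorial points at +/-1, axial (star) points at +/-alpha, plus center points.
    Default alpha = (2**k) ** 0.25, the rotatable choice.
    """
    if alpha is None:
        alpha = (2 ** k) ** 0.25
    factorial = [list(p) for p in product((-1.0, 1.0), repeat=k)]
    axial = []
    for i in range(k):
        for level in (-alpha, alpha):
            point = [0.0] * k
            point[i] = level
            axial.append(point)
    center = [[0.0] * k for _ in range(n_center)]
    return factorial + axial + center


def decode(coded_run, centers, half_ranges):
    """Convert coded levels to actual settings: actual = center + coded * half_range."""
    return [c + x * h for x, c, h in zip(coded_run, centers, half_ranges)]


# Hypothetical factors and ranges (not taken from [25]):
# flow rate (mL/min), buffer fraction (% v/v), column temperature (deg C)
centers = [1.0, 36.0, 30.0]
half_ranges = [0.1, 4.0, 5.0]
design = central_composite(k=3)
print(len(design), "runs")
for run in design:
    print([round(v, 2) for v in decode(run, centers, half_ranges)])
```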

Results: The optimized method achieved excellent separation with treprostinil eluting at 2.579 minutes within a 6-minute runtime. The method demonstrated precision (RSD = 0.4%), robustness (RSD < 2%), and effectively separated treprostinil from degradation products under various forced degradation conditions [25].
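The ATP states its acceptance criteria before development begins, so checking the reported results against it is mechanical. The sketch below encodes the treprostinil ATP from the protocol above as a small data structure: RSD below 2%, runtime under 10 minutes, and separation from degradation products. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnalyticalTargetProfile:
    """Illustrative ATP; limits follow the treprostinil protocol, field names are hypothetical."""
    max_rsd_pct: float
    max_runtime_min: float
    requires_degradant_separation: bool = True


def atp_gaps(atp, rsd_pct, runtime_min, degradants_resolved):
    """Return a list of unmet ATP requirements (an empty list means the ATP is met)."""
    gaps = []
    if rsd_pct >= atp.max_rsd_pct:
        gaps.append(f"precision: RSD {rsd_pct}% is not below {atp.max_rsd_pct}%")
    if runtime_min >= atp.max_runtime_min:
        gaps.append(f"runtime: {runtime_min} min is not under {atp.max_runtime_min} min")
    if atp.requires_degradant_separation and not degradants_resolved:
        gaps.append("specificity: degradation products not resolved")
    return gaps


atp = AnalyticalTargetProfile(max_rsd_pct=2.0, max_runtime_min=10.0)
# Results reported for the optimized method: RSD 0.4 %, 6-minute runtime, degradants separated
print(atp_gaps(atp, rsd_pct=0.4, runtime_min=6.0, degradants_resolved=True) or "Meets ATP")
```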

QbD for Metformin Hydrochloride HPLC Method

Another study applied QbD principles to develop an HPLC method for metformin hydrochloride in tablet dosage forms [29]. The researchers used a two-factor, three-level design with buffer pH and mobile phase composition as independent factors.

Experimental Protocol:

- Factor Screening: Risk assessment identified buffer pH and mobile phase composition as critical parameters.

- Response Surface Methodology: Central composite design was used to study the effects on retention time, peak area, and symmetry factor.

- Optimization: Desirability function was applied to simultaneously optimize multiple analytical attributes.

- Validation: The optimized method was validated according to ICH guidelines.
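The desirability step can be illustrated with the widely used Derringer-Suich form. Each response is mapped to a score between 0 and 1, and the overall desirability D is the geometric mean of those scores. This article does not describe the desirability settings used in [29], so the goals, limits, and response values below are hypothetical.

```python
from math import prod


def d_maximize(y, low, high, weight=1.0):
    """Larger-is-better: 0 at or below low, 1 at or above high."""
    if y <= low:
        return 0.0
    if y >= high:
        return 1.0
    return ((y - low) / (high - low)) ** weight


def d_target(y, low, target, high, weight=1.0):
    """Target-is-best: 1 at target, falling linearly (weight=1) to 0 at low and high."""
    if y < low or y > high:
        return 0.0
    if y <= target:
        return ((y - low) / (target - low)) ** weight
    return ((high - y) / (high - target)) ** weight


def overall_desirability(ds):
    """Geometric mean; any single d = 0 makes the whole condition unacceptable."""
    return prod(ds) ** (1.0 / len(ds))


# Hypothetical responses for two candidate conditions
candidates = {
    "Condition A": {"rt": 4.1, "area": 1.02e6, "symmetry": 1.10},
    "Condition B": {"rt": 2.2, "area": 0.95e6, "symmetry": 1.45},
}
for name, r in candidates.items():
    ds = [
        d_target(r["rt"], low=2.0, target=4.0, high=8.0),        # retention time (min)
        d_maximize(r["area"], low=0.8e6, high=1.1e6),           # peak area
        d_target(r["symmetry"], low=0.8, target=1.0, high=1.5), # symmetry factor
    ]
    print(f"{name}: D = {overall_desirability(ds):.3f}")
```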

Results: The optimal conditions consisted of 0.02M acetate buffer (pH 3) and methanol (70:30 v/v) at a flow rate of 1 mL/min. The method was successfully applied for content evaluation and dissolution studies of metformin hydrochloride tablets [29].

Ceftriaxone Sodium HPLC Method Development

A QbD-based approach was also implemented for developing an HPLC method for ceftriaxone sodium in pharmaceutical dosage forms [28]. The researchers applied central composite design to optimize mobile phase composition and pH, with responses including retention time, theoretical plates, and peak asymmetry.

Results: The optimized method used a Phenomenex C-18 column with mobile phase acetonitrile to water (70:30 v/v, pH 6.5 with 0.01% triethylamine) at 1 mL/min flow rate. The method showed excellent linearity (r² = 0.991) across 10-200 μg/mL range, with system suitability parameters within acceptable limits (tailing factor = 1.49, theoretical plates = 5236) [28].
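System suitability figures such as those reported above come from standard peak measurements. The sketch below uses two formulas:

- the half-height plate count, N = 5.54 (tR/W0.5)²
- the USP tailing factor, T = W0.05/(2f), where f is the distance from the peak front to the peak apex at 5% of peak height

The limits N > 2000 and T ≤ 2.0 are commonly cited defaults, assumed here for illustration. A validated method would apply the limits written into its own procedure. The peak measurements are hypothetical.

```python
def plates_half_height(t_r, w_half):
    """Theoretical plates, half-height method: N = 5.54 * (tR / W0.5)^2."""
    return 5.54 * (t_r / w_half) ** 2


def tailing_factor(w_005, f_005):
    """USP tailing factor T = W0.05 / (2 f), f = front half-width at 5 % of peak height."""
    return w_005 / (2 * f_005)


def system_suitable(n_plates, tailing, min_plates=2000, max_tailing=2.0):
    """Assumed default limits; replace with the method's own SST criteria."""
    return n_plates > min_plates and tailing <= max_tailing


# Hypothetical peak measurements (minutes)
n = plates_half_height(t_r=4.20, w_half=0.136)
t = tailing_factor(w_005=0.40, f_005=0.16)
print(f"N = {n:.0f}, T = {t:.2f}, suitable: {system_suitable(n, t)}")

# Values reported for the ceftriaxone method [28], checked against the assumed limits
print("Ceftriaxone SST:", system_suitable(5236, 1.49))
```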

Table 2: Performance Comparison of QbD-Developed HPLC Methods Across Multiple APIs

| Drug Compound | Experimental Design | Optimized Conditions | Method Performance |
|---|---|---|---|
| Treprostinil [25] | Central Composite Design | Buffer (0.01N KH₂PO₄):Diluent (36.35:63.35 v/v), 31.4°C | Retention time: 2.579 min, Precision: 0.4% RSD |
| Metformin HCl [29] | Central Composite Design | Acetate buffer pH 3:Methanol (70:30 v/v), 1 mL/min | Successful application to content evaluation and dissolution studies |
| Ceftriaxone Sodium [28] | Central Composite Design | Acetonitrile:Water (70:30 v/v), pH 6.5, 1 mL/min | Linearity: r² = 0.991, Tailing factor: 1.49 |
| Remogliflozin Etabonate & Vildagliptin [30] | Box-Behnken Design | 10 mM KH₂PO₄ buffer pH 3:Methanol (10:90 v/v), 0.8 mL/min | %RSD < 2.0, sensitive LOD and LOQ |
| Tafamidis Meglumine [31] | Box-Behnken Design | 0.1% ortho-phosphoric acid in methanol:acetonitrile (50:50 v/v) | Retention time: 5.02 ± 0.25 min, Linearity: R² = 0.9998 |

The Scientist's Toolkit: Essential Reagents and Solutions

Successful implementation of AQbD requires specific reagents, instruments, and software solutions. The following table summarizes key components used in the case studies discussed in this article.

Table 3: Essential Research Reagent Solutions for QbD-Based HPLC Method Development

| Item Category | Specific Examples | Function in AQbD |
|---|---|---|
| Chromatographic Columns | Agilent Express C18, Phenomenex ODS Hypersyl, Cosmosil C18, Qualisil BDS C18 | Stationary phase for separation; column chemistry is a critical method parameter |
| Buffer Systems | Potassium dihydrogen phosphate (KH₂PO₄), Acetate buffer, Ortho-phosphoric acid | Mobile phase component controlling pH and ionic strength; critical for reproducibility |
| Organic Modifiers | HPLC-grade Methanol, Acetonitrile | Mobile phase components affecting retention and selectivity; optimized in DoE |
| Design Software | Design-Expert, Minitab, MODDE | Statistical design and analysis of experiments; enables multivariate optimization |
| HPLC Systems | Agilent HPLC with PDA detector, Waters Alliance Systems, Shimadzu Prominence | Instrument platform; detector selection impacts sensitivity and specificity |
| Column Heaters | Thermostatted column compartments | Control column temperature as critical parameter affecting retention and efficiency |
| pH Meters | Mettler Toledo with combination electrodes | Precise pH adjustment of mobile phases; critical for reproducibility |
| Ultrasonication Baths | Bandelin Sonorex, Branson Ultrasonic | Mobile phase degassing and sample dissolution; prevents bubble formation |

Analytical Method Validation Within QbD Framework

Enhanced Validation Approach

In the QbD paradigm, method validation is not a one-time event but an integral part of the method lifecycle. The enhanced approach focuses on demonstrating that the method is fit-for-purpose based on the predefined ATP [26]. Method qualification (Stage 2) confirms the method's capability to meet the ATP requirements under routine operating conditions.

The validation strategy incorporates knowledge gained during method design, including understanding of the MODR and control strategy. This comprehensive approach typically includes assessment of accuracy, precision, specificity, linearity, range, detection and quantitation limits, and robustness—but with greater scientific rationale behind acceptance criteria [26].

Continued Method Performance Verification

Stage 3 of the method lifecycle involves ongoing assurance that the method remains in a state of control during routine use. This includes continuous monitoring of system suitability test results, periodic assessment of method performance through quality control charting, and investigation of any trends or deviations [26].
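As a minimal sketch of the control-charting step, the Python example below builds an individuals (Shewhart) chart for one system suitability result per run, such as resolution. It assumes 3-sigma limits with sigma estimated from the average moving range divided by d₂ = 1.128, and it flags only points outside those limits; the function names and resolution values are hypothetical, and a real monitoring program would also apply trend rules defined in the laboratory's own procedures.

```python
from statistics import mean

# d2 bias-correction constant for moving ranges of two consecutive points.
D2 = 1.128


def individuals_limits(values):
    """Return (LCL, center line, UCL) for an individuals (I) chart.

    Sigma is estimated as the average moving range divided by d2, a common
    choice when one result is recorded per run.
    """
    if len(values) < 2:
        raise ValueError("need at least two baseline results")
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    sigma_hat = mean(moving_ranges) / D2
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def out_of_control(values, limits):
    """Indices of results outside the 3-sigma limits (single-point rule only)."""
    lcl, _, ucl = limits
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


# Hypothetical resolution values from historical system suitability runs.
baseline = [2.10, 2.12, 2.08, 2.11, 2.09, 2.13, 2.10, 2.11]
limits = individuals_limits(baseline)
new_runs = [2.10, 2.12, 1.90]
print(limits, out_of_control(new_runs, limits))  # the 1.90 run falls below the LCL
```

A flagged point would then trigger the investigation of trends or deviations described above.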

The continued verification activities provide data to support method improvements and ensure the method remains fit-for-purpose throughout its lifecycle. This aligns with modern quality systems that emphasize continuous improvement rather than static validation states.

Regulatory Perspective and Future Directions

Evolving Regulatory Landscape

Regulatory agencies worldwide are encouraging the adoption of QbD principles for pharmaceutical development, including analytical methods. The International Council for Harmonisation (ICH) has developed two guidelines, ICH Q14 on analytical procedure development and ICH Q2(R2) on analytical procedure validation, that describe QbD principles for analytical methods [24]. Both were finalized (ICH Step 4) in November 2023.

The United States Pharmacopeia (USP) has developed General Chapter <1220> "Analytical Procedure Lifecycle" that provides a comprehensive framework for implementing AQbD concepts [24]. This chapter describes a holistic approach for managing the analytical procedure lifecycle and serves as a valuable resource for industry and regulators.

Integration with Green Chemistry Principles

A growing trend in AQbD is the integration of green chemistry principles into method development. Several recent studies have assessed the environmental impact and practicality of methods using tools such as the Green Analytical Procedure Index (GAPI), the Analytical GREEnness (AGREE) metric, and the Blue Applicability Grade Index (BAGI) [25] [31].

The treprostinil method development study, for example, reported a GAPI score of 83, classifying the method as environmentally friendly [25]. Similarly, the tafamidis meglumine method achieved an AGREE score of 0.83 (on a 0 to 1 scale), indicating high environmental sustainability [31]. Integrating green assessment with QbD is likely to shape the future of analytical method development in the pharmaceutical industry.

The implementation of Quality-by-Design in analytical method development represents a significant advancement over traditional approaches. The systematic, science-based framework of AQbD results in more robust, reliable, and fit-for-purpose methods that consistently deliver quality data throughout their lifecycle.

Data from the case studies summarized above indicate that QbD-developed methods achieve strong performance, and AQbD is associated with enhanced robustness, fewer method failures, and greater regulatory flexibility. Although it requires a greater initial investment in development, the AQbD approach delivers long-term benefits through fewer investigations, more efficient technology transfers, and opportunities for continuous improvement.

As the pharmaceutical industry continues to embrace QbD principles, the integration of AQbD with green chemistry concepts and digital transformation initiatives will further enhance the sustainability, efficiency, and reliability of analytical methods. The ongoing evolution of regulatory guidelines supports this paradigm shift, positioning AQbD as the standard for analytical method development in modern pharmaceutical quality systems.

Leveraging Design of Experiments (DoE) for Efficient Optimization

In the rigorous world of pharmaceutical development, establishing robust analytical methods is paramount to ensuring drug safety, efficacy, and quality. Method validation, the process of proving that an analytical procedure is suitable for its intended purpose, relies on definitive evidence of precision, accuracy, and robustness. Traditionally, methods were optimized with One-Factor-at-a-Time (OFAT) approaches, which are resource-intensive and, because each factor is varied while all others are held fixed, cannot detect interactions between critical method parameters [32].

Within this context, Design of Experiments (DoE) has emerged as a superior statistical framework for efficient optimization. DoE is a systematic, multipurpose tool that enables researchers to investigate the simultaneous impact of multiple factors on key analytical responses, building a deep understanding of the method's performance and its limitations [33]. By integrating DoE into method validation strategies, scientists can move beyond simple verification to science-based process knowledge that aligns with modern regulatory paradigms such as Quality by Design (QbD) [34] [35]. This guide objectively compares the performance of different DoE optimization criteria, providing experimental data and protocols to inform researchers and drug development professionals in their pursuit of efficient and reliable method optimization.
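To illustrate why OFAT can miss interactions, the sketch below uses a hypothetical, noise-free resolution model in coded units (pH and column temperature at -1/+1) that contains a pH × temperature interaction. An OFAT study that varies pH only at low temperature sees a single pH effect, whereas a 2² factorial estimates both main effects and the interaction from four runs. The model and its coefficients are illustrative assumptions, not data from the cited studies.

```python
from itertools import product


def resolution(ph, temp):
    """Hypothetical noise-free model in coded units (-1 = low, +1 = high).

    The -0.4 * ph * temp term is a pH x temperature interaction.
    """
    return 2.0 + 0.3 * ph - 0.1 * temp - 0.4 * ph * temp


# OFAT: vary pH while temperature is held at its low level.
ofat_ph_effect = resolution(+1, -1) - resolution(-1, -1)

# 2^2 full factorial: run all four combinations once.
runs = {(ph, temp): resolution(ph, temp) for ph, temp in product((-1, 1), repeat=2)}


def effect(sign_of):
    """Mean response where sign_of(run) > 0 minus mean response where it is < 0."""
    plus = [y for run, y in runs.items() if sign_of(run) > 0]
    minus = [y for run, y in runs.items() if sign_of(run) < 0]
    return sum(plus) / len(plus) - sum(minus) / len(minus)


ph_main = effect(lambda run: run[0])
temp_main = effect(lambda run: run[1])
ph_x_temp = effect(lambda run: run[0] * run[1])
ph_effect_at_high_temp = resolution(+1, +1) - resolution(-1, +1)

print(f"OFAT pH effect (low temp): {ofat_ph_effect:.2f}")
print(f"pH effect at high temp:    {ph_effect_at_high_temp:.2f}")
print(f"Factorial: pH {ph_main:.2f}, temp {temp_main:.2f}, pH x temp {ph_x_temp:.2f}")
```

With these assumed coefficients, the OFAT pH effect (1.4) and the pH effect at high temperature (-0.2) have opposite signs; the factorial's interaction estimate (-0.8) captures exactly this dependence, which the OFAT study never observes.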

Comparative Analysis of DoE Optimization Criteria

Selecting the appropriate optimization criterion is a critical first step in designing an efficient experiment. Different criteria are designed to achieve different primary objectives, such as precise parameter estimation or accurate prediction. The table below provides a structured comparison of the fundamental and advanced DoE optimization criteria to guide this selection.

Table: Comparison of DoE Optimization Criteria and Their Applications

| Criterion | Primary Objective | Key Mathematical Focus | Best-Suited Application in Method Validation |
|---|---|---|---|
| D-optimality | Maximize overall information gain for parameter estimation | Maximize the determinant of the information matrix, $\max \lvert X^\top X \rvert$ [36] | Screening experiments to identify Critical Method Parameters (CMPs) from a large set of variables [33] [36]. |
| A-optimality | Minimize the average variance of parameter estimates | Minimize the trace of the inverse information matrix, $\min \operatorname{tr}[(X^\top X)^{-1}]$ [36] | When reliable estimates for all method factors are equally important and the goal is balanced precision. |
| E-optimality | Control the worst-case variance among parameter estimates | Minimize the largest eigenvalue of $(X^\top X)^{-1}$ [36] | Ensuring that even the least precisely estimated method parameter meets a predefined level of precision. |
| G-optimality | Minimize the maximum prediction variance across the design space | $\min \max_{x \in \mathcal{X}} x^\top (X^\top X)^{-1} x$ [36] | Robustness testing of an analytical method, bounding the worst-case prediction variance. |
| V-optimality | Minimize the average prediction variance over the design space | $\min \int_{\mathcal{X}} x^\top (X^\top X)^{-1} x \, dx$ [36] | Optimizing a method for reliable performance across its entire operating range. |
| Space-filling | Ensure uniform coverage and exploration of the design space | Geometric and distance-based criteria (e.g., Latin hypercube) [36] | Developing methods for complex, non-linear processes or when the underlying model is unknown. |

Here $X$ is the model matrix of the design, $x$ is the model vector at a single point, and $\mathcal{X}$ is the design region.
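The criteria in the table can be computed directly for a candidate design. The NumPy sketch below assumes a first-order model with intercept (each model row is [1, x1, x2, ...]) in coded factors on [-1, 1], and it approximates the G- and V-criteria by evaluating the scaled prediction variance on a regular grid rather than over the continuous region. The function `design_criteria` and the two example designs are hypothetical.

```python
import numpy as np


def model_matrix(points):
    """Intercept + first-order terms: each row is [1, x1, x2, ...] (assumed model)."""
    pts = np.asarray(points, dtype=float)
    return np.column_stack([np.ones(len(pts)), pts])


def design_criteria(points, grid_steps=21):
    """D-, A-, E-, G- and V-criteria for a design in coded units on [-1, 1]^k."""
    X = model_matrix(points)
    info = X.T @ X                        # information matrix X^T X
    info_inv = np.linalg.inv(info)        # proportional to Cov(beta_hat)
    k = X.shape[1] - 1
    axis = np.linspace(-1.0, 1.0, grid_steps)
    grid = np.array(np.meshgrid(*[axis] * k)).reshape(k, -1).T
    F = model_matrix(grid)
    # Scaled prediction variance f(x)^T (X^T X)^{-1} f(x) at every grid point.
    pred_var = np.einsum("ij,jk,ik->i", F, info_inv, F)
    return {
        "D": np.linalg.det(info),                 # larger is better
        "A": np.trace(info_inv),                  # smaller is better
        "E": np.linalg.eigvalsh(info_inv).max(),  # smaller is better
        "G": pred_var.max(),                      # smaller is better (grid max)
        "V": pred_var.mean(),                     # smaller is better (grid average)
    }


designs = {
    "2^2 factorial": [(-1, -1), (1, -1), (-1, 1), (1, 1)],
    "3 corners + center": [(-1, -1), (1, -1), (-1, 1), (0, 0)],
}
for name, pts in designs.items():
    crit = design_criteria(pts)
    print(name, {key: round(float(val), 3) for key, val in crit.items()})
```

For these two four-run designs, the full factorial has the larger determinant (64 versus 24) and the smaller trace (0.75 versus 1.25), so it is preferred under both D- and A-optimality for this assumed model.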

Interpretation of Comparative Data

The choice of criterion involves inherent trade-offs. D-optimal designs are highly efficient for factor screening but may not provide uniform precision across the design space [36]. A-optimal designs give more balanced precision across all parameters but may require more experimental runs to achieve it. For method robustness studies, where consistent performance under small, deliberate variations must be demonstrated (as required by ICH guidelines [37]), G-optimality is particularly valuable because it minimizes the largest prediction variance in the design space. When the goal is to establish a Method Operable Design Region (MODR) within a QbD framework, V-optimal or space-filling designs are often preferred because they model the entire method operating space [38].
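Space-filling designs such as the Latin hypercube mentioned in the table are straightforward to generate. The standard-library sketch below implements a basic, unoptimized Latin hypercube: each factor range is split into equal strata, each stratum is sampled exactly once, and strata are paired at random across factors. The factor ranges in the usage example are hypothetical, and practical tools usually layer a maximin or correlation criterion on top of this basic construction.

```python
import random


def latin_hypercube(n_runs, bounds, seed=None):
    """Basic Latin hypercube sample (no maximin or correlation optimization).

    bounds: list of (low, high) tuples, one per factor.
    Each factor range is split into n_runs equal strata; every stratum is used
    exactly once per factor, with a random point inside it, and the strata are
    shuffled independently for each factor.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        width = (high - low) / n_runs
        strata = list(range(n_runs))
        rng.shuffle(strata)
        columns.append([low + (s + rng.random()) * width for s in strata])
    # Transpose the per-factor columns into one tuple per experimental run.
    return [tuple(col[i] for col in columns) for i in range(n_runs)]


# Hypothetical factors: buffer pH, column temperature (deg C), % organic modifier.
bounds = [(2.8, 3.2), (25.0, 35.0), (25.0, 35.0)]
for run in latin_hypercube(10, bounds, seed=42):
    print(tuple(round(v, 2) for v in run))
```

Because every factor's range is covered stratum by stratum, the design explores the full operating space without assuming a model form in advance.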

Experimental Protocols for DoE in Analytical Method Validation

The following section outlines detailed methodologies for applying DoE in two common method validation scenarios: a screening experiment and a robustness study.

Protocol 1: Screening Study for an HPLC Method

This protocol aims to identify the Critical Method Parameters (CMPs) affecting the Critical Quality Attributes (CQAs) of a new High-Performance Liquid Chromatography (HPLC) method for drug assay.

- Objective: To identify which of five candidate factors significantly affect key chromatographic responses (peak area, resolution, and tailing factor).

- Factors and Levels:

  - A: Mobile Phase pH (±0.2)

  - B: Column Temperature (±5°C)

  - C: Flow Rate (±0.1 mL/min)

  - D: Gradient Time (±5%)

  - E: Detection Wavelength (±5 nm)

- DoE Design Selection: A D-optimal design is selected for its ability to screen many factors with a minimal number of experimental runs, maximizing information gain efficiently [33] [36].

- Experimental Procedure:

  - Sample Preparation: Prepare a standard solution of the drug substance and its known impurities in the required matrix.

  - DoE Run Execution: Program the HPLC sequence according to the D-optimal design matrix, using an automated liquid handler (e.g., dragonfly discovery) to prepare the solutions each run requires. Automation enhances precision and reproducibility for complex assay setups [39].

  - Data Collection: For each experimental run, record the CQAs: peak area (for accuracy), resolution (for specificity), and tailing factor (for peak shape).

  - Statistical Analysis: Fit a linear model to the data and perform analysis of variance (ANOVA) to identify the main effects, and any interactions the design can estimate, that are statistically significant (p < 0.05) for each response.
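In practice, the D-optimal design in Protocol 1 is generated with software such as Design-Expert, Minitab, or MODDE. As a hedged illustration of the underlying idea, the NumPy sketch below runs a simple Fedorov-style point exchange: it starts from a random non-singular subset of the 2⁵ candidate set (the five factors at coded ±1 levels) and swaps design points for candidates while log det(XᵀX) increases. The intercept-plus-main-effects model, the eight-run size, and all function names are assumptions for illustration; production tools may use different exchange algorithms, model terms, and constraints.

```python
import itertools

import numpy as np


def full_factorial_candidates(n_factors):
    """All 2^k combinations of coded levels -1/+1 (the candidate set)."""
    return np.array(list(itertools.product((-1.0, 1.0), repeat=n_factors)))


def main_effects_matrix(points):
    """Model matrix for an intercept + main-effects model (assumed model)."""
    points = np.asarray(points, dtype=float)
    return np.column_stack([np.ones(len(points)), points])


def d_optimal_exchange(candidates, n_runs, seed=0, max_passes=50):
    """Simple Fedorov-style point exchange maximizing log det(X^T X).

    Returns the selected points, log det(X^T X), and the D-efficiency
    det(X^T X)^(1/p) / n_runs, which equals 1.0 for an orthogonal +/-1 design.
    """
    cand = np.asarray(candidates, dtype=float)
    n_params = cand.shape[1] + 1
    if n_runs < n_params:
        raise ValueError("need at least as many runs as model parameters")
    rng = np.random.default_rng(seed)

    def log_det(rows):
        X = main_effects_matrix(cand[rows])
        sign, value = np.linalg.slogdet(X.T @ X)
        return value if sign > 0 else -np.inf

    # Random non-singular starting design drawn from distinct candidates.
    for _ in range(1000):
        chosen = list(rng.choice(len(cand), size=n_runs, replace=False))
        best = log_det(chosen)
        if np.isfinite(best):
            break
    else:
        raise ValueError("could not find a non-singular starting design")

    # Exchange passes: try every (design row, candidate) swap; keep improvements.
    for _ in range(max_passes):
        improved = False
        for i in range(n_runs):
            for c in range(len(cand)):
                trial = chosen.copy()
                trial[i] = c
                value = log_det(trial)
                if value > best + 1e-9:
                    chosen, best, improved = trial, value, True
        if not improved:
            break

    d_efficiency = np.exp(best / n_params) / n_runs
    return cand[chosen], best, d_efficiency


design, logdet, d_eff = d_optimal_exchange(full_factorial_candidates(5), n_runs=8)
print(design)
print(f"D-efficiency: {d_eff:.3f}")
```

Reporting D-efficiency alongside the design makes it easy to compare alternative run sizes before committing instrument time.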

Protocol 2: Robustness Testing of a UV-Vis Spectrophotometric Method

This protocol describes a robustness study demonstrating that a UV-Vis spectrophotometric method used for dissolution testing remains unaffected by small, deliberate variations in method parameters.

- Objective: To verify the method's robustness by evaluating the impact of minor parameter changes on the measured absorbance and calculated drug concentration.

- Factors and Levels:

  - A: Wavelength (±2 nm)

  - B: pH of Buffer (±0.05)

  - C: Sonication Time (±10%)

- DoE Design Selection: A 2³ full factorial design with 3 center points is used. This design suits robustness testing because it explores a defined, narrow factor space completely, and the center points provide an estimate of pure error and an overall test for curvature (without identifying which factor causes it), giving strong evidence of method resilience within the tested ranges [37].

- Experimental Procedure:

  - Sample Preparation: Prepare dissolution samples according to the standard procedure.

  - DoE Run Execution: Perform the UV-Vis analysis according to the 11-run factorial design (8 factorial points + 3 center points).

  - Data Collection: Record the absorbance and the calculated % drug release for each run.

  - Statistical Analysis:

    - Analyze the main effects and interactions of the factors on the response.

    - The method is considered robust if no factor shows a statistically significant effect on the response at a predetermined significance level (e.g., all p-values > 0.05 at α = 0.05) and the relative standard deviation (RSD) of the center points meets the acceptance criterion (e.g., < 2%) [37].
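The statistical analysis in Protocol 2 can be sketched without specialized software. The standard-library example below estimates all main effects and interactions of the 2³ design by contrasts, uses the three center points as the only source of pure error (2 degrees of freedom), tests curvature by comparing the factorial and center-point means, and checks the center-point %RSD. Comparing |t| with the two-sided critical value t(0.975, 2) ≈ 4.303 is equivalent to the p > 0.05 rule at α = 0.05. The % release values are hypothetical, and analysts sometimes pool negligible higher-order interactions to gain error degrees of freedom, which this sketch does not do.

```python
from itertools import combinations, product
from math import prod, sqrt
from statistics import mean, stdev

# Two-sided t critical value for alpha = 0.05 with df = 2 (three center points).
T_CRIT_DF2 = 4.303

FACTORS = ("A", "B", "C")  # A: wavelength, B: buffer pH, C: sonication time
# 2^3 runs in standard (Yates) order, coded -1/+1, with factor A changing fastest.
RUNS = [tuple(reversed(levels)) for levels in product((-1, 1), repeat=3)]


def contrast_effect(y, column):
    """Mean response at the +1 level minus mean response at the -1 level."""
    plus = [v for v, s in zip(y, column) if s > 0]
    minus = [v for v, s in zip(y, column) if s < 0]
    return mean(plus) - mean(minus)


def analyze_robustness(factorial_y, center_y, rsd_limit=2.0):
    """Effects, t-ratios, curvature check, and center-point %RSD (2^3 + 3 CP)."""
    if len(factorial_y) != len(RUNS) or len(center_y) != 3:
        raise ValueError("expected 8 factorial responses and 3 center points")
    s = stdev(center_y)                         # pure-error SD, df = 2
    se_effect = 2 * s / sqrt(len(factorial_y))  # SE of a two-level effect
    effects, t_ratios = {}, {}
    for order in range(1, 4):
        for combo in combinations(range(3), order):
            name = "".join(FACTORS[i] for i in combo)
            column = [prod(run[i] for i in combo) for run in RUNS]
            effects[name] = contrast_effect(factorial_y, column)
            t_ratios[name] = effects[name] / se_effect
    curvature = mean(factorial_y) - mean(center_y)
    t_curvature = curvature / (s * sqrt(1 / len(factorial_y) + 1 / len(center_y)))
    center_rsd = 100 * s / mean(center_y)
    robust = (
        all(abs(t) < T_CRIT_DF2 for t in t_ratios.values())
        and abs(t_curvature) < T_CRIT_DF2
        and center_rsd < rsd_limit
    )
    return {"effects": effects, "t_ratios": t_ratios, "t_curvature": t_curvature,
            "center_rsd": center_rsd, "robust": robust}


release = [98.9, 99.4, 99.1, 99.0, 99.3, 98.8, 99.2, 99.5]  # hypothetical, standard order
center = [99.1, 99.6, 98.7]                                # hypothetical center points
result = analyze_robustness(release, center)
print(result["robust"], round(result["center_rsd"], 2))
```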

Workflow Visualization: Implementing DoE for Method Validation

The following diagram illustrates the logical workflow for applying DoE in the context of analytical method development and validation, integrating QbD principles.

DoE Implementation Workflow for Method Validation

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful execution of a DoE requires precise control over experimental conditions and materials. The following table details key reagents and instruments critical for conducting the experimental protocols described in this guide.

Table: Essential Research Reagents and Instruments for DoE Studies

| Item Name | Function / Role in DoE | Critical Specifications |
|---|---|---|
| Pharmaceutical Reference Standards | Serves as the "accepted reference value" for calculating accuracy (%bias/%recovery) [40]. | USP-grade API; certified purity and concentration. |
| Chromatography Columns | The stationary phase for separation; a critical factor in HPLC/UPLC method development. | Specified chemistry (C8, C18), particle size, dimensions, and lot-to-lot consistency. |