Navigating Data Integration Challenges in High-Throughput Informatics: 2025 Strategies for Biomedical Research


Abstract

This article examines the critical data integration challenges facing researchers and drug development professionals working with high-throughput informatics infrastructures. It explores the foundational technical and organizational barriers, including data silos, quality issues, and skills gaps that undermine research efficacy. The content provides methodological frameworks for integrating heterogeneous data sources, troubleshooting common integration failures, and validating integrated datasets. With the pharmaceutical industry projected to spend $3 billion on AI by 2025 and facing 85% big data project failure rates, this guide offers evidence-based strategies to enhance data interoperability, accelerate discovery timelines, and improve translational outcomes in precision medicine.

The Data Integration Crisis in High-Throughput Research: Understanding Core Challenges and Impacts

Troubleshooting Guide: Data Integration and Management

Frequently Asked Questions (FAQs)

Q1: What is the typical success rate for digital transformation and data integration initiatives in life sciences and healthcare? A1: Digital transformation initiatives face significant challenges. Industry-wide, only 35% of digital transformation initiatives achieve their objectives, with studies reporting failure rates for digital transformation projects as high as 70% [1]. In life sciences, a gap exists between investment and organizational transformation; while 98.8% of Fortune 1000 companies invest in data initiatives, only 37.8% have successfully created data-driven organizations [1].

Q2: What are the primary data quality challenges affecting high-throughput research? A2: Data quality is the dominant barrier, with 64% of organizations citing it as their top data integrity challenge [1]. Furthermore, 77% of organizations rate their data quality as average or worse, a figure that has deteriorated from previous years [1]. Poor data quality has a massive economic impact, with historical estimates suggesting poor data quality costs US businesses $3.1 trillion annually [1].

Q3: How do data integration failures directly impact research and development productivity? A3: Data integration failures directly undermine R&D efficiency. Declining R&D productivity is a significant industry concern, with 56% of biopharma executives and 50% of medtech executives reporting that their organizations need to rethink R&D and product development strategies [2]. The failure rates for large-scale data projects are particularly high, with industry research showing 85% of big data projects fail [1].

Q4: What is the economic impact of data silos and poor integration in a research organization? A4: Data silos and poor integration create substantial, measurable costs. Research indicates that data silos cost organizations $7.8 million annually in lost productivity [1]. Employees waste an average of 12 hours weekly searching for information across disconnected systems [1]. The problem is pervasive; organizations average 897 applications, but only 29% are integrated [1].

Q5: What percentage of AI and GenAI initiatives face scaling challenges, and why? A5: The majority of AI initiatives struggle to transition from pilot to production. Currently, 74% of companies struggle to achieve and scale AI value despite widespread adoption [1]. Integration issues are the primary barrier, with 95% of IT leaders reporting integration issues preventing AI implementation [1]. For GenAI specifically, 60% of companies with $1B+ revenue are 1-2 years from implementing their first GenAI solutions [1].

Troubleshooting Common Data Integration Failures

Problem: Inaccessible Data and Poor Integration Between Systems

- Symptoms: Inability to locate datasets, manual data curation delays, inconsistent results from different systems.

- Diagnosis: Conduct a comprehensive audit of data sources and integration points. The organization likely has a high ratio of unintegrated applications.

- Solution: Implement a centralized data storage system and establish strong data governance policies that define how data should be stored, managed, and accessed [3].

Problem: Poor Quality Data Undermining Analysis

- Symptoms: Missing values, inconsistent formatting, errors in analytical outputs, irreproducible results.

- Diagnosis: Profiling of source data reveals inconsistencies in formats, standards, and entry errors from disparate systems.

- Solution: Implement data quality management systems for automated cleansing and standardization. Proactively validate data for errors immediately after collection, before integration into analytical systems [3] [4].

Problem: Security Breaches and Compliance Failures

- Symptoms: Unauthorized data access, regulatory penalties, inability to audit data access trails.

- Diagnosis: Security assessment reveals lack of encryption, inadequate access controls, or non-compliance with regulations like HIPAA or GDPR.

- Solution: Choose data integration platforms with end-to-end security, including encryption (in transit and at rest), data masking for PII, and role-based access controls. Ensure compliance with relevant security certifications [4] [5]. In healthcare, enforcing multi-factor authentication (MFA) is a critical, foundational control [6].
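As a rough illustration of how data masking and role-based access control can work together, the sketch below pseudonymizes PII fields with a keyed hash and returns a different view of a record depending on the caller's role. The role names, the field names (`patient_name`, `mrn`), and the demo key are assumptions made for this example; a production system would take them from its own policy and key management.

```python
import hashlib
import hmac

# Hypothetical role-to-permission map; a real deployment would load this from policy.
ROLE_PERMISSIONS = {
    "data_steward": {"read_identified", "read_masked"},
    "analyst": {"read_masked"},
}

# Assumed names of the fields that carry PII in this example.
PII_FIELDS = {"patient_name", "mrn"}


def mask_value(value: str, secret: bytes) -> str:
    """Replace a PII value with a keyed, non-reversible pseudonym."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def read_record(record: dict, role: str, secret: bytes) -> dict:
    """Return the record as the given role is allowed to see it."""
    permissions = ROLE_PERMISSIONS.get(role, set())
    if "read_identified" in permissions:
        return dict(record)
    if "read_masked" in permissions:
        return {
            key: mask_value(str(val), secret) if key in PII_FIELDS else val
            for key, val in record.items()
        }
    raise PermissionError(f"role {role!r} may not read this dataset")


record = {"patient_name": "Jane Doe", "mrn": "12345", "hba1c": 6.1}
secret = b"demo-only-key"  # assumption: real keys come from a secrets manager
masked = read_record(record, "analyst", secret)
```

Because the pseudonym is derived from a keyed hash, the same patient maps to the same token across datasets, which keeps joins possible without exposing the identifier. This is pseudonymization rather than anonymization, so the key itself must be protected.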

Problem: Failure to Handle Large or Diverse Data Volumes

- Symptoms: Slow processing times, system crashes during large-scale analysis, inability to process real-time data streams.

- Diagnosis: Existing infrastructure is overwhelmed by data volume, velocity, or variety (e.g., genomic sequences, imaging data, sensor outputs).

- Solution: Adopt modern data management platforms with distributed storage and parallel processing. For data ingestion, use incremental loading strategies and streaming processing techniques for real-time data [3] [4].
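The incremental loading strategy mentioned in the solution above can be sketched with a high-water mark: each run copies only rows whose modification timestamp is newer than the last value seen. The table name `readings`, its columns, and the use of in-memory SQLite databases are assumptions chosen to keep the example self-contained.

```python
import sqlite3


def incremental_load(source, target, watermark):
    """Copy rows modified after `watermark`; return the new watermark."""
    rows = source.execute(
        "SELECT id, value, updated_at FROM readings "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # Upsert so that a changed source row overwrites its earlier copy.
    target.executemany(
        "INSERT OR REPLACE INTO readings (id, value, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    target.commit()
    return rows[-1][2] if rows else watermark


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute(
        "CREATE TABLE readings (id INTEGER PRIMARY KEY, value REAL, updated_at TEXT)"
    )

source.executemany(
    "INSERT INTO readings VALUES (?, ?, ?)",
    [(1, 0.5, "2025-01-01T00:00:00"), (2, 0.7, "2025-01-02T00:00:00")],
)
source.commit()
watermark = incremental_load(source, target, "")  # first run loads everything

# Later, one row changes and one row is added at the source.
source.execute(
    "UPDATE readings SET value = 0.9, updated_at = '2025-01-03T00:00:00' WHERE id = 1"
)
source.execute("INSERT INTO readings VALUES (3, 1.1, '2025-01-04T00:00:00')")
source.commit()
watermark = incremental_load(source, target, watermark)  # second run moves two rows
```

A timestamp watermark is simple but does not see deleted rows, and rows sharing the exact watermark timestamp can be missed with a strict comparison; log-based change capture avoids both limitations at the cost of more setup.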

Failure Rates and Economic Impact in Informatics

Table 1: Digital Transformation and Data Project Failure Metrics

| Metric | Value | Source/Context |

|---|---|---|

| Digital Transformation Success Rate | 35% | Based on BCG analysis of 850+ companies [1] |

| Big Data Project Failure Rate | 85% | Gartner analysis of large-scale data projects [1] |

| System Integration Project Failure Rate | 84% | Integration research across industries [1] |

| Organizations Citing Data Quality as Top Challenge | 64% | Precisely's 2025 Data Integrity Trends Report [1] |

| Data Silos Annual Cost (Lost Productivity) | $7.8 million | Salesforce research on operational efficiency impact [1] |

| Estimated Annual Cost of Poor Data Quality (US) | $3.1 trillion | Historical IBM research on business impact [1] |

Table 2: Industry-Specific Digitalization and Impact Metrics

| Sector/Area | Metric | Value | Source/Context |

|---|---|---|---|

| Financial Services | Digitalization Score | 4.5 (Highest) | Industry digitalization analysis [1] |

| Government | Digitalization Score | 2.5 (Lowest) | Public sector analysis [1] |

| Healthcare Data Breach | Average Cost (US, 2025) | $10.22 million | IBM Report; 9% year-over-year increase [6] |

| Healthcare Data Breach | Average Lifecycle | 279 days | Time to identify and contain an incident [6] |

| AI Implementation | Potential Cost Savings (Medtech) | Up to 12% of total revenue | Within 2-3 years (Deloitte analysis) [2] |

Experimental Protocols for Assessing Data Integration Health

Protocol: Data Integrity and Quality Assessment

Objective: To quantitatively measure data quality across source systems and identify specific integrity issues (completeness, accuracy, consistency, validity).

Materials: Source databases, data profiling tool (e.g., OpenRefine, custom Python/Pandas scripts), predefined data quality rules.

Procedure:

- Source System Inventory: Catalog all data sources, their owners, update frequencies, and primary keys.

- Data Profiling: Execute profiling scripts against a representative sample from each source to calculate:

  - Completeness: Percentage of non-null values for critical fields.

  - Uniqueness: Count of duplicate entries based on primary key or business key.

  - Consistency: Check for adherence to expected formats (e.g., date YYYY-MM-DD, gene nomenclature).

  - Validity: Verify that values fall within expected ranges (e.g., pH between 0-14).

- Cross-System Comparison: Identify records that exist in multiple systems and check for consistency of values (e.g., patient demographic data in EHR vs. clinical trial database).

- Reporting: Generate a data quality scorecard highlighting metrics that fall below acceptable thresholds (e.g., <95% completeness, <99% uniqueness).
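The profiling step of this protocol can be sketched in plain Python as follows. The record layout and the field names `sample_id`, `collected_on`, and `ph` are assumptions for illustration; the four checks mirror the completeness, uniqueness, consistency, and validity metrics listed above.

```python
import re
from collections import Counter

ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")  # expected YYYY-MM-DD format


def profile(records, key_field, critical_fields):
    """Compute the four protocol metrics for a list of dict records."""
    if not records:
        raise ValueError("cannot profile an empty sample")
    total = len(records)
    # Completeness: share of non-null, non-empty values per critical field.
    completeness = {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / total
        for field in critical_fields
    }
    # Uniqueness: keys that occur more than once.
    key_counts = Counter(r[key_field] for r in records)
    duplicate_keys = sorted(k for k, n in key_counts.items() if n > 1)
    # Consistency: dates that are present but not in the expected format.
    invalid_dates = [
        r[key_field] for r in records
        if r.get("collected_on") and not ISO_DATE.match(r["collected_on"])
    ]
    # Validity: pH values outside the 0-14 range.
    out_of_range_ph = [
        r[key_field] for r in records
        if r.get("ph") is not None and not 0 <= r["ph"] <= 14
    ]
    return {
        "completeness": completeness,
        "duplicate_keys": duplicate_keys,
        "invalid_dates": invalid_dates,
        "out_of_range_ph": out_of_range_ph,
    }


records = [
    {"sample_id": "S1", "collected_on": "2025-01-15", "ph": 7.2},
    {"sample_id": "S2", "collected_on": "15/01/2025", "ph": 6.8},
    {"sample_id": "S2", "collected_on": "2025-01-16", "ph": 15.3},
    {"sample_id": "S3", "collected_on": None, "ph": None},
]
report = profile(records, "sample_id", ["collected_on", "ph"])
```

The resulting dictionary can feed the scorecard directly, for example by flagging any field whose completeness falls below the agreed threshold.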

Protocol: Data Integration Pipeline Performance Benchmark

Objective: To evaluate the performance and reliability of a data integration pipeline (e.g., ETL/ELT process) ingesting high-throughput experimental data.

Materials: Test dataset, target data warehouse (e.g., Snowflake, BigQuery), data integration platform (e.g., Airbyte, Workato, custom), monitoring dashboard.

Procedure:

- Baseline Establishment: Run the pipeline with a sample dataset of known size (e.g., 10 GB of genomic variant call files - VCFs). Record the time from ingestion to availability in the target system.

- Volume Scaling Test: Incrementally increase the data volume (e.g., 50 GB, 100 GB) and measure the ingestion time, tracking linearity or performance degradation.

- Latency Test: For near real-time sources, measure the latency between a data creation event at the source and its availability for query in the target.

- Failure Recovery Test: Intentionally introduce a source system failure (e.g., stop a database service) and monitor the pipeline's error handling. Restore the source and measure the time to recover and process backlogged data.

- Data Fidelity Check: Perform record counts and checksum comparisons between source and target after each run to ensure no data loss or corruption.
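One possible way to implement the data fidelity check is to compare a row count and an order-independent checksum computed on both sides. The sketch below assumes rows are available as tuples of simple values; the variant-like sample rows are illustrative only.

```python
import hashlib

MODULUS = 2 ** 256


def table_fingerprint(rows):
    """Return (row_count, checksum); the checksum ignores row order."""
    count = 0
    combined = 0
    for row in rows:
        # Canonical text form of the row; note that None and "" are treated alike.
        canonical = "\x1f".join("" if v is None else str(v) for v in row)
        digest = hashlib.sha256(canonical.encode("utf-8")).digest()
        # Summing per-row digests makes the result independent of row order.
        combined = (combined + int.from_bytes(digest, "big")) % MODULUS
        count += 1
    return count, f"{combined:064x}"


source_rows = [("chr1", 12345, "A", "G"), ("chr2", 67890, "C", "T")]
target_rows = [("chr2", 67890, "C", "T"), ("chr1", 12345, "A", "G")]  # same data, reordered
corrupted_rows = [("chr1", 12345, "A", "G"), ("chr2", 67890, "C", "A")]

matches = table_fingerprint(source_rows) == table_fingerprint(target_rows)
detects_change = table_fingerprint(source_rows) != table_fingerprint(corrupted_rows)
```

Running the same function against the source extract and the warehouse table after each benchmark run gives a quick pass/fail signal before any deeper reconciliation.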

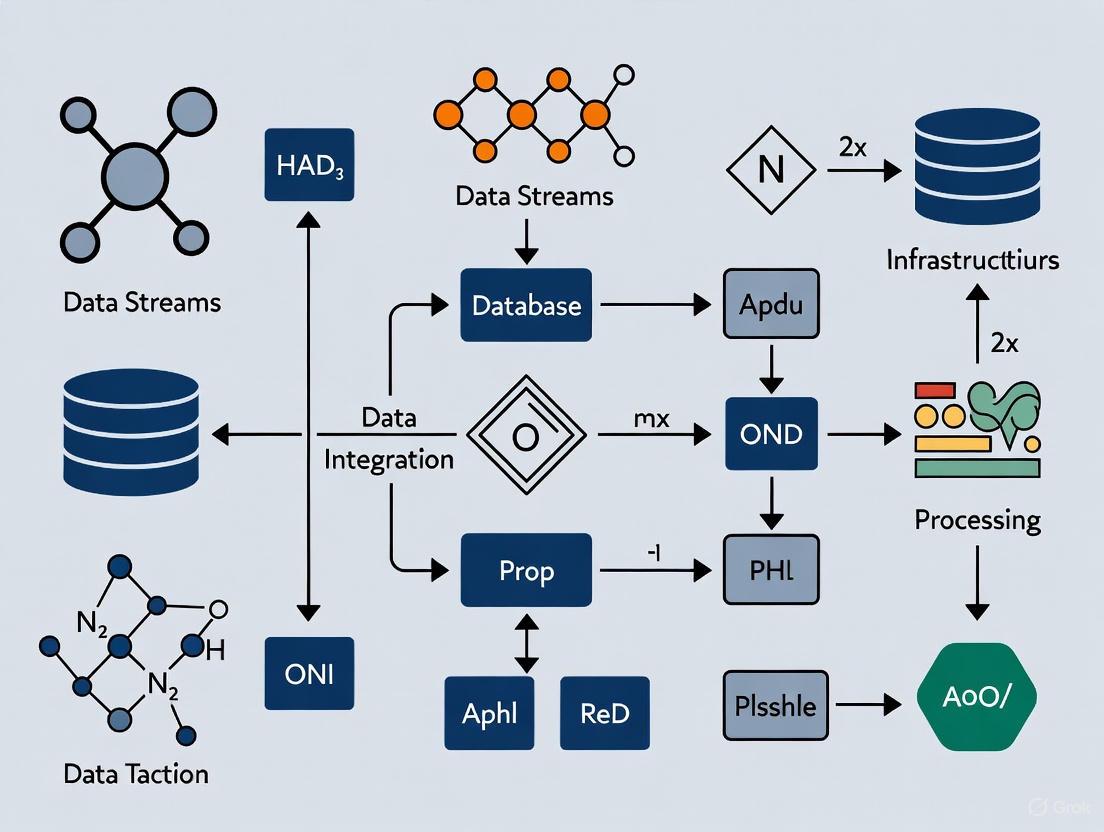

Visualizations

Data Integration Challenge Pathway

Data Integration Health Assessment Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Data Integration in Biomedical Informatics

| Tool / Solution Category | Function / Purpose | Example Use Case |

|---|---|---|

| ETL/ELT Platforms (e.g., Airbyte, Talend) | Extract, Transform, and Load data from disparate sources into a centralized repository. Automates data pipeline creation. | Integrating clinical data from EHRs, genomic data from sequencers, and patient-reported outcomes from apps into a unified research data warehouse [4]. |

| Data Quality Management Systems | Automate data profiling, cleansing, standardization, and validation. Identify errors, duplicates, and inconsistencies. | Ensuring genomic variant calls from different sequencing centers use consistent nomenclature and quality scores before combined analysis [3] [5]. |

| Data Governance & Stewardship Frameworks | Establish policies, standards, and roles for data management. Create a common data language and ensure compliance. | Defining and enforcing standards for patient identifier formats and adverse event reporting across multiple clinical trial sites [3]. |

| Change Data Capture (CDC) Tools | Enable real-time or near-real-time data integration by capturing and replicating data changes from source systems. | Streaming real-time sensor data from ICU monitors into an analytics dashboard for instant clinical decision support [4]. |

| Cloud Data Warehouses (e.g., Snowflake, BigQuery) | Provide scalable, centralized storage for structured and semi-structured data. Enable high-performance analytics on integrated datasets. | Storing and analyzing petabytes of integrated genomic, transcriptomic, and proteomic data for biomarker discovery [4]. |


Quantitative Evidence: The Scale of the Data Quality Challenge

The following tables summarize key statistics and financial impacts of poor data quality, underscoring its role as the primary barrier to research and operational efficiency in high-throughput environments.

Table 1: Prevalence and Impact of Poor Data Quality [7]

| Statistic | Value | Context / Consequence |

|---|---|---|

| Primary Data Integrity Challenge | 64% of organizations | Identified data quality as the top obstacle to achieving robust data integrity. |

| Data Distrust | 67% of respondents | Do not fully trust their data for decision-making. |

| Self-Assessed "Average or Worse" Data | 77% of organizations | An eleven percentage point drop from the previous year. |

| Top Data Integrity Priority | 60% of organizations | Have made data quality their top data integrity priority. |

Table 2: Financial and Operational Consequences [8] [9]

| Area of Impact | Consequence | Quantitative / Business Effect |

|---|---|---|

| Return on Investment (ROI) | 295% average ROI | Organizations with mature data implementations report an average 295% ROI over 3 years; top performers achieve 354%. |

| AI Initiative Failure | Primary adoption barrier | 95% of professionals cite data integration and quality as the primary barrier to AI adoption. |

| Data Governance Efficacy | High failure rate | 80% of data governance initiatives are predicted to fail. |

| Operational Inefficiency | Missed revenue, compliance issues | Inaccuracies in core data disrupt targeting strategies, cause compliance issues, and create blind spots for opportunities. |

Table 3: Industry-Specific Data Quality Challenges [9]

| Industry | Data & Analytics Investment / Market Size | Key Data Quality Drivers |

|---|---|---|

| Financial Services | $31.3 billion in AI/analytics (2024) | Real-time fraud detection and risk assessment. |

| Healthcare | $167 billion analytics market by 2030 | Integration demands for patient records, imaging, and IoT medical devices; the sector generates 30% of the world's data. |

| Manufacturing | 29% use AI/ML at facility level | Predictive maintenance and integration of IoT sensor data with production systems. |

| Retail | 25.8% higher conversion rates | Real-time inventory management and customer journey analytics for omnichannel integration. |

Experimental Protocol: Assessing Data Quality in Research Datasets

This protocol provides a standardized methodology for diagnosing data quality issues within high-throughput research data pipelines.

Data Quality Assessment Workflow

Materials and Reagents

Table 4: Research Reagent Solutions for Data Quality Assessment

| Item | Function / Description | Example Tools / Standards |

|---|---|---|

| Data Profiling Tool | Automates the analysis of raw datasets to uncover patterns, anomalies, and statistics. | Open-source libraries (e.g., Great Expectations), custom SQL scripts. |

| Validation Framework | Provides a set of rules and constraints to check data for completeness, validity, and format conformity. | JSON Schema, XML Schema (XSD), custom business rule validators. |

| Deduplication Engine | Identifies and merges duplicate records using fuzzy matching algorithms to ensure uniqueness. | FuzzyWuzzy (Python), Dedupe.io, database-specific functions. |

| Data Cleansing Library | Corrects identified errors through standardization, formatting, and outlier handling. | Pandas (Python), OpenRefine, dbt tests. |

| Metadata Repository | Stores information about data lineage, definitions, and quality metrics for reproducibility. | Data catalogs (e.g., Amundsen, DataHub), structured documentation. |

Step-by-Step Procedure

- Data Profiling: Execute the profiling tool against the target dataset. Key metrics to capture include:

  - Completeness: Percentage of non-null values for each critical field.

  - Uniqueness: Count of distinct values and detection of exact and fuzzy duplicates in key columns.

  - Validity: Proportion of records conforming to predefined formats (e.g., date formats, numeric ranges, allowed string patterns).

- Metric Calculation & Scoring: Synthesize profiling results into a quantitative Data Quality Score. A sample scoring algorithm is:

Data Quality Score = (Completeness_Score * 0.4) + (Uniqueness_Score * 0.3) + (Validity_Score * 0.3)

Set a project-specific threshold (e.g., 95%) for proceeding to analysis.

- Data Cleansing (Conditional): If the score is below the threshold, initiate cleansing protocols:

  - Imputation: Apply rules for handling missing data (e.g., mean imputation, forward-fill, or flagging).

  - Deduplication: Run the deduplication engine, manually reviewing high-similarity matches before merging.

  - Standardization: Transform data into consistent formats (e.g., standardizing date/time to ISO 8601, normalizing textual descriptors).

- Output: The final output is a quality-assessed dataset, accompanied by a report detailing the quality score, actions taken, and any remaining known data issues for the downstream research team.
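The sample scoring algorithm in the procedure above can be written as a small function. The weights and the 95% threshold come from the text; the input scores are made-up values on a 0-100 scale used only to show the arithmetic.

```python
# Weights taken from the sample scoring algorithm in the procedure.
WEIGHTS = {"completeness": 0.4, "uniqueness": 0.3, "validity": 0.3}


def data_quality_score(scores, weights=WEIGHTS):
    """Weighted sum of the per-dimension scores (each on a 0-100 scale)."""
    return sum(scores[name] * weight for name, weight in weights.items())


def passes_threshold(scores, threshold=95.0):
    """True when the dataset may proceed to analysis without cleansing."""
    return data_quality_score(scores) >= threshold


# Illustrative profiling results, not measured data.
good = {"completeness": 98.0, "uniqueness": 99.5, "validity": 90.0}
poor = {"completeness": 90.0, "uniqueness": 99.0, "validity": 95.0}

good_score = data_quality_score(good)  # 39.2 + 29.85 + 27.0
poor_score = data_quality_score(poor)  # 36.0 + 29.7 + 28.5
```

With these inputs the first dataset scores about 96.05 and proceeds to analysis, while the second scores about 94.2 and is routed to the conditional cleansing step.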

Data Integrity Framework for High-Throughput Infrastructures

A proactive, integrated framework is essential for maintaining data integrity. The following diagram illustrates the core components and their logical relationships.

Troubleshooting Guide: Common Data Quality Failures

Problem: Proliferation of Duplicate Records

- Symptoms: Inflated record counts, skewed statistical averages, and inaccurate patient or compound counts.

- Solution: Implement fuzzy matching software that uses algorithms (e.g., Levenshtein distance, phonetic matching) to detect non-exact duplicates. Establish a canonical ("golden") record resolution strategy to merge duplicates [10] [11].
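A minimal sketch of Levenshtein-based duplicate detection is shown below. The 0.85 similarity threshold and the compound names are assumptions for illustration; the pairwise comparison is quadratic, so larger datasets usually need blocking or an indexing step first.

```python
def levenshtein(a, b):
    """Edit distance between two strings (insertions, deletions, substitutions)."""
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(
                min(previous[j] + 1, current[j - 1] + 1, previous[j - 1] + cost)
            )
        previous = current
    return previous[-1]


def similarity(a, b):
    """Normalized similarity in [0, 1] after trimming and lower-casing."""
    a, b = a.strip().lower(), b.strip().lower()
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - levenshtein(a, b) / longest


def candidate_duplicates(names, threshold=0.85):
    """Return pairs whose similarity meets the threshold, for manual review."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if similarity(names[i], names[j]) >= threshold:
                pairs.append((names[i], names[j]))
    return pairs


names = ["Imatinib mesylate", "imatinib mesilate", "Ibuprofen"]
pairs = candidate_duplicates(names)
```

The function only proposes candidate pairs; deciding which record becomes the golden record remains a separate, policy-driven step.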

Problem: Missing or Incomplete Data Fields

- Symptoms: Critical experimental parameters are null, reducing statistical power and introducing bias.

- Solution: Implement real-time validation at the point of data entry or ingestion to enforce mandatory fields. For existing gaps, use data auditing tools to identify patterns in missingness and apply appropriate imputation techniques based on the data context [10] [12].

Problem: Inconsistent Data Formats Across Sources

- Symptoms: Failure to merge datasets from different labs or instruments; parsing errors in automated pipelines.

- Solution: Enforce standardized formats through a canonical schema. Use integration platforms or ETL/ELT pipelines to automatically transform and map disparate data formats (e.g., dates, units of measure) into a unified model [11] [12].
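Mapping source records into a canonical schema might look like the sketch below, which normalizes dates to ISO 8601 and masses to milligrams. The accepted date formats, the unit table, and the field names are assumptions; ambiguous formats such as day-first versus month-first dates should be declared per source rather than guessed.

```python
from datetime import datetime

# Assumed formats seen across sources; day-first is assumed for slash dates.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")
# Conversion factors into the canonical unit (milligrams).
TO_MILLIGRAMS = {"mg": 1.0, "g": 1000.0, "ug": 0.001}


def to_iso_date(text):
    """Parse a date in any known source format and return YYYY-MM-DD."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")


def to_canonical(record):
    """Map one source record onto the canonical schema."""
    factor = TO_MILLIGRAMS[record["unit"].lower()]
    return {
        "sample_id": record["sample_id"],
        "collected_on": to_iso_date(record["collected_on"]),
        "mass_mg": record["mass"] * factor,
    }


lab_a = {"sample_id": "A1", "collected_on": "15/01/2025", "mass": 2.5, "unit": "g"}
lab_b = {"sample_id": "B7", "collected_on": "20250116", "mass": 250, "unit": "ug"}
unified = [to_canonical(lab_a), to_canonical(lab_b)]
```

Records that fail to parse raise an error instead of passing through silently, which keeps malformed values out of the unified model.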

Problem: Inability to Process Data in Real-Time

- Symptoms: Delays in experimental feedback loops; dashboards displaying outdated information.

- Solution: Shift from batch-oriented ETL to modern data pipeline tools that support streaming data and change data capture (CDC). This ensures systems are updated instantly to support real-time decision-making [9] [11].

Frequently Asked Questions (FAQs)

Q1: Why is data quality consistently ranked the top data integrity challenge? Data quality is foundational. Without accuracy, completeness, and consistency, every downstream process—from basic analytics to advanced AI models—produces unreliable and potentially harmful outputs. Recent surveys confirm that 64% of organizations see it as their primary obstacle, as poor quality directly undermines trust, with 67% of professionals not fully trusting their data for critical decisions [7].

Q2: What is the single most effective step to improve data quality in a research infrastructure? Implementing continuous, automated monitoring embedded directly into data pipelines is highly effective. This proactive approach, often part of a DataOps methodology, allows for the immediate detection and remediation of issues like drift, duplicates, and invalid entries, preventing small errors from corrupting large-scale analyses [10] [7].

Q3: How does poor data quality specifically impact AI-driven drug discovery? AI models are entirely dependent on their training data. Poor quality data introduces noise and biases, leading to inaccurate predictive models for compound-target interactions or toxicity. This can misdirect entire research programs, wasting significant resources. It is noted that 95% of professionals cite data integration and quality as the primary barrier to AI adoption [9] [11].

Q4: We have a data governance policy, but quality is still poor. Why? Policy alone is insufficient without enforcement and integration. Successful governance must include dedicated data stewards, clear ownership of datasets, and automated tools that actively enforce quality rules within operational workflows. Reports indicate a high failure rate for governance initiatives that lack these integrated enforcement mechanisms [9] [7].

Q5: What are the key metrics to prove the ROI of data quality investment? Track metrics that link data quality to operational and financial outcomes: reduction in time spent cleansing data manually, decrease in experiment re-runs due to data errors, improved accuracy of predictive models, and the acceleration of research timelines. Mature organizations report an average 295% ROI from robust data implementations, demonstrating significant financial value [9].

Troubleshooting Guides

Guide 1: Resolving Semantic Inconsistencies in Integrated Knowledge Graphs

Problem: After integrating multiple proteomics datasets, queries return conflicting protein identifiers and pathway information, making results unreliable.

Explanation: Semantic inconsistencies occur when data from different sources use conflicting definitions, ontologies, or relationship rules. In knowledge graphs, this manifests as entities with multiple conflicting types or properties that violate defined constraints [13].

Diagnosis:

- Run SHACL validation to identify constraint violations [13]

- Check for entities with multiple disjoint types (e.g., a single resource typed as both :Person and :Airport) [13]

- Verify ontology alignment across integrated sources

- Identify missing or contradictory relationship definitions

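Resolution:

Method 1: Automated Repair

- Execute SPARQL updates that resolve the violations listed in the validation report, then re-validate the graph [13]

Method 2: Consistent Query Answering

- Rewrite queries to filter out inconsistent results without modifying source data [13]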

Prevention:

- Implement SHACL shapes validation during ETL processes [13]

- Establish canonical semantic models before integration

- Use OWL reasoning to detect ontological inconsistencies [13]
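To make the disjoint-type diagnosis and the consistent query answering method in this guide more concrete, the sketch below works on plain Python tuples rather than a real triplestore. It is a stand-in for illustration only and does not use SHACL or SPARQL; the triples and the disjointness axiom are assumed example data.

```python
RDF_TYPE = "rdf:type"
# Assumed disjointness axiom: nothing can be both a :Person and an :Airport.
DISJOINT = [frozenset({":Person", ":Airport"})]

triples = [
    (":alice", RDF_TYPE, ":Person"),
    (":jfk", RDF_TYPE, ":Airport"),
    (":jfk", RDF_TYPE, ":Person"),  # conflicting assertion from a second source
    (":alice", ":worksAt", ":lab1"),
]


def types_by_entity(triples):
    """Collect the asserted types of every subject."""
    types = {}
    for s, p, o in triples:
        if p == RDF_TYPE:
            types.setdefault(s, set()).add(o)
    return types


def inconsistent_entities(triples, disjoint_pairs):
    """Entities typed with both members of any disjoint pair."""
    return {
        entity
        for entity, types in types_by_entity(triples).items()
        if any(pair <= types for pair in disjoint_pairs)
    }


def consistent_instances(triples, cls, disjoint_pairs):
    """Answer 'instances of cls' while skipping entities with conflicting types."""
    bad = inconsistent_entities(triples, disjoint_pairs)
    return sorted(
        s for s, p, o in triples if p == RDF_TYPE and o == cls and s not in bad
    )


conflicts = inconsistent_entities(triples, DISJOINT)
people = consistent_instances(triples, ":Person", DISJOINT)
```

The source triples are never modified: the conflicting entity is simply excluded at query time, which is the essential difference from the repair-based method.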

Guide 2: Handling Mass Spectrometry Data Format Incompatibility

Problem: Unable to process or share large mass spectrometry datasets due to format limitations, slowing collaborative research.

Explanation: Current open formats (mzML) suffer from large file sizes and slow access, while vendor formats lack interoperability and long-term accessibility [14].

Diagnosis:

- Identify format type and version of source files

- Check for missing metadata required for analysis

- Measure processing performance bottlenecks

- Verify instrument data compatibility with analysis tools

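Resolution:

Method 1: Format Migration to mzPeak

- Convert existing mzML and vendor-format files with mzPeak conversion tools, preserving metadata [14]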

Method 2: Implement Hybrid Storage

- Use efficient binary storage for numerical data [14]

- Maintain human-readable metadata for compliance [14]

- Enable random access to spectra and chromatograms [14]
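The hybrid storage idea can be illustrated generically: numerical peak data goes into a compact binary blob, while a human-readable JSON index records where each spectrum starts. This sketch is not the mzPeak layout, and the spectrum fields are assumptions; it only shows why an offset index allows random access without parsing the whole file.

```python
import io
import json
import struct


def write_spectra(spectra, blob):
    """Append peaks as little-endian float64 pairs; return a JSON-ready index."""
    index = []
    for spec in spectra:
        values = [x for peak in spec["peaks"] for x in peak]
        offset = blob.tell()
        blob.write(struct.pack(f"<{len(values)}d", *values))
        index.append(
            {
                "id": spec["id"],
                "ms_level": spec["ms_level"],
                "offset": offset,
                "n_peaks": len(spec["peaks"]),
            }
        )
    return index


def read_spectrum(blob, entry):
    """Seek straight to one spectrum and decode only its peaks."""
    blob.seek(entry["offset"])
    count = entry["n_peaks"] * 2
    flat = struct.unpack(f"<{count}d", blob.read(count * 8))
    return list(zip(flat[0::2], flat[1::2]))


spectra = [
    {"id": "scan=1", "ms_level": 1, "peaks": [(100.1, 2500.0), (200.2, 1300.0)]},
    {"id": "scan=2", "ms_level": 2, "peaks": [(150.5, 900.0)]},
]
blob = io.BytesIO()  # stands in for a binary file on disk
metadata = json.dumps(write_spectra(spectra, blob), indent=2)  # human-readable part

second = json.loads(metadata)[1]
peaks = read_spectrum(blob, second)  # reads scan=2 without touching scan=1
```

In an XML-based file the reader would have to parse sequentially to reach the second scan; here a single seek is enough.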

Prevention:

- Adopt emerging mzPeak format for new experiments [14]

- Implement PSI-MS controlled vocabulary consistently [14]

- Archive both raw and processed data in standard formats

Guide 3: Integrating Legacy Chromatography Data Systems

Problem: Historical chromatography data trapped in proprietary or legacy systems cannot be used with modern analytics pipelines.

Explanation: Legacy CDS often use closed formats, outdated interfaces, and lack API connectivity, creating data silos that hinder high-throughput analysis [15] [16].

Diagnosis:

- Inventory legacy systems and data formats

- Identify vendor-specific data access requirements

- Check for missing metadata and audit trails

- Assess data volume and migration complexity

Resolution:

Method 1: Vendor-Neutral CDS Implementation

- Deploy interoperable CDS (e.g., OpenLAB, Chromeleon) [16]

- Use standardized drivers for multi-vendor instrument control [16]

- Implement central data storage with compliance features [16]

Method 2: Data Virtualization

- Create unified data access layer without physical migration

- Use abstraction to present legacy data as modern formats

- Maintain real-time access to original systems

Prevention:

- Select vendor-neutral, interoperable CDS for new instruments [16]

- Implement regular data export to standard formats

- Establish data governance policies for long-term accessibility

Frequently Asked Questions

Q1: How can we ensure data quality when integrating heterogeneous proteomics formats?

Use the ProteomeXchange consortium framework with standardized submission requirements. Implement automated validation using mzML for raw spectra and mzIdentML/mzTab for identification results. Leverage PRIDE database for archival storage and cross-referencing with UniProt for protein annotation [17].

Q2: What strategies exist for real-time data integration in high-throughput environments?

Adopt Change Data Capture (CDC) patterns to identify and propagate data changes instantly. Implement data streaming architectures using platforms like Apache Kafka for continuous data flows. Use event-driven architectures (adopted by 72% of organizations) for real-time responsiveness [18] [9].

Q3: How do we maintain regulatory compliance (GDPR, HIPAA, 21 CFR Part 11) during integration?

Embed compliance protocols directly into data integration workflows. Implement encryption, role-based access controls, and comprehensive audit trails. Use active metadata to automate compliance reporting and maintain data lineage [11] [19]. For CDS, ensure systems provide data integrity features meeting 21 CFR Part 11 [15].

Q4: What are the practical solutions for semantic mismatches across omics databases?

Establish canonical schemas and mapping rules using standardized ontologies. Implement semantic validation with SHACL constraints to identify inconsistencies. Use knowledge graphs with OWL reasoning to infer relationships while maintaining consistency checks through SHACL validation [13].

Q5: How can we overcome performance bottlenecks with large-scale MS data?

Migrate from XML-based formats to hybrid binary formats like mzPeak. Implement efficient compression and encoding schemes. Use cloud-native architectures with scalable storage and processing. Enable random access to spectra and chromatograms rather than sequential file parsing [14].

Table 1: Data Integration Market Trends & Performance Metrics

| Category | 2024 Value | 2030 Projection | CAGR | Key Findings |

|---|---|---|---|---|

| Data Integration Market | $15.18B [9] | $30.27B [9] | 12.1% [9] | Driven by cloud adoption and real-time needs |

| Streaming Analytics | $23.4B (2023) [9] | $128.4B [9] | 28.3% [9] | Outpaces traditional integration growth |

| Healthcare Analytics | $43.1B (2023) [9] | $167.0B [9] | 21.1% [9] | 30% of world's data generated in healthcare |

| Data Pipeline Tools | - | $48.33B [9] | 26.8% [9] | Outperforms traditional ETL (17.1% CAGR) |

| iPaaS Market | $12.87B [9] | $78.28B [9] | 25.9% [9] | 2-4x faster than overall IT spending growth |

Table 2: Implementation Challenges & Success Factors

| Challenge Area | Success Rate | Primary Barrier | Recommended Solution |

|---|---|---|---|

| Data Governance Initiatives | 20% success [9] | Organizational silos | Active metadata & automated quality |

| AI Adoption | 42% active use [9] | Integration complexity (95% cite) [9] | AI-ready data pipelines |

| Event-Driven Architecture | 13% maturity [9] | Implementation complexity | Phased adoption with CDC |

| Hybrid Cloud Integration | 61% SMB workloads [9] | Multi-cloud complexity | Cloud-native integration tools |

| Talent Gap | 87% face shortages [9] | Specialized skills | Low-code platforms & training |

Experimental Protocols

Protocol 1: SHACL-Based Semantic Validation for Integrated Knowledge Graphs

Purpose: Detect and resolve semantic inconsistencies in integrated biomedical data.

Materials:

- RDF triplestore with SHACL support (e.g., RDFox) [13]

- Domain ontologies (e.g., DBpedia OWL, protein ontologies)

- SHACL shapes defining constraints

- SPARQL endpoint for query and update

Methodology:

- Knowledge Graph Construction

  - Convert source data to RDF using domain ontologies

  - Apply OWL reasoning to infer additional relationships [13]

  - Store integrated graph in SHACL-enabled triplestore

- Constraint Definition

  - Define SHACL shapes for domain constraints

  - Specify cardinality restrictions and value constraints

  - Define disjoint class relationships

- Validation Execution

  - Run SHACL validation against the integrated graph to produce a validation report [13]

- Repair Implementation

  - Analyze validation report for violations

  - Execute SPARQL updates to resolve inconsistencies [13]

  - Verify repair success with re-validation

Validation: Execute test queries to verify consistent results across previously conflicting domains.
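The validate, repair, and re-validate cycle of this protocol can be sketched without a triplestore. The code below is a plain-Python stand-in, not SHACL or SPARQL: it checks one assumed cardinality constraint (at most one `:organism` per protein) and repairs violations with an assumed source-priority policy.

```python
# Properties assumed to allow at most one value per subject.
MAX_ONE = {":organism"}
# Assumed trust order between sources; the lower number wins during repair.
SOURCE_PRIORITY = {"uniprot": 0, "lab_db": 1}


def validate(quads):
    """Return (subject, property) pairs that violate the max-one constraint."""
    values = {}
    for s, p, o, _source in quads:
        if p in MAX_ONE:
            values.setdefault((s, p), set()).add(o)
    return sorted(key for key, objs in values.items() if len(objs) > 1)


def repair(quads, violations):
    """Keep only the highest-priority statement for each violating pair."""
    violating = set(violations)
    kept, best = [], {}
    for quad in quads:
        s, p, _o, source = quad
        if (s, p) not in violating:
            kept.append(quad)
            continue
        current = best.get((s, p))
        if current is None or SOURCE_PRIORITY[source] < SOURCE_PRIORITY[current[3]]:
            best[(s, p)] = quad
    return kept + list(best.values())


quads = [
    (":P04637", ":organism", ":human", "uniprot"),
    (":P04637", ":organism", ":mouse", "lab_db"),  # conflicting value
    (":P04637", ":name", "TP53", "uniprot"),
]
report = validate(quads)           # validation execution
repaired = repair(quads, report)   # repair implementation
recheck = validate(repaired)       # re-validation
```

In a SHACL-enabled triplestore the same loop would use the shapes graph for validation and SPARQL updates for repair; the source-priority rule shown here is only one possible repair policy.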

Protocol 2: High-Throughput MS Data Migration to Optimized Format

Purpose: Migrate large-scale mass spectrometry data to efficient format while preserving metadata.

Materials:

- Source data (mzML, vendor-specific formats)

- mzPeak conversion tools [14]

- High-performance computing environment

- Validation datasets (PRIDE archive samples) [17]

Methodology:

- Baseline Assessment

  - Measure source file sizes and access times

  - Profile metadata completeness using PSI-MS CV [14]

  - Establish performance benchmarks

- Format Migration

  - Convert source files with the mzPeak conversion tools, preserving metadata [14]

- Performance Validation

  - Compare file sizes and compression ratios

  - Measure data access times for common operations

  - Verify analytical equivalence with original data

- Interoperability Testing

  - Export to multiple downstream formats

  - Verify compatibility with analysis tools

  - Test cross-platform data exchange

Quality Control: Use ProteomeXchange validation suite to ensure compliance with community standards [17].

Workflow Visualizations

Semantic Validation and Repair Workflow

MS Data Migration to Optimized Format

The Scientist's Toolkit

Table 3: Essential Research Reagents & Computational Tools

| Tool/Standard | Function | Application Context | Implementation Consideration |

|---|---|---|---|

| SHACL (Shapes Constraint Language) | Data validation against defined constraints [13] | Knowledge graph quality assurance | Requires SHACL-processor; integrates with SPARQL |

| mzPeak Format | Next-generation MS data storage [14] | High-throughput proteomics/ metabolomics | Hybrid binary + metadata structure; backward compatibility needed |

| ProteomeXchange Consortium | Standardized data submission framework [17] | Proteomics data sharing & reproducibility | Mandates mzML/mzIdentML formats; provides PXD identifiers |

| RDFox with SHACL | In-memory triple store with validation [13] | Semantic integration with quality checks | High-performance; enables automated repair operations |

| OpenLAB CDS | Vendor-neutral chromatography data system [16] | Instrument control & data management | Supports multi-vendor equipment; simplifies training |

| Change Data Capture (CDC) | Real-time data change propagation [18] | Streaming analytics & live dashboards | Multiple types: log-based, trigger-based, timestamp-based |

| ELT (Extract-Load-Transform) | Modern data integration pattern [18] [19] | Cloud data warehousing & big data | Leverages target system processing power; preserves raw data |


Quantifying the Crisis: Data and Trends

The digital transformation of research has created a critical shortage of professionals who possess both technical data skills and scientific domain knowledge.

The Market Growth and Skills Demand

Table: Data Integration Market Growth and Talent Impact

| Metric | 2024/Current Value | 2030/Projected Value | CAGR/Growth Rate | Talent Implication |

|---|---|---|---|---|

| Data Integration Market [9] | $15.18 billion | $30.27 billion | 12.1% | High demand for integration specialists |

| Streaming Analytics Market [9] | $23.4 billion (2023) | $128.4 billion | 28.3% | Critical need for real-time data engineers |

| iPaaS Market [9] | | | 25.9% | Growth of low-code/no-code platforms |

| AI/ML VC Funding [9] | $100 billion (2024) | | 80% YoY increase | Intense competition for AI talent |

| Applications Integrated [20] | 29% (average enterprise) | | | 71% of enterprise apps remain unintegrated [20] |

The Pharma and Research Skills Gap

Table: Skills Gap Impact on Pharma and Research Sectors

| Challenge | Statistical Evidence | Impact on Research |

|---|---|---|

| Digital Transformation Hindrance | 49% of pharma professionals cite skills shortage as top barrier [21] | Slows adoption of AI in drug discovery and clinical trials [21] |

| AI/ML Adoption Barrier | 44% of life-science R&D orgs cite lack of skills [21] | Limits in-silico experiments and predictive modeling [21] |

| Cross-Disciplinary Talent Shortage | 70% of hiring managers struggle to find candidates with both pharma and AI skills [21] | Creates communication gaps between data scientists and biologists [21] |

| Developer Productivity Drain | 39% of developer time spent on custom integrations [20] | Diverts resources from core research algorithm development |

Core Challenges and Troubleshooting Guides

Challenge: Data Silos in Hybrid Research Environments

The Problem: Research data becomes trapped in disparate systems—on-premise high-performance computing clusters, cloud-based analysis tools, and proprietary instrument software [22].

Troubleshooting Guide:

Q: How can I identify if my team is suffering from data silos?

- A: Look for these symptoms: (1) Inability to correlate genomic data with clinical outcomes without manual CSV exports; (2) Multiple versions of the same dataset across different lab servers; (3) Researchers spending >30% of time on data wrangling instead of analysis [22].

Q: What is the first step to break down data silos?

- A: Implement a lightweight data virtualization layer. Tools like Denodo can provide a unified view of data across sources without moving it, creating a single source of truth for your research team [22].

Experimental Protocol: Implementing a Research Data Mesh

- Objective: To establish a federated data ownership model that scales with complex, multi-disciplinary research projects.

- Methodology:

  - Domain Identification: Map data to research domains (e.g., Genomics, Proteomics, Clinical Data) and assign domain-specific data owners.

  - Data Product Design: Treat each dataset as a "data product" with clear ownership, quality standards, and discoverability.

  - Self-Serve Infrastructure: Provide a central platform (e.g., Kubernetes-based) for domain teams to publish and consume data products.

  - Federated Governance: Establish cross-domain standards for metadata, security, and compliance [22].

Challenge: Integration of AI/ML Workloads

The Problem: Traditional IT systems cannot handle the iterative, data-intensive nature of machine learning models, leading to model drift and inconsistent predictions in production research environments [22].

Troubleshooting Guide:

Q: My ML model performs well in development but fails in production. Why?

- A: This is typically caused by model drift, where the statistical properties of production data change over time. Implement continuous monitoring for data drift (changes in input data distribution) and concept drift (changes in the relationship between input and output data) [22].
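
As a minimal sketch of the data-drift check described above, the two-sample Kolmogorov-Smirnov statistic compares a reference sample of one feature against recent production values. The function name, the sample values, and the 0.3 alert threshold are illustrative assumptions, not recommendations from [22].

```python
from bisect import bisect_right


def ks_statistic(reference, production):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the empirical CDFs of the two samples (0 = identical, 1 = disjoint)."""
    ref = sorted(reference)
    prod = sorted(production)
    max_gap = 0.0
    # The largest gap is always reached at one of the observed values.
    for x in ref + prod:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_prod = bisect_right(prod, x) / len(prod)
        max_gap = max(max_gap, abs(cdf_ref - cdf_prod))
    return max_gap


# Hypothetical feature values: training-time reference vs. recent production.
reference = [0.9, 1.0, 1.1, 1.2, 1.0, 0.95, 1.05, 1.15]
shifted = [x + 0.5 for x in reference]

DRIFT_THRESHOLD = 0.3  # assumed alert level; tune per feature
drift_detected = ks_statistic(reference, shifted) > DRIFT_THRESHOLD
```

A check like this covers data drift only. Concept drift requires comparing predictions against ground-truth outcomes as they become available.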

Q: How can I ensure reproducibility in my machine learning experiments?

- A: Adopt MLOps platforms like MLflow or Kubeflow to automate the end-to-end ML lifecycle. This includes versioning datasets, model hyperparameters, and code to create reproducible workflows [22].
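
To illustrate what versioning datasets, hyperparameters, and code means in practice, the sketch below builds a small run manifest from content hashes. It does not use the MLflow or Kubeflow APIs; the function names, manifest fields, and example values are hypothetical.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """SHA-256 hex digest used as a content-based version identifier."""
    return hashlib.sha256(payload).hexdigest()


def build_run_manifest(dataset_bytes, hyperparameters, code_version):
    """Record everything needed to re-create a training run."""
    # Sorting keys makes the hash independent of dict insertion order.
    params_json = json.dumps(hyperparameters, sort_keys=True)
    return {
        "dataset_sha256": fingerprint(dataset_bytes),
        "params_sha256": fingerprint(params_json.encode("utf-8")),
        "hyperparameters": hyperparameters,
        "code_version": code_version,
    }


manifest = build_run_manifest(
    dataset_bytes=b"sample_id,label\nS1,1\nS2,0\n",  # stand-in for a real file
    hyperparameters={"learning_rate": 0.01, "max_depth": 6},
    code_version="abc1234",  # hypothetical git commit hash
)
```

Two runs with identical manifests used the same data, parameters, and code, so any difference in results points to nondeterminism in the environment or the training procedure.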

Experimental Protocol: MLOps for Research Validation

- Objective: To create a reproducible, continuous retraining pipeline for predictive models in drug discovery.

- Methodology:

  - Containerization: Package model training and inference code using Docker for consistent environments.

  - Orchestration: Use Kubernetes to manage containerized workloads and services.

  - Automated Retraining: Set up trigger-based (e.g., time, data drift) retraining pipelines using Kubeflow Pipelines.

  - Model Serving: Deploy models as RESTful APIs using TensorFlow Serving or TorchServe for integration with other research applications [22].

MLOps Model Lifecycle Management

Challenge: Real-Time Data Integration at Scale

The Problem: Batch-based data processing creates latency in high-throughput informatics, delaying critical insights from streaming data sources like genomic sequencers and real-time patient monitoring systems [9] [22].

Troubleshooting Guide:

Q: My data pipelines cannot handle the volume from our new sequencer. What architecture should I use?

- A: Transition from batch to Event-Driven Architecture (EDA). Platforms like Apache Kafka can process and route high-volume event streams in real time, enabling services to react instantly to new data events [22].

Q: How can I reduce network load when processing IoT data from lab equipment?

- A: Implement edge pre-processing. Use tools like AWS Greengrass or Azure IoT Edge to filter, enrich, or aggregate data at the edge—near the source—before transmission to central systems [22].
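
The aggregation step can be sketched independently of any edge platform. The example below collapses raw readings into per-window summaries so that only the summaries need to be transmitted; it does not use AWS Greengrass or Azure IoT Edge APIs, and the window size and temperature values are assumptions chosen for illustration.

```python
from statistics import mean


def aggregate_windows(readings, window_size):
    """Collapse raw readings into per-window summaries before transmission."""
    summaries = []
    for start in range(0, len(readings), window_size):
        window = readings[start:start + window_size]
        summaries.append({
            "count": len(window),
            "min": min(window),
            "max": max(window),
            "mean": mean(window),
        })
    return summaries


# Hypothetical freezer temperatures (°C) sampled once per second.
raw = [-80.1, -80.0, -79.9, -80.2, -79.8, -80.0, -75.0, -80.1]
summaries = aggregate_windows(raw, window_size=4)
```

Keeping the minimum and maximum alongside the mean preserves excursions (such as the -75.0 reading) that an average alone would hide.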

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential "Reagents" for Modern Research Data Infrastructure

| Solution Category | Example Tools/Platforms | Function in Research Context |

|---|---|---|

| iPaaS (Integration Platform as a Service) | MuleSoft, Boomi, ONEiO [20] | Pre-built connectors to orchestrate data flows between legacy systems (e.g., LIMS) and modern cloud applications without extensive coding. |

| Event Streaming Platforms | Apache Kafka, Apache Pulsar [22] | Central nervous system for real-time data; ingests and processes high-volume streams from instruments and sensors for immediate analysis. |

| API Management | Kong, Apigee, AWS API Gateway [22] | Standardizes and secures how different research applications and microservices communicate, preventing "API sprawl." |

| MLOps Platforms | MLflow, Kubeflow, SageMaker Pipelines [22] | Provides reproducibility and automation for the machine learning lifecycle, from experiment tracking to model deployment and monitoring. |

| Data Virtualization | Denodo, TIBCO Data Virtualization [22] | Allows querying of data across multiple, disparate sources (e.g., EHR, genomic databases) in real-time without physical movement, creating a unified view. |

| No-Code Integration Tools | ZigiOps [22] | Enables biostatisticians and researchers to build and manage integrations between systems like Jira and electronic lab notebooks without deep coding knowledge. |

Strategic Solutions and Implementation Framework

Solution: Upskilling and Cross-Training Strategies

Table: Comparative Analysis of Talent Development Strategies

| Strategy | Implementation Protocol | Effectiveness Metrics | Case Study / Evidence |

|---|---|---|---|

| Reskilling Existing Staff | Identify staff with an aptitude for data thinking; partner with online learning platforms; provide 20% time for data projects | 25% boost in retention [21]; 15% efficiency gains [21]; half the cost of new hiring [21] | Johnson & Johnson trained 56,000 employees in AI skills [21] |

| Creating "AI Translator" Roles | Recruit professionals with hybrid backgrounds; develop clear career pathways; position the role as a bridge between IT and research | Improved project success rates; reduced miscommunication; faster implementation cycles | Bayer partnered with IMD to upskill 12,000 managers, achieving 83% completion [21] |

| Partnering with Specialized Firms | Outsource specific data functions via FSP model [23]; maintain core strategic oversight internally | Access to specialized skills without long-term overhead; faster project initiation; knowledge transfer to internal teams | 48% of SMBs partner with MSPs for cloud management (up from 36%) [9] |

Integrated Talent Strategy Framework

Solution: Technology Implementation and Architecture

Troubleshooting Guide:

Q: We are overwhelmed by API sprawl. How can we manage integration complexity?

- A: Deploy an API Gateway (e.g., Kong, Apigee) for centralized management. This standardizes API access, implements consistent authentication, and manages traffic routing from a single control plane [22].

Q: What is the most critical first step in modernizing our research data infrastructure?

- A: Conduct an Application Portfolio Audit. Research shows the average enterprise uses 897 applications, with only 29% integrated. Identify which systems are critical for your research workflows and prioritize their integration [20].

Experimental Protocol: Implementing Integration Operations (IntOps)

- Objective: To transition integration from a project-based task to a continuous operational capability.

- Methodology:

  - Centralized Team: Establish a central Integration Center of Excellence (CoE) with embedded specialists in research units.

  - Standardization: Enforce enterprise-wide data standards, schema policies, and API specifications.

  - Automation: Implement CI/CD pipelines for integration testing and deployment.

  - Monitoring: Use data observability tools to proactively monitor data health across pipelines [20].

Troubleshooting Guides

Guide 1: Resolving Multi-Omic Data Integration Challenges

Problem: Researchers encounter failures when integrating disparate omics datasets (genomics, transcriptomics, proteomics, metabolomics) from multiple sources, leading to incomplete or inaccurate unified biological profiles.

Solution: A systematic approach to identify and resolve data integration issues.

Identify the Root Cause

- Scrutinize Error Messages: Check logs for specific clues about format mismatches, connection timeouts, or schema violations [24].

- Profile Data Sources: Examine individual omics data files for inconsistencies in identifiers, missing values, duplicate entries, or incompatible data formats [24].

- Check Schema & Structure: Verify that the structure (e.g., column headers, data types) is consistent across all source files [24].

Verify Connections and Data Flow

- Confirm API Endpoints: Ensure all database connection strings, API endpoints, and authentication tokens (e.g., for EHR systems or data repositories) are valid and active [24].

- Assess Network Stability: Check for network latency or firewall settings that might interrupt large data transfers [24].

- Use Monitoring Tools: Implement logging and monitoring tools to track data flow and pinpoint the exact stage where failures occur [24].

Ensure Data Quality and Cleaning

- Clean Source Data: Address duplicate records, incorrect values, and missing entries before integration [24].

- Implement Validation Checks: Use data quality tools to perform automated profiling, validation, and cleansing [24].

- Standardize Formats: Enforce consistent formats for critical fields (e.g., date/time, gene identifiers, patient IDs) across all datasets [25].
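
A minimal sketch of format standardization for two of the field types mentioned above. The list of accepted date formats (including the assumption that slash-separated dates are day-first) and the helper names are illustrative; a real pipeline would confirm these conventions with each data provider.

```python
from datetime import datetime

# Assumed input formats; ambiguous day/month order must be agreed per source.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y/%m/%d")


def normalize_date(value: str) -> str:
    """Return the date as ISO 8601 (YYYY-MM-DD), trying each known format."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {value!r}")


def normalize_ensembl_id(value: str) -> str:
    """Upper-case an Ensembl gene ID and drop its version suffix."""
    return value.strip().upper().split(".")[0]
```

For example, `normalize_ensembl_id("ensg00000141510.17")` yields `ENSG00000141510`, so records that cite different annotation versions of the same gene can be joined on one key.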

Update and Validate Systems

- Update Software: Ensure all data integration tools, databases, and related software are updated to the latest versions to resolve known bugs and compatibility issues [24].

- Test Thoroughly: After making changes, run tests with a small, validated dataset before scaling up to full production volumes [24].

Guide 2: Addressing EHR Data Limitations for Research

Problem: Extracted EHR data is inconsistent, contains missing fields, or lacks the structured format required for robust integration with experimental omics data.

Solution: A protocol to enhance the quality and usability of EHR-derived data.

- Define Data Requirements: Clearly specify the essential variables (phenotypes, lab values, medications) needed for your study to guide the extraction process.

- Implement Pre-Processing Routines:

  - Handle Missing Data: Develop rules for imputing or flagging missing data.

  - Normalize Terminology: Map local EHR codes to standard terminologies (e.g., SNOMED CT, LOINC) to ensure consistency.

  - De-identify Data: Remove or encrypt protected health information (PHI) in compliance with regulations like HIPAA [25].

- Create a Validation Layer: Use scripts or tools to check processed EHR data against predefined quality rules (e.g., value ranges, logical checks) before integration.

- Maintain Audit Trails: Keep detailed logs of all extraction and transformation steps for data lineage and reproducibility [25].
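
The validation layer in this protocol can be sketched as a function that applies completeness, range, and logical checks to each processed record. The field names, the 0 to 120 age bound, and the one-year tolerance are assumptions made for illustration.

```python
from datetime import date


def validate_record(record, today):
    """Return a list of rule violations for one processed EHR record."""
    problems = []
    # Completeness: required fields must be present and non-empty.
    for field in ("patient_id", "birth_date", "age_years"):
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    if problems:
        return problems
    # Range check: an assumed plausible bound for age.
    if not 0 <= record["age_years"] <= 120:
        problems.append("age_years out of range")
    # Logical check: recorded age must agree with birth date (within 1 year).
    born = record["birth_date"]
    had_birthday = (today.month, today.day) >= (born.month, born.day)
    derived = today.year - born.year - (0 if had_birthday else 1)
    if abs(derived - record["age_years"]) > 1:
        problems.append("age_years inconsistent with birth_date")
    return problems
```

Records that return a non-empty list can be routed to a quarantine table rather than silently dropped, which keeps the audit trail complete.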

Frequently Asked Questions (FAQs)

Q1: What is the recommended sampling frequency for different omics layers in a longitudinal study? The optimal frequency varies by omics layer due to differing biological stability and dynamics [26]. A general hierarchy and suggested sampling frequency is provided in the table below.

| Omics Layer | Key Characteristics | Recommended Sampling Frequency & Notes |

|---|---|---|

| Genomics | Static snapshot of DNA; foundational profile [26]. | Single time point (unless studying somatic mutations) [26]. |

| Transcriptomics | Highly dynamic; sensitive to environment, treatment, and circadian rhythms [26]. | High frequency (e.g., hours/days); most responsive layer for monitoring immediate changes [26]. |

| Proteomics | More stable than RNA; reflects functional state of cells [26]. | Moderate frequency (e.g., weeks/months); proteins have longer half-lives [26]. |

| Metabolomics | Highly sensitive and variable; provides a real-time functional readout [26]. | High to moderate frequency (e.g., days/weeks); captures immediate metabolic shifts [26]. |

Q2: Our data integration pipeline fails intermittently. How can we improve its reliability? Implement high-availability features and robust error handling [27] [25].

- Automate Restart and Failover: Configure your integration service to automatically restart or failover to another node in case of process failure [27].

- Enable Recovery: Use tools that can automatically recover canceled workflow instances after an unexpected shutdown [27].

- Orchestrate with Tools: Use orchestration tools like Apache Airflow or AWS Step Functions to schedule, monitor, and manage workflows with built-in retry logic and failure notifications [25].
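
The retry behavior that orchestration tools provide can be illustrated with a small standalone helper. This is a sketch of exponential backoff in plain Python, not the Apache Airflow or AWS Step Functions API; the function name and default values are assumptions.

```python
import time


def run_with_retries(task, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call task(); on failure wait base_delay * 2**(attempt - 1) and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            delay = base_delay * 2 ** (attempt - 1)
            print(f"attempt {attempt} failed ({exc}); retrying in {delay}s")
            sleep(delay)
```

The `sleep` parameter is injectable so the helper can be tested without real waiting. In production, the final failure would typically also trigger a notification.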

Q3: How can we ensure regulatory compliance (e.g., HIPAA, GDPR) when integrating sensitive patient multi-omics and EHR data?

- Implement Data Governance: Use platforms with built-in governance features, including data lineage tracking, cataloging, and fine-grained, role-based access control (RBAC) [25].

- Encrypt Data: Apply encryption for both data-at-rest and data-in-transit using enterprise-grade key management services [25].

- De-identify and Mask: Use data masking and tokenization techniques to protect sensitive personally identifiable information (PII) and PHI [25].
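
As a hedged sketch of tokenization, the example below replaces a linking identifier with a keyed HMAC-SHA256 token and drops direct identifiers. The field names and the 16-character truncation are illustrative assumptions, and in practice the secret key would come from a key management service rather than source code.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed, deterministic token (HMAC-SHA256)."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # truncated for readability in this sketch


def mask_record(record, secret_key, id_fields=("patient_id",), drop_fields=("name",)):
    """Tokenize linking identifiers and drop direct identifiers entirely."""
    masked = {k: v for k, v in record.items() if k not in drop_fields}
    for field in id_fields:
        if field in masked:
            masked[field] = pseudonymize(str(masked[field]), secret_key)
    return masked
```

Because the token is deterministic for a given key, the same patient receives the same token in the omics and EHR tables, so the datasets can still be joined after masking.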

Q4: We are dealing with huge, high-dimensional multi-omics datasets. What architectural approach is most scalable? Adopt a modern cloud-native ELT (Extract, Load, Transform) approach [25].

- Extract and Load: First, move raw data into a scalable cloud data warehouse (e.g., Snowflake, BigQuery).

- Transform: Then, perform transformations inside the warehouse using its elastic compute power. This is faster and more cost-effective than traditional ETL for large datasets [25].

- Use Serverless Services: Leverage serverless and auto-scaling services (e.g., AWS Lambda for compute, a managed Apache Kafka service for streaming ingestion) to handle data volume spikes without manual intervention [25].

Experimental Protocols & Data Summaries

Table 1: Multi-Omics Data Integration Experimental Framework

| Experimental Phase | Core Objective | Key Methodologies & Technologies | Primary Outputs |

|---|---|---|---|

| 1. Data Sourcing & Profiling | Acquire and assess quality of raw data from diverse omics assays and EHRs. | High-throughput sequencing (NGS), Mass Spectrometry, EHR API queries, Data profiling tools. | Raw sequencing files (FASTQ), Spectral data, De-identified patient records, Data quality reports. |

| 2. Data Preprocessing & Harmonization | Clean, normalize, and align disparate datasets to a common reference. | Bioinformatic pipelines (e.g., Trimmomatic, MaxQuant), Schema mapping, Terminology standardization (e.g., LOINC). | Processed count tables, Normalized abundance matrices, Harmonized clinical data tables. |

| 3. Integrated Data Analysis | Derive biologically and clinically meaningful insights from unified data. | Multi-omics statistical models (e.g., MOFA), AI/ML algorithms, Digital twin simulations, Pathway analysis (GSEA, KEGG). | Biomarker signatures, Disease subtyping models, Predictive models of treatment response, Mechanistic insights. |

| 4. Validation & Compliance | Ensure analytical robustness and adherence to regulatory standards. | N-of-1 validation studies, Independent cohort validation, Data lineage tracking (e.g., with AWS Glue Data Catalog), Audit logs. | Validated biomarkers, Peer-reviewed publications, Regulatory submission packages, Reproducible workflow documentation. |

Table 2: Data Quality Control Framework for Multi-Omic Integration

| Data Quality Dimension | Checkpoints for Genomics/Transcriptomics | Checkpoints for Proteomics/Metabolomics | Checkpoints for EHR Data |

|---|---|---|---|

| Completeness | Read depth coverage; percentage of called genotypes. | Abundance values for QC standards; missing value rate per sample. | Presence of required fields (e.g., diagnosis, key lab values). |

| Consistency | Consistent gene identifier format (e.g., Ensembl). | Consistent sample run order and injection volume. | Standardized coding (e.g., SNOMED CT for diagnoses). |

| Accuracy | Concordance with known control samples; low batch effect. | Mass accuracy; retention time stability. | Plausibility of values (e.g., birth date vs. age). |

| Uniqueness | Removal of PCR duplicate reads. | Removal of redundant protein entries from database search. | Deduplication of patient records. |
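
One of the completeness checkpoints in Table 2, the missing value rate per sample, is simple to compute. The sketch below assumes missing measurements are encoded as `None`; the 50% cutoff and the abundance values are placeholders, not thresholds taken from the cited sources.

```python
def missing_rate_per_sample(matrix):
    """Fraction of missing (None) feature values for each sample."""
    return {
        sample: sum(v is None for v in values) / len(values)
        for sample, values in matrix.items()
    }


def flag_incomplete(matrix, max_missing=0.5):
    """Samples whose missing-value rate exceeds the allowed maximum."""
    rates = missing_rate_per_sample(matrix)
    return sorted(s for s, rate in rates.items() if rate > max_missing)


# Hypothetical protein abundances; None marks a missing measurement.
abundances = {
    "S1": [10.2, 11.5, None, 9.8],
    "S2": [None, None, None, 8.1],
    "S3": [10.0, 11.1, 12.3, 9.9],
}
flagged = flag_incomplete(abundances)
```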

Visualization Diagrams

Diagram 1: Multi-Omic Data Integration Workflow

Diagram 2: High-Availability Data Integration Service Architecture

The Scientist's Toolkit

Research Reagent Solutions for Multi-Omic Studies

| Item | Function in Multi-Omic Integration |

|---|---|

| Reference Standards | Unlabeled or isotopically labeled synthetic peptides, metabolites, or RNA spikes used to calibrate instruments, normalize data across batches, and ensure quantitative accuracy in proteomic and metabolomic assays. |

| Bioinformatic Pipelines | Software suites (e.g., NGSCheckMate, MSstats, MetaPhlAn) for processing raw data from specific omics platforms, performing quality control, and generating standardized output files ready for integration. |

| Data Harmonization Tools | Applications and scripts that map diverse data types (e.g., gene IDs, clinical codes) to standardized ontologies (e.g., HUGO Gene Nomenclature, LOINC, SNOMED CT), enabling seamless data fusion. |

| Multi-Omic Analysis Platforms | Integrated software environments (e.g., mixOmics, OmicsONE) that provide statistical and machine learning models specifically designed for the joint analysis of multiple omics datasets. |

| Data Governance & Lineage Tools | Platforms (e.g., Talend, Informatica, AWS Glue Data Catalog) that track the origin, transformation, and usage of data throughout its lifecycle, which is critical for reproducibility and regulatory compliance [25]. |

Architectural Frameworks and Integration Methodologies for Complex Biomedical Data

Core Concepts: Definitions and Fundamental Differences

What is Physical Data Integration?

Physical data integration, traditionally known as ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform), involves consolidating data from multiple source systems into a single, physical storage location such as a data warehouse [28]. This process physically moves and transforms data from its original sources to a centralized repository, creating a persistent unified dataset [29].

Key Methodology: The ETL process follows three distinct stages [28] [3]:

- Extraction: Data is collected from diverse source systems and applications

- Transformation: Data is cleansed, formatted, and standardized to ensure consistency and compatibility

- Loading: Transformed data is loaded into a centralized target system like a data warehouse
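
The three stages can be sketched end to end with Python's built-in `sqlite3` module, using an in-memory database as a stand-in for a data warehouse. The table name, column names, and source rows are made up for illustration.

```python
import sqlite3

# Extract: rows as they might arrive from two source systems (made-up data).
source_rows = [
    {"gene": " tp53 ", "expression": "12.5"},
    {"gene": "BRCA1", "expression": "7.25"},
    {"gene": "brca1", "expression": "7.25"},  # duplicate after cleaning
]


def transform(rows):
    """Trim and upper-case gene symbols, cast values, drop exact duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        record = (row["gene"].strip().upper(), float(row["expression"]))
        if record not in seen:
            seen.add(record)
            cleaned.append(record)
    return cleaned


def load(records, connection):
    """Write transformed records into the central target table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS expression (gene TEXT, value REAL)"
    )
    connection.executemany("INSERT INTO expression VALUES (?, ?)", records)
    connection.commit()


warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(source_rows), warehouse)
```

In an ELT variant, the raw rows would be loaded first and the cleaning expressed as SQL run inside the warehouse.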

What is Virtual Data Integration?

Data virtualization creates an abstraction layer that provides a unified, real-time view of data from multiple disparate sources without physically moving or replicating the data [28] [29]. This approach uses advanced data abstraction techniques to integrate and present data as if it were in a single database while the data remains in its original locations [28].

Key Methodology: The virtualization process operates through [28] [29]:

- A virtual data layer that connects to various source systems

- Real-time query capabilities across distributed data sources

- Caching mechanisms to optimize performance

- Standard query interfaces for user access

Comparative Framework: Architectural Differences

Technical Comparison Table

| Characteristic | Physical Data Integration | Virtual Data Integration |

|---|---|---|

| Data Movement | Physical extraction and loading into target system [28] | No physical movement; data remains in source systems [28] |

| Data Latency | Batch-oriented processing; potential delays in data updates [5] | Real-time or near real-time access to current data [29] |

| Implementation Time | Longer development cycles due to complex ETL processes [28] | Shorter development cycles with easier modifications [28] |

| Infrastructure Cost | Higher storage costs due to data duplication [3] | Lower storage requirements as data isn't replicated [29] |

| Data Governance | Centralized control in the data warehouse [5] | Distributed governance; security managed at source [28] |

| Performance | Optimized for complex queries and historical analysis [28] | Dependent on network latency and source system performance [29] |

| Scalability | Vertical scaling of centralized repository required [3] | Horizontal scaling through additional source connections [29] |

Performance Benchmarking Table

| Metric | Physical Integration | Virtual Integration |

|---|---|---|

| Data Processing Volume | Handles massive data volumes efficiently [3] | Best for moderate volumes with real-time needs [29] |

| Query Complexity | Excellent for complex joins and aggregations [28] | Limited by distributed query optimization challenges [29] |

| Historical Analysis | Ideal for longitudinal studies and trend analysis [28] [29] | Limited historical context without data consolidation [29] |

| Real-time Analytics | Limited by batch processing schedules [5] | Superior for operational decision support [29] |

| System Maintenance | Requires dedicated ETL pipeline maintenance [3] | Minimal maintenance of abstraction layer [28] |

Troubleshooting Guide: Common Technical Challenges

FAQ: Performance and Scalability Issues

Q: Our virtual data queries are experiencing slow performance with large datasets. What optimization strategies can we implement?

A: Implement caching mechanisms for frequently accessed data to reduce repeated queries to source systems [28]. Use query optimization techniques to minimize data transfer across networks, and consider creating summary tables for complex analytical queries. For large-scale analytical workloads, complement virtualization with targeted physical integration for historical data [29].
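
The caching strategy can be illustrated with a small time-to-live (TTL) cache placed in front of a source query. This is a sketch, not the caching API of any virtualization product; the class and parameter names are hypothetical.

```python
import time


class TTLCache:
    """Cache query results for ttl_seconds to spare the source systems."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # query text -> (expiry time, result)

    def get_or_fetch(self, query, fetch):
        now = self.clock()
        entry = self._entries.get(query)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: no source-system round trip
        result = fetch(query)
        self._entries[query] = (now + self.ttl, result)
        return result
```

The TTL is the trade-off knob: a longer value reduces load on source systems but increases how stale a "real-time" view may be.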

Q: We're facing data quality inconsistencies across integrated sources. How can we establish reliable data governance?

A: Implement robust data governance frameworks with defined data stewards who guide strategy and enforce policies [3]. Establish clear data quality metrics and validation rules applied at both source systems and during integration processes. Utilize data profiling tools to identify inconsistencies early in the integration pipeline [3].

Q: Our ETL processes are consuming excessive time and resources. What approaches can improve efficiency?

A: Implement incremental loading strategies rather than full refreshes to process only changed data [3]. Consider modern ELT approaches that leverage the processing power of target databases for transformation [28]. Utilize parallel processing capabilities and optimize transformation logic to reduce processing overhead [3].
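
A minimal sketch of incremental loading using a timestamp watermark: only rows modified since the previous run are copied. The row structure and the `updated_at` field are assumptions, and the timestamps are ISO 8601 strings in one format so that string comparison matches chronological order.

```python
def incremental_load(source_rows, target, last_watermark):
    """Copy only rows modified after last_watermark; return the new watermark."""
    new_watermark = last_watermark
    for row in source_rows:
        # Strict comparison: rows stamped exactly at the watermark are
        # treated as already loaded by the previous run.
        if row["updated_at"] > last_watermark:
            target[row["id"]] = row  # upsert keyed on the primary key
            new_watermark = max(new_watermark, row["updated_at"])
    return new_watermark


source = [
    {"id": 1, "updated_at": "2025-01-01T00:00:00", "value": "a"},
    {"id": 2, "updated_at": "2025-01-02T00:00:00", "value": "b"},
]
target = {}
watermark = incremental_load(source, target, last_watermark="")
```

The returned watermark must be persisted between runs. Timestamp-based detection cannot see deleted rows, which is one reason log-based change data capture is sometimes preferred.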

FAQ: Implementation and Technical Challenges

Q: How do we handle heterogeneous data structures and formats across source systems?

A: Utilize ETL tools with robust transformation capabilities to manage different data formats and structures [3]. Implement standardized data models and mapping techniques to create consistency across disparate sources. For virtualization, ensure the platform supports multiple data formats and provides flexible mapping options [28].

Q: What security measures are critical for each integration approach?

A: For physical integration, implement encryption for data in transit and at rest, along with role-based access controls for the data warehouse [5]. For virtualization, leverage security protocols at source systems and implement comprehensive access management in the virtualization layer [28]. Both approaches benefit from data masking techniques for sensitive information [5].

Q: How can we manage unforeseen costs in data integration projects?

A: Implement contingency planning with dedicated budgets for unexpected challenges [3]. Conduct thorough source system analysis before implementation to identify potential complexity. Establish regular monitoring of integration processes to identify issues early before they become costly problems [3].

Experimental Protocol: Implementation Methodology

Workflow for Comparative Analysis

Performance Testing Methodology

Test Data Preparation

- Create representative datasets of varying sizes (1GB, 10GB, 100GB)

- Include structured, semi-structured, and unstructured data formats

- Implement data quality benchmarks for accuracy measurement

Query Performance Assessment

- Execute standardized query sets against both implementations

- Measure response times for simple lookups, complex joins, and aggregations

- Test concurrent user access with increasing load (10, 50, 100 concurrent users)

Data Freshness Evaluation

- Measure time from source data modification to availability for querying

- Test both scheduled updates and real-time synchronization approaches

- Assess impact on source system performance during data access
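
For the query performance assessment above, a small timing harness can run the same query function against each implementation and summarize the latencies. The function name and the choice of median, 95th percentile, and maximum are assumptions; `query_fn` stands for whatever callable executes one standardized query.

```python
import statistics
import time


def benchmark(query_fn, repetitions=20):
    """Run query_fn repeatedly and summarize wall-clock latency in seconds."""
    latencies = []
    for _ in range(repetitions):
        start = time.perf_counter()
        query_fn()
        latencies.append(time.perf_counter() - start)
    latencies.sort()
    # Nearest-rank 95th percentile, computed with integer arithmetic.
    p95_index = (95 * len(latencies) + 99) // 100 - 1
    return {
        "median": statistics.median(latencies),
        "p95": latencies[p95_index],
        "max": latencies[-1],
    }
```

Running the harness once per implementation with identical query sets gives directly comparable numbers. Concurrency tests would wrap the same call in a thread or process pool.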

Research Reagent Solutions: Essential Tools and Platforms

| Tool Category | Representative Solutions | Primary Function |

|---|---|---|

| Physical Data Integration Platforms | CData Sync [28], Workato [5] | ETL/ELT processes, data warehouse population, batch processing |

| Data Virtualization Platforms | CData Connect Cloud [28], Denodo [29] | Real-time data abstraction, federated query processing, virtual data layers |

| Hybrid Integration Solutions | FactoryThread [29] | Combines physical and virtual approaches, legacy system modernization |

| Data Quality & Governance | Custom profiling tools, data mapping applications [3] | Data validation, quality monitoring, metadata management |

| Cloud Data Warehouses | Snowflake [29] | Scalable storage for physically integrated data, analytical processing |

Strategic Implementation Guidelines

Decision Framework: Selection Criteria

Choose Physical Data Integration When:

- Performing large-scale historical analysis and data mining [28] [29]

- Establishing a single source of truth for enterprise reporting [5]

- Working with complex data transformations requiring high processing power [28]

- Compliance requirements mandate centralized data storage and audit trails [5]

Choose Virtual Data Integration When:

- Real-time or near real-time data access is critical for operations [29]

- Integrating data from systems where physical extraction is challenging [28]

- Rapid prototyping and agile development methodologies are prioritized [28]

- Source data changes frequently and requires immediate availability [29]

Hybrid Approach Implementation

For large-scale informatics infrastructures, a hybrid approach often delivers optimal results by leveraging the strengths of both methodologies [28] [29]:

- Foundation Layer: Use physical integration to create a historical data repository for longitudinal studies

- Operational Layer: Implement virtualization for real-time access to current operational data

- Orchestration: Deploy middleware to coordinate between physical and virtual layers

- Governance Framework: Establish unified data governance across both approaches

This hybrid model supports both deep historical analysis through physically integrated data and agile operational decision-making through virtualized access, effectively addressing the diverse requirements of high-throughput informatics research environments.

Frequently Asked Questions (FAQs)

Q1: What is BERT and what specific data integration problem does it solve? BERT (Batch-Effect Reduction Trees) is a high-performance computational method designed for integrating large-scale omic datasets afflicted with technical biases (batch effects) and extensive missing values. It specifically addresses the challenge of combining independently acquired datasets from technologies like proteomics, transcriptomics, and metabolomics, where incomplete data profiles and measurement-specific biases traditionally hinder robust quantitative comparisons [30].

Q2: How does BERT improve upon existing methods like HarmonizR? BERT offers significant advancements over existing tools, primarily through its tree-based integration framework. The table below summarizes its key improvements.

Table: Performance Comparison of BERT vs. HarmonizR

| Performance Metric | BERT | HarmonizR (Full Dissection) | HarmonizR (Blocking of 4) |

|---|---|---|---|

| Data Retention | Retains all numeric values [30] | Up to 27% data loss [30] | Up to 88% data loss [30] |

| Runtime Improvement | Up to 11x faster [30] | Baseline | Varies with blocking strategy [30] |

| ASW Score Improvement | Up to 2x improvement [30] | Not specified | Not specified |
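
For readers unfamiliar with the ASW (average silhouette width) metric in the table, the sketch below computes the standard silhouette definition with Euclidean distance. It is an illustration of the metric only; BERT's own implementation may differ in details, and the toy points are invented. At least two distinct labels are required.

```python
from math import dist
from statistics import mean


def average_silhouette_width(points, labels):
    """Mean silhouette coefficient over all points (range -1 to 1)."""
    groups = {}
    for point, label in zip(points, labels):
        groups.setdefault(label, []).append(point)
    scores = []
    for point, label in zip(points, labels):
        own = groups[label]
        if len(own) == 1:
            scores.append(0.0)  # convention for singleton groups
            continue
        # a: mean distance to the other members of the point's own group
        # (the distance of the point to itself contributes 0 to the sum).
        a = sum(dist(point, q) for q in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other group.
        b = min(mean(dist(point, q) for q in members)
                for other, members in groups.items() if other != label)
        scores.append(0.0 if max(a, b) == 0 else (b - a) / max(a, b))
    return mean(scores)


points = [(0, 0), (0, 1), (10, 0), (10, 1)]
asw_separated = average_silhouette_width(points, ["A", "A", "B", "B"])
asw_mixed = average_silhouette_width(points, ["A", "B", "A", "B"])
```

When the labels are batch identifiers, a value near 1 (as in `asw_separated`) means samples still cluster by batch, while a value at or below 0 (as in `asw_mixed`) means batches are well mixed.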

Q3: What are the mandatory input data requirements for BERT?

Your input data must be structured as a dataframe (or SummarizedExperiment) with samples in rows and features in columns. A mandatory "Batch" column must indicate the batch origin for each sample using an integer or string. Missing values must be labeled as NA. Crucially, each batch must contain at least two samples [31].

Q4: Can BERT handle datasets with known biological conditions or covariates?

Yes. BERT allows you to specify categorical covariates (e.g., "healthy" vs. "diseased") using additional columns prefixed with Cov_. The algorithm uses this information to preserve biological variance while removing technical batch effects. For each feature, BERT requires at least two numeric values per batch and unique covariate level to perform the adjustment [31].

Q5: What should I do if my dataset has batches with unique biological classes?

For datasets where some batches contain unique classes or samples with unknown classes, BERT provides a "Reference" column. You can designate samples with known classes as references (encoded with an integer or string), and samples with unknown classes with 0. BERT will use the references to learn the batch-effect transformation and then co-adjust the non-reference samples. This column is mutually exclusive with covariate columns [31].

Troubleshooting Guides

Issue 1: Installation and Dependency Errors

Problem: Errors occur during the installation of the BERT package.

Solution:

- Recommended Installation: Install BERT via Bioconductor, which automatically handles dependencies.

- Manual Dependency Installation: If needed, install key dependencies manually.

- Development Version: For the latest development version, use `devtools`. Ensure your system has the necessary build tools [32].

Issue 2: Input Data Formatting and Validation Errors

Problem: The BERT function fails with errors related to input data format.

Solution:

- Verify Structure: Ensure your data is a dataframe with features as columns and a dedicated "Batch" column.

- Check Batch Size: Confirm every batch has at least two samples.

- Inspect Missing Values: All missing values must be `NA`.

- Validate Covariates/References: If used, ensure no `NA` values are present in `Cov_*` or `Reference` columns. The `Reference` column cannot be used simultaneously with covariate columns [31].

- Use Built-in Verification: The `BERT()` function runs internal checks by default (`verify=TRUE`). Heed any warnings or error messages it provides.
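
The structural checks in this list can also be run on the data before it reaches BERT, for example in an upstream export script. The sketch below is written in Python and is only an illustration of the rules stated above. `check_bert_input` is a hypothetical helper, samples are represented as a list of dictionaries, and `None` stands in for `NA`. It does not replace BERT's built-in verification.

```python
from collections import Counter


def check_bert_input(rows):
    """Return a list of human-readable problems found in `rows`.

    `rows` is a list of dicts, one per sample; None stands in for NA.
    Hypothetical helper for illustration, not part of the BERT package.
    """
    if not rows:
        return ["no samples provided"]

    # A "Batch" value is mandatory for every sample.
    if any(row.get("Batch") is None for row in rows):
        return ['every sample needs a non-missing "Batch" value']

    problems = []

    # Each batch must contain at least two samples.
    batch_sizes = Counter(row["Batch"] for row in rows)
    for batch, size in sorted(batch_sizes.items(), key=lambda kv: str(kv[0])):
        if size < 2:
            problems.append(f"batch {batch!r} has only {size} sample(s)")

    columns = set().union(*(row.keys() for row in rows))
    cov_cols = sorted(c for c in columns if c.startswith("Cov_"))
    has_reference = "Reference" in columns

    # Reference and covariate columns are mutually exclusive.
    if cov_cols and has_reference:
        problems.append('"Reference" cannot be combined with Cov_* columns')

    # No missing values are allowed in covariate or reference columns.
    for col in cov_cols + (["Reference"] if has_reference else []):
        if any(row.get(col) is None for row in rows):
            problems.append(f"column {col!r} contains missing values")

    return problems
```

An empty list means none of these particular rules were violated. Feature-level requirements are not covered by this sketch.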

Issue 3: Poor Batch-Effect Correction Results

Problem: After running BERT, the batch effects are not sufficiently reduced.

Solution:

- Check Quality Metrics: BERT automatically reports Average Silhouette Width (ASW) scores for batch and label. A successful correction is indicated by a low ASW Batch score (≤ 0 is desirable) and a high or preserved ASW Label score [31].

- Review Covariate Specification: Incorrectly specified covariates can cause biological signal to be removed along with the batch effect. Double-check the assignments in your `Cov_*` columns.

- Leverage References: If your dataset has severe design imbalances, use the `Reference` column to guide the correction process more effectively [30].

- Switch Adjustment Method: BERT defaults to the "ComBat" method. You can try the "limma" method, which may offer better performance in some scenarios [30].
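
To make the ASW scores easier to interpret, here is a small Python sketch of the standard average silhouette width on complete data with Euclidean distances. It is a textbook formulation written for illustration. BERT's own ASW computation, in particular how it treats missing values, may differ, and the function name is an assumption.

```python
import math
from collections import defaultdict


def average_silhouette_width(points, labels):
    """Mean silhouette width of `points` grouped by `labels`.

    Assumes complete numeric data (no missing values) and Euclidean distance.
    """
    groups = defaultdict(list)
    for index, label in enumerate(labels):
        groups[label].append(index)
    if len(groups) < 2:
        raise ValueError("at least two groups are required")

    widths = []
    for i, label in enumerate(labels):
        own = [j for j in groups[label] if j != i]
        if not own:
            widths.append(0.0)  # usual convention for singleton groups
            continue
        # a: mean distance to the other members of the sample's own group
        a = sum(math.dist(points[i], points[j]) for j in own) / len(own)
        # b: smallest mean distance to the members of any other group
        b = min(
            sum(math.dist(points[i], points[j]) for j in members) / len(members)
            for other, members in groups.items()
            if other != label
        )
        denominator = max(a, b)
        widths.append((b - a) / denominator if denominator > 0 else 0.0)
    return sum(widths) / len(widths)
```

Calling the function once with batch identifiers and once with biological labels on the same corrected matrix gives the two quantities discussed above: values near or below zero for the batch grouping indicate that samples no longer cluster by batch, while a high value for the label grouping indicates that biological structure is preserved.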

Issue 4: Long Execution Times on Large Datasets

Problem: The data integration process is taking too long.

Solution:

- Enable Parallelization: Use the `cores` parameter to leverage multi-core processors. A value between 2 and 4 is recommended for typical hardware.

- Optimize Parallelization Parameters: Adjust the `corereduction` and `stopParBatches` parameters for finer control over the parallelization workflow in large-scale analyses [31].

Experimental Protocols

Protocol 1: Benchmarking BERT Against HarmonizR Using Simulated Data

This protocol outlines the steps to reproduce the performance comparison between BERT and HarmonizR as described in the Nature Communications paper [30].

1. Data Simulation:

- Generate a complete data matrix with 6000 features and 20 batches, each containing 10 samples.

- Introduce two simulated biological conditions.

- Randomly set up to 50% of values as missing completely at random (MCAR) to create an incomplete omic profile.

2. Data Integration Execution:

- BERT: Apply the BERT algorithm to the simulated dataset using default parameters.

- HarmonizR: Process the same dataset using HarmonizR with different strategies: full dissection and blocking of 2 or 4 batches.

3. Performance Metric Calculation:

- Data Retention: Calculate the percentage of numeric values retained from the original dataset after each method's pre-processing.

- Runtime: Measure the sequential execution time for both methods.

- Correction Quality: Compute the Average Silhouette Width (ASW) with respect to both the batch of origin (ASW Batch) and the biological condition (ASW Label).
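
Steps 1 and 3 of this protocol can be prototyped at small scale before committing to the full benchmark. The Python sketch below generates batches with two alternating conditions, removes values completely at random, and computes data retention. The additive batch shift, the fixed condition effect of 2.0, and all function names are assumptions chosen for illustration. They are not the simulation model used in [30].

```python
import random


def simulate_batches(n_features, n_batches, samples_per_batch,
                     missing_fraction, seed=0):
    """Return (rows, feature_names) for a toy multi-batch dataset.

    Each row is a dict with "Batch", "Label", and one entry per feature;
    None stands in for a missing value.
    """
    rng = random.Random(seed)
    feature_names = [f"f{i}" for i in range(n_features)]
    rows = []
    for batch in range(1, n_batches + 1):
        batch_shift = rng.gauss(0.0, 1.0)  # assumed additive batch effect
        for sample in range(samples_per_batch):
            label = sample % 2  # two alternating biological conditions
            row = {"Batch": batch, "Label": label}
            for name in feature_names:
                value = rng.gauss(0.0, 1.0) + batch_shift + 2.0 * label
                # MCAR: every value is dropped with the same probability,
                # independent of its magnitude, batch, or label.
                missing = rng.random() < missing_fraction
                row[name] = None if missing else value
            rows.append(row)
    return rows, feature_names


def data_retention(original, processed, feature_names):
    """Percentage of numeric values in `original` still numeric in `processed`."""
    total = kept = 0
    for before, after in zip(original, processed):
        for name in feature_names:
            if before[name] is not None:
                total += 1
                if after.get(name) is not None:
                    kept += 1
    if total == 0:
        raise ValueError("original data contains no numeric values")
    return 100.0 * kept / total
```

For the dimensions given in the protocol, the call would be `simulate_batches(6000, 20, 10, 0.5)`. `data_retention` expects the processed rows in the same order as the original ones.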

Protocol 2: Basic BERT Workflow for a Standard Proteomics Dataset

1. Data Preparation:

- Format your protein abundance matrix into a dataframe. Ensure a "Batch" column exists and missing values are `NA`.

- (Optional) Add a "Label" column for biological conditions and/or "Cov_" columns for covariates.

2. Package Installation and Loading: Install BERT via Bioconductor and load the package.

3. Execute Batch-Effect Correction: Run BERT with basic parameters. The output will be a corrected dataframe mirroring the input structure.

4. Evaluate Results:

- Inspect the ASW Batch and ASW Label scores printed to the console.

- Proceed with downstream biological analysis using the corrected data.

Core Algorithm Workflow and Data Flow

The BERT algorithm follows a hierarchical tree structure that decomposes the integration task into pairwise correction steps.
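
The idea of breaking one large integration into pairwise steps arranged as a tree can be pictured with a short Python sketch. It only builds the schedule of which groups of batches would be combined at each level. The pairing of adjacent nodes and the handling of an odd node are assumptions made for illustration, and the actual batch-effect adjustment that BERT applies at each step is not modeled.

```python
def pairwise_tree_schedule(batches):
    """Return one list of (left, right) pairs per tree level.

    Each node is a tuple of the batch identifiers it already contains.
    """
    level = [(batch,) for batch in batches]
    schedule = []
    while len(level) > 1:
        steps, next_level = [], []
        for i in range(0, len(level) - 1, 2):
            left, right = level[i], level[i + 1]
            steps.append((left, right))      # one pairwise correction step
            next_level.append(left + right)  # result moves up one level
        if len(level) % 2 == 1:
            next_level.append(level[-1])     # odd node is carried up unchanged
        schedule.append(steps)
        level = next_level
    return schedule


plan = pairwise_tree_schedule([1, 2, 3, 4, 5])
```

For five batches this produces three levels and four pairwise steps in total. Steps within one level do not depend on each other, which is what makes a tree layout amenable to parallel execution.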

Research Reagent Solutions

Table: Essential Components for a BERT-Based Analysis

| Item | Function/Description | Key Consideration |
|---|---|---|
| Input Data Matrix | A dataframe or SummarizedExperiment object containing the raw, uncorrected feature measurements (e.g., protein abundances). | Must include a mandatory "Batch" column. Samples in rows, features in columns. |
| Batch Information | A column in the dataset assigning each sample to its batch of origin. | Critical for the algorithm. Each batch must have ≥2 samples. |
| Covariate Columns | Optional columns specifying biological conditions (e.g., disease state, treatment) to be preserved during correction. | Mutually exclusive with the Reference column. No NA values allowed. |
| Reference Column | Optional column specifying samples with known classes to guide correction when covariate distribution is imbalanced. | Mutually exclusive with covariate columns. |
| BERT R Package | The core software library implementing the algorithm. | Available via Bioconductor for easy installation and dependency management [31]. |
| High-Performance Computing (HPC) Resources | Multi-core processors or compute clusters. | Not mandatory but significantly speeds up large-scale integration via the `cores` parameter [30]. |

Frequently Asked Questions (FAQs)

General Standards & Interoperability

Q: What is the fundamental difference between HL7 v2 and FHIR?

A: HL7 v2 and FHIR represent different generations of healthcare data exchange standards. HL7 v2, developed in the 1980s, uses a pipe-delimited messaging format and is widely used for internal hospital system integration, but it has a steep learning curve and limited support for modern technologies like mobile apps [33]. FHIR (Fast Healthcare Interoperability Resources), released in 2014, uses modern web standards like RESTful APIs, JSON, and XML. It is designed to be faster to learn and implement, more flexible, and better suited for patient-facing applications and real-time data access [33] [34].

Q: How does semantic harmonization address data integration challenges in research?

A: Semantic harmonization is the process of collating data from different institutions and formats into a singular, consistent logical view. It addresses core challenges in research by ensuring that data from multiple sources shares a common meaning, enabling researchers to ask single questions across combined datasets without modifying queries for each source. This is particularly vital for cohort data, which is often specific to a study's focus area [35].

Q: What are the common technical challenges when implementing FHIR APIs?

A: Common challenges include handling rate limits (which return HTTP status code 429), implementing efficient paging for large datasets, avoiding duplicate API calls, and ensuring proper data formatting when posting documents. It is also critical to use query parameters for filtering data on the server side rather than performing post-query filtering client-side to improve performance and reliability [36].

Implementation & Troubleshooting

Q: My FHIR API calls are being throttled. How should I handle this?

A: If you receive a 429 (Too Many Requests) status code, you should implement a progressive retry strategy [36]:

- Pause: Wait briefly before retrying.

- Retry with Exponential Backoff: Retry once after a short delay (e.g., one second). If unsuccessful, double the delay for each subsequent retry (e.g., two seconds, then four).

- Limit Retries: Set a maximum number of retry attempts (e.g., three) to avoid overwhelming the service.
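
The retry strategy above can be sketched as follows in Python. The function `call_with_backoff` and its parameters are illustrative names, and the HTTP request itself is passed in as a callable, so no particular client library or server behavior is assumed.

```python
import time


def call_with_backoff(send, max_retries=3, initial_delay=1.0, sleep=time.sleep):
    """Call `send()` and retry on HTTP 429, doubling the wait each time.

    `send` is any zero-argument callable returning (status_code, body).
    `sleep` is injectable so the logic can be exercised without waiting.
    """
    delay = initial_delay
    status, body = send()
    for _ in range(max_retries):
        if status != 429:
            break
        sleep(delay)  # pause before retrying
        delay *= 2    # exponential backoff: 1 s, 2 s, 4 s, ...
        status, body = send()
    return status, body
```

If the last permitted retry still returns 429, the function hands that status back to the caller, who can then decide whether to surface the error or reschedule the work. Some servers also send a `Retry-After` header; honoring it would be a natural extension of this sketch.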

Q: Why does my application not retrieve all patient data when using the FHIR API?

A: This is likely because your application does not handle paging. Queries on some FHIR resources can return large data sets split across multiple "pages." Your application must implement logic to navigate these pages using the _count parameter and the links provided in the Bundle resource response to retrieve the complete dataset [36].
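
A minimal Python sketch of this paging logic follows. It relies on the standard FHIR Bundle structure, in which `link` entries carry a `relation` and a `url`, and follows the link whose relation is `next` until none is returned. The function name, the `fetch_bundle` callable, and the `max_pages` safety limit are assumptions introduced for illustration.

```python
def fetch_all_entries(first_url, fetch_bundle, max_pages=1000):
    """Collect the entries of every page of a FHIR search result.

    `fetch_bundle` is any callable that takes a URL and returns the parsed
    Bundle as a dict (for example, a wrapper around an HTTP client).
    """
    entries = []
    url = first_url
    pages = 0
    while url is not None and pages < max_pages:
        bundle = fetch_bundle(url)
        entries.extend(bundle.get("entry", []))
        # The server advertises the following page, if any, as a "next" link.
        url = next(
            (link["url"] for link in bundle.get("link", [])
             if link.get("relation") == "next"),
            None,
        )
        pages += 1
    return entries
```

The first URL would typically include `_count` to set the page size. Subsequent URLs should be taken verbatim from the Bundle rather than constructed by the client.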

Q: What are the common issues when posting clinical documents via the DocumentReference resource?

A: When posting clinical notes, ensure your application follows these guidelines [36]:

- Format: Use XHTML-formatted documents, as only XHTML and HTML5 are supported. Tags like `<br>` must be self-closed (`<br />`).

- Sanitization: Be aware that tags like `script`, `style`, and `iframe` are removed during processing.

- Images: Do not use external image links. All images must be embedded as Base64-encoded files.

- Testing: Validate your XHTML using an XHTML 1.0 strict validator before posting.
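
Some of these guidelines can be screened automatically before a document is posted. The Python sketch below checks XML well-formedness (which catches unclosed tags such as a bare `<br>`), flags tags that would be stripped, and flags images that are not embedded. It is a rough pre-check under stated assumptions: embedded images are assumed to use `data:` URIs, the function name is hypothetical, and a well-formedness check is not a substitute for a full XHTML 1.0 strict validator.

```python
import xml.etree.ElementTree as ET

FORBIDDEN_TAGS = {"script", "style", "iframe"}


def precheck_xhtml(document):
    """Return a list of problems found in an XHTML document string."""
    try:
        root = ET.fromstring(document)
    except ET.ParseError as exc:
        # Unclosed or mismatched tags end up here.
        return [f"not well-formed XML: {exc}"]

    problems = []
    for element in root.iter():
        tag = element.tag.rsplit("}", 1)[-1].lower()  # drop any XML namespace
        if tag in FORBIDDEN_TAGS:
            problems.append(f"<{tag}> will be removed during processing")
        if tag == "img" and not element.get("src", "").startswith("data:"):
            problems.append("<img> does not use an embedded data: URI")
    return problems
```

Note that this parser rejects named HTML entities such as `&nbsp;` unless they are declared, so numeric character references are the safer choice in documents that must pass this check.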

Q: My queries for specific LOINC codes are not returning data from all hospital sites. Why?

A: This is a common mapping issue. Different hospitals may use proprietary codes that map to different, more specific LOINC codes. For example, a test for "lead" might map to the general code 5671-3 at one hospital and the more specific 77307-7 at another [36]. To resolve this:

- Broaden your query to include all possible LOINC codes that represent the same clinical concept.

- Work with the EHR vendor or site to understand the specific code mappings used at each installation.
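
Broadening a query to several LOINC codes can be done in a single request, because FHIR search treats comma-separated values of one parameter as a logical OR. The Python sketch below builds such a URL for the two lead codes mentioned above. The base URL, the patient identifier, and the function name are placeholders, and whether a given server supports the `patient` and `code` search parameters on Observation should be confirmed against its capability statement.

```python
from urllib.parse import urlencode

LOINC_SYSTEM = "http://loinc.org"


def observation_query(base_url, patient_id, loinc_codes):
    """Build an Observation search URL matching any of `loinc_codes`."""
    # Each token has the form system|code; commas join alternatives (OR).
    tokens = ",".join(f"{LOINC_SYSTEM}|{code}" for code in loinc_codes)
    params = {"patient": patient_id, "code": tokens}
    return f"{base_url}/Observation?{urlencode(params)}"


url = observation_query(
    "https://fhir.example.org/r4",  # placeholder endpoint
    "12345",                        # placeholder patient id
    ["5671-3", "77307-7"],
)
```

The reserved characters in the token values are percent-encoded by `urlencode`, and the server decodes them before interpreting the search.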

Troubleshooting Guides

Guide 1: Resolving Data Mapping and Terminology Issues

Problem: Queries using standardized codes (like LOINC or SNOMED CT) fail to return consistent results across different data sources, a frequent issue in multi-center studies [36].

Investigation and Resolution:

- Identify the Discrepancy: Confirm that the same clinical concept is being queried across all systems.

- Profile the Source Data: Examine the source systems to understand the proprietary codes and their mappings to standard terminologies. This is part of the "Data Discovery and Profiling" step in semantic harmonization [37].

- Expand Value Sets: Do not rely on a single code. Broaden your query to include all relevant codes from the standard terminology that represent the same concept. Utilize value sets (subsets of a terminology selected for a particular function), which are central to FHIR's approach to semantic interoperability [38].

- Leverage a Common Data Model (CDM): For complex research projects, define or adopt a CDM. This serves as a universal schema. The mapping of local codes to the standardized concepts in the CDM ensures semantic consistency across all data sources [37].
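
The mapping step can be illustrated with a small Python sketch in which each site's local codes are translated to one shared concept identifier and anything unmapped is set aside for review. The site names, local codes, and the concept identifier `blood_lead` are invented for this example and do not correspond to any real installation or to a specific CDM.

```python
def harmonize(records, site_mappings):
    """Split `records` into harmonized and unmapped lists.

    `site_mappings` maps a site name to a dict of local code -> shared concept.
    """
    harmonized, unmapped = [], []
    for record in records:
        concept = site_mappings.get(record["site"], {}).get(record["local_code"])
        if concept is None:
            unmapped.append(record)  # needs manual mapping review
        else:
            harmonized.append({**record, "concept": concept})
    return harmonized, unmapped


# Hypothetical mappings: two sites use different local codes for one concept.
mappings = {
    "site_a": {"LAB-PB-01": "blood_lead"},
    "site_b": {"4471": "blood_lead"},
}
records = [
    {"site": "site_a", "local_code": "LAB-PB-01", "value": 3.1},
    {"site": "site_b", "local_code": "4471", "value": 2.4},
    {"site": "site_b", "local_code": "9999", "value": 7.0},
]
mapped, needs_review = harmonize(records, mappings)
```

Keeping the unmapped records rather than silently dropping them makes gaps in the terminology mapping visible, which is usually where cross-site discrepancies originate.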

Guide 2: Debugging FHIR API Performance and Integration

Problem: FHIR API interactions are slow, return incomplete data, or fail with errors, hindering data retrieval for analysis.

Investigation and Resolution:

- Check for Rate Limiting: Monitor for HTTP 429 status codes. Implement the exponential backoff retry strategy as described in the FAQs [36].

- Verify Paging Implementation: Ensure your application correctly handles paged results. Test with a patient known to have a large dataset and use the `_count` parameter to force paging on smaller result sets [36].

- Audit API Call Efficiency: Review your application's code to eliminate duplicate API calls. A single, well-constructed query is more efficient than multiple calls with client-side filtering [36].

- Validate Query Parameters: Use the query parameters defined by the FHIR API specification instead of fetching large datasets and filtering them in your application. This shifts the filtering workload to the server, which is optimized for this task [36].

- Inspect Resource Content: For posting data, meticulously validate the structure and content of your FHIR resources against the FHIR specification and any applicable implementation guides (e.g., US Core Profiles) [38].

Experimental Protocols & Methodologies