A Practical Framework for Validating Analytical Methods for Inorganic Compounds

Abstract

This article provides a comprehensive guide to analytical method validation for inorganic compounds, tailored for researchers, scientists, and drug development professionals. It covers foundational principles, including the definition of key performance parameters such as accuracy, precision, and specificity according to ICH Q2(R1) and USP guidelines. The scope extends to advanced methodological applications using techniques such as ICP-MS and IC, troubleshooting for emerging contaminants and matrix effects, and a comparative review of validation strategies that support regulatory compliance and data integrity across the pharmaceutical, environmental, and materials science fields.

Core Principles and Regulatory Requirements for Inorganic Analysis

Defining Analytical Method Validation in a Regulated Environment

In the highly regulated pharmaceutical industry, analytical method validation is a formal, systematic process that proves the reliability and suitability of every test used to examine drug substances and products [1]. It provides documented evidence that an analytical procedure is fit for its intended purpose, ensuring the identity, potency, quality, purity, and consistency of pharmaceutical compounds [2] [3]. Regulatory authorities worldwide mandate validation to formally demonstrate that an assay method provides dependable, consistent data to ensure product safety and efficacy [1] [4].

For researchers working with organic compounds, method validation transforms a laboratory procedure into a trusted scientific tool capable of generating defensible data for regulatory submissions. The process establishes, through laboratory studies, that the performance characteristics of the method meet requirements for the intended analytical application [2]. In organic chemistry research, this is particularly crucial for quantifying active pharmaceutical ingredients (APIs), identifying impurities, and ensuring batch-to-batch consistency throughout the drug development lifecycle.

Core Principles and Regulatory Framework

The International Council for Harmonisation (ICH) Guidelines

The ICH guidelines provide the primary international framework for analytical method validation, with ICH Q2(R2) representing the current standard [3]. This guideline harmonizes requirements across regulatory bodies including the FDA (Food and Drug Administration) and EMA (European Medicines Agency), offering a standardized approach to validating analytical procedures [4]. The recent update from Q2(R1) to Q2(R2) expands the scope to include modern analytical technologies and provides more detailed guidance on performance characteristics [5] [4].

ICH Q14 complements Q2(R2) by introducing a structured approach to analytical procedure development, emphasizing science- and risk-based methodologies, prior knowledge utilization, and lifecycle management [3]. Together, these documents establish that validation must demonstrate a method can successfully measure the desired attribute of an organic compound without interference from the complex matrix in which it exists [6].

Key Validation Parameters and Their Significance

Analytical method validation requires testing multiple attributes to confirm the method provides useful and valid data when used routinely [6]. The specific parameters evaluated depend on the method's intended purpose, but core characteristics have been established through international consensus.

Table 1: Core Analytical Performance Characteristics and Their Definitions

| Performance Characteristic | Definition | Significance in Organic Compound Analysis |
|---|---|---|
| Specificity/Selectivity | Ability to measure the analyte accurately in the presence of other components [6] [1] | Confirms the method can distinguish and quantify the target organic compound from impurities, degradants, or matrix components [7] |
| Accuracy | Closeness of agreement between the value obtained by the method and the true value [6] [1] | Demonstrates the method yields results close to the true value for the organic compound, often shown through recovery studies [7] |
| Precision | Closeness of agreement among a series of measurements from multiple samplings [6] [1] | Quantifies the method's random variation, including repeatability and intermediate precision [7] |
| Linearity | Ability to produce test results directly proportional to analyte concentration [1] [7] | Establishes the method's proportional response across a defined range for quantification [6] |
| Range | Interval between upper and lower concentration levels with demonstrated precision, accuracy, and linearity [6] [1] | Defines the concentration boundaries where the method performs satisfactorily for the organic analyte [7] |
| Limit of Detection (LOD) | Lowest amount of analyte that can be detected [6] [1] | Important for impurity identification in organic compounds [1] |
| Limit of Quantitation (LOQ) | Lowest amount of analyte that can be quantified with acceptable accuracy and precision [6] [1] | Critical for quantifying low-level impurities or degradants in organic compounds [7] |
| Robustness | Capacity to remain unaffected by small, deliberate variations in method parameters [7] [3] | Measures method reliability under normal operational variations [3] |

Experimental Design and Protocols for Method Validation

Validation Experimental Workflow

The validation process follows a structured workflow from initial planning through protocol execution and data analysis. This systematic approach ensures all performance characteristics are thoroughly evaluated against predefined acceptance criteria.

Detailed Methodologies for Key Validation Experiments

Accuracy Determination Protocol

Purpose: To demonstrate that the method yields results close to the true value for the organic compound [7].

Experimental Design:

- Prepare a minimum of 3 concentration levels covering the reportable range (typically 50-150% of target concentration) with 3 replicates each [7]

- For drug products, spike known quantities of analyte into synthetic placebo matrix containing all components except the analyte [7]

- Process samples using the complete analytical procedure including sample preparation

- Compare measured values to theoretical concentrations or results from an established reference method [6]

Calculations:

- Calculate percent recovery for each spike level: Recovery (%) = (Measured Concentration / Theoretical Concentration) × 100

- Determine overall mean recovery and confidence intervals

- Acceptance criteria typically require mean recovery of 98-102% for APIs with RSD ≤ 2% [3]
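As an illustration of the calculations above, the following Python sketch computes percent recovery per spike level and checks each level against the 98-102% recovery and RSD ≤ 2% criteria quoted in this section. The spike data, the units, and the `summarize` helper are hypothetical and chosen only for illustration; actual limits should come from the approved validation protocol.

```python
from statistics import mean, stdev

def percent_recovery(measured, theoretical):
    """Recovery (%) = measured / theoretical x 100."""
    return measured / theoretical * 100.0

# Hypothetical spike-recovery data as (theoretical, measured) in ug/mL:
# three levels (% of target) with three replicates each.
spikes = {
    50: [(5.0, 4.96), (5.0, 5.03), (5.0, 4.99)],
    100: [(10.0, 10.05), (10.0, 9.97), (10.0, 10.02)],
    150: [(15.0, 14.91), (15.0, 15.08), (15.0, 15.01)],
}

def summarize(spike_data):
    """Return {level: (mean recovery %, %RSD of recoveries)}."""
    summary = {}
    for level, pairs in spike_data.items():
        recoveries = [percent_recovery(m, t) for t, m in pairs]
        avg = mean(recoveries)
        summary[level] = (avg, stdev(recoveries) / avg * 100.0)
    return summary

for level, (avg, rsd) in summarize(spikes).items():
    passed = 98.0 <= avg <= 102.0 and rsd <= 2.0
    print(f"{level}% level: mean recovery {avg:.2f}%, RSD {rsd:.2f}%, pass={passed}")
```

Keeping the per-level summary separate from the pass/fail check makes it easy to swap in different acceptance limits, for example the wider recovery windows often used for trace-level analytes.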

Precision Studies Protocol

Purpose: To quantify the method's random variation at multiple levels [7].

Repeatability (Intra-assay Precision):

- Analyze a minimum of 6 determinations at 100% test concentration or 3 concentrations × 3 replicates covering the reportable range [7]

- Perform under same operating conditions by same analyst over short time interval

- Calculate mean, standard deviation, and %RSD (relative standard deviation)

Intermediate Precision (Ruggedness):

- Incorporate variations: different days, different analysts, different equipment [7]

- Design studies to evaluate the impact of each factor on results

- Express results as standard deviation, RSD, and confidence intervals

Calculations:

- Calculate %RSD = (Standard Deviation / Mean) × 100

- Compare obtained RSD to predefined acceptance criteria (often ≤2% for assay methods) [3]

- The Horwitz equation may provide guidance: %RSDr = 0.67 × 2^(1 - 0.5 log C), where C is the concentration expressed as a decimal fraction [6]
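The precision calculations can be sketched in a few lines of Python. The replicate values below are hypothetical, the logarithm in the Horwitz expression is taken as base 10, and the 0.67 factor is simply the one quoted in this section.

```python
import math
from statistics import mean, stdev

def percent_rsd(values):
    """%RSD = (sample standard deviation / mean) x 100."""
    return stdev(values) / mean(values) * 100.0

def horwitz_rsd_r(mass_fraction, repeatability_factor=0.67):
    """Predicted repeatability %RSD: factor x 2^(1 - 0.5 log10 C)."""
    return repeatability_factor * 2 ** (1 - 0.5 * math.log10(mass_fraction))

# Hypothetical repeatability data: six assay results (% of label claim).
replicates = [99.8, 100.4, 100.1, 99.6, 100.3, 99.9]
rsd = percent_rsd(replicates)
print(f"observed %RSD = {rsd:.2f}, meets <=2% criterion: {rsd <= 2.0}")

# Horwitz guidance for a trace analyte at a mass fraction of 1e-6 (1 ppm).
print(f"Horwitz guidance at C = 1e-6: {horwitz_rsd_r(1e-6):.1f}%")
```

The Horwitz value grows as the concentration falls, which is why a 2% limit that suits an assay is usually unrealistic for trace-level determinations.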

Linearity and Range Establishment Protocol

Purpose: To demonstrate the method produces results proportional to analyte concentration [7].

Experimental Design:

- Prepare a minimum of 5 concentration levels appropriately distributed across the working range [7]

- For assay methods, typically 50-150% of target concentration [6]

- Analyze each solution minimum twice and record instrument response [6]

- Plot concentration versus response and evaluate using appropriate statistical methods

Calculations:

- Calculate regression line using least squares method: y = bx + a [6]

- Determine correlation coefficient (r), slope (b), and y-intercept (a)

- Evaluate residual plots to confirm calibration model suitability [4]

- Acceptance typically requires a coefficient of determination (R²) above a threshold set between 0.995 and 0.999, depending on application [7]
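A minimal sketch of the regression step, using only the Python standard library, is shown below. The calibration data are hypothetical, and `linear_fit` is an illustrative helper rather than part of any cited guideline.

```python
from statistics import mean

def linear_fit(x, y):
    """Ordinary least squares for y = b*x + a; returns (b, a, r)."""
    xm, ym = mean(x), mean(y)
    sxx = sum((xi - xm) ** 2 for xi in x)
    syy = sum((yi - ym) ** 2 for yi in y)
    sxy = sum((xi - xm) * (yi - ym) for xi, yi in zip(x, y))
    b = sxy / sxx                    # slope
    a = ym - b * xm                  # y-intercept
    r = sxy / (sxx * syy) ** 0.5     # correlation coefficient
    return b, a, r

# Hypothetical calibration: five levels (% of target) vs. instrument response.
conc = [50, 75, 100, 125, 150]
resp = [1012, 1498, 2005, 2493, 3001]

b, a, r = linear_fit(conc, resp)
residuals = [yi - (b * xi + a) for xi, yi in zip(conc, resp)]

print(f"slope b = {b:.3f}, intercept a = {a:.2f}, r = {r:.5f}, R^2 = {r * r:.5f}")
print("residuals:", [round(e, 1) for e in residuals])
```

Printing the residuals next to R² matters because a high R² can hide curvature; a run of residuals with the same sign at one end of the range suggests the linear model may not be adequate there.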

Specificity/Selectivity Validation Protocol

Purpose: To demonstrate the method can accurately measure the analyte in the presence of other components [1].

Experimental Design for Organic Compounds:

- Analyze chromatographic blanks to identify interference in expected retention window [6]

- Inject individual impurities, degradants, or matrix components to determine resolution

- Perform forced degradation studies (acid/base, oxidation, heat, light) to assess interference from degradants

- For chromatographic methods, ensure resolution factor ≥ 2.0 between critical pairs

Evaluation Criteria:

- Peak purity assessment using diode array or mass spectrometric detection

- Baseline separation between analyte and closest eluting potential interferent

- No interference at retention time of analyte from blank matrix
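For the resolution criterion mentioned in the experimental design, the sketch below uses the common baseline-width definition Rs = 2(t2 - t1)/(w1 + w2). This particular formula is an assumption here, since the article does not state which definition of resolution it uses, and the retention times and widths are hypothetical.

```python
def resolution(t1, t2, w1, w2):
    """Rs = 2 (t2 - t1) / (w1 + w2), with baseline peak widths in time units."""
    return 2.0 * (t2 - t1) / (w1 + w2)

# Hypothetical critical pair: retention times and baseline widths in minutes.
rs = resolution(t1=5.0, t2=6.0, w1=0.4, w2=0.6)
print(f"Rs = {rs:.2f}, meets Rs >= 2.0: {rs >= 2.0}")
```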

Comparative Analysis of Validation Requirements by Method Type

The validation parameters required depend on the analytical method's intended purpose. Regulatory guidelines define different requirements for identification tests, impurity procedures, and assay methods.

Table 2: Validation Requirements by Analytical Method Type (per ICH Guidelines)

| Validation Characteristic | Identification Tests | Testing for Impurities | Assay of Drug Substance/Product |
|---|---|---|---|
| Specificity/Selectivity | Yes [1] | Yes [1] | Yes [1] |
| Accuracy | Not required | Yes [1] | Yes [1] |
| Precision | Not required | Yes [1] | Yes [1] |
| Linearity | Not required | Yes [1] | Yes [1] |
| Range | Not required | Yes [1] | Yes [1] |
| LOD | Not required | Yes (for limit tests) [1] | Not required |
| LOQ | Not required | Yes (for quantification) [1] | Not required |

Application-Specific Validation Considerations

HPLC Assay Validation for Organic Compounds

Typical Acceptance Criteria:

- Accuracy: 98-102% recovery [3]

- Precision: RSD ≤ 1.5% for repeatability [3]

- Linearity: R² > 0.995 across specified range [7]

- Specificity: Baseline resolution (R ≥ 2.0) from closest eluting potential interferent

Range Considerations:

- For drug substance and product assay: 80-120% of test concentration [7]

- For content uniformity: 70-130% of test concentration [7]

- For dissolution testing: ±20% over entire specification range [7]

Impurity Method Validation for Organic Compounds

Typical Acceptance Criteria:

- Accuracy: Recovery 90-110% depending on impurity level

- LOQ: Sufficient to detect and quantify at reporting threshold (typically 0.05-0.1%)

- Precision: RSD ≤ 10% at LOQ level

- Range: From reporting level to 120% of impurity specification [7]

Essential Research Reagent Solutions for Validation Studies

Successful method validation requires high-quality materials and reagents to ensure reliable results. The following table outlines essential solutions for validating methods analyzing organic compounds.

Table 3: Essential Research Reagent Solutions for Method Validation

| Reagent/Material | Function in Validation | Quality Requirements |
|---|---|---|
| Reference Standards | Quantification and method calibration [7] | Well-characterized, known purity and stability, traceable to certified reference materials |
| Chromatography Columns | Compound separation and specificity demonstration | Multiple columns from different lots to evaluate robustness [2] |
| MS-Grade Mobile Phase Additives | Mass spectrometric detection with minimal background interference | Low UV cutoff, LC-MS compatible to prevent ion suppression [7] |
| Placebo/Blank Matrix | Specificity and selectivity assessment | Representative of sample matrix without target analytes [7] |
| Forced Degradation Reagents | Specificity evaluation under stress conditions | ACS grade or higher for controlled degradation studies |

Method Validation Lifecycle and Relationship to Broader Quality Systems

Method validation exists within a comprehensive quality framework that encompasses both quality control and quality assurance [2]. The relationship between these elements and the method validation lifecycle demonstrates how validation fits within regulated analytical environments.

The Validation Lifecycle in Practice

Modern method validation embraces a lifecycle approach as outlined in ICH Q14, recognizing that methods may require updates as manufacturing processes change or new technologies emerge [3]. This includes:

- Phase-appropriate validation with increasing rigor through development [4]

- Ongoing method monitoring during routine use to ensure continued suitability

- Method transfer between laboratories requiring demonstration of reproducibility [1]

- Revalidation when changes occur outside original scope or method parameters change significantly [1]

Analytical method validation represents a cornerstone of pharmaceutical quality systems, providing scientific evidence that analytical methods consistently produce reliable results for their intended applications [6] [2]. For researchers analyzing organic compounds, understanding validation principles and methodologies is essential for generating data that meets regulatory standards.

The evolving regulatory landscape, particularly with the implementation of ICH Q2(R2) and Q14, emphasizes science- and risk-based approaches to validation [4] [3]. This framework allows method developers to focus validation efforts on parameters most critical to method performance while building quality into methods from initial development.

As analytical technologies advance, the fundamentals of method validation remain constant: demonstrating through documented evidence that a method is suitable for its intended purpose [2]. By systematically addressing each performance characteristic with appropriate experimental protocols, researchers can ensure their analytical methods for organic compounds will withstand regulatory scrutiny while providing the data quality necessary to make informed decisions throughout the drug development process.

Analytical method validation is a fundamental process in pharmaceutical analysis and research, establishing through documented evidence that a method is consistently fit for its intended purpose [8]. It ensures that analytical results are accurate, reliable, and reproducible, providing confidence in the quality assessment of drug substances and products [9]. For researchers working with inorganic compounds, a thoroughly validated method is not merely a regulatory requirement but a scientific necessity for generating dependable chemical data [6]. Regulatory bodies including the FDA, EMA, and ICH have established strict guidelines, with ICH Q2(R1) serving as the primary international standard for validating analytical procedures [9] [10].

The selection of which validation parameters to evaluate depends on the nature of the analytical procedure. As outlined in ICH guidelines, identification tests, impurity quantitation tests, impurity limit tests, and assay tests each require different combinations of validated parameters [8]. This guide focuses on seven key parameters—accuracy, precision, specificity, LOD, LOQ, linearity, and robustness—providing researchers with comparison criteria, experimental protocols, and practical implementation strategies tailored to inorganic compounds research.

Core Parameters & Comparison Guide

Definition of Key Parameters

Accuracy refers to the closeness of agreement between the measured value obtained by the method and the true value (or an accepted reference value) [6] [10]. It indicates a method's freedom from systematic error or bias.

Precision expresses the degree of scatter between a series of measurements obtained from multiple sampling of the same homogeneous sample under prescribed conditions [6] [8]. It encompasses repeatability, intermediate precision, and reproducibility.

Specificity is the ability of a method to assess unequivocally the analyte in the presence of components that may be expected to be present, such as impurities, degradation products, or matrix components [9] [8].

Limit of Detection (LOD) is the lowest concentration of an analyte that can be reliably detected, but not necessarily quantified, under the stated experimental conditions [9] [11].

Limit of Quantitation (LOQ) is the lowest concentration of an analyte that can be quantitatively determined with suitable precision and accuracy [9] [11].

Linearity is the method's ability to elicit test results that are directly proportional to analyte concentration within a given range, or proportional by means of well-defined mathematical transformations [6] [8].

Robustness measures the capacity of a method to remain unaffected by small, deliberate variations in method parameters, providing an indication of its reliability during normal usage [9] [8].

Acceptance Criteria Comparison

Table 1: Standard Acceptance Criteria for Key Validation Parameters

| Parameter | Sub-category | Common Acceptance Criteria | Advanced Criteria (Tolerance-Based) |
|---|---|---|---|
| Accuracy | Recovery studies | 98-102% recovery for assay methods [10] | ≤10% of specification tolerance [12] |
| Precision | Repeatability | %RSD ≤ 2% for assay [9] [8] | ≤25% of specification tolerance [12] |
| Precision | Intermediate Precision | %RSD ≤ 2% for assay [8] | Similar to repeatability relative to tolerance [12] |
| Specificity | Forced degradation | No co-elution; Peak purity passes [8] | Measurement bias ≤10% of tolerance [12] |
| Linearity | Correlation | R² ≥ 0.99 [9] [10] | No systematic pattern in residuals [12] |
| Range | Working range | Established from linearity data [6] | ≤120% of USL with demonstrated linearity/accuracy [12] |
| LOD | Signal-to-noise | S/N ≥ 3:1 [9] [11] | ≤5-10% of specification tolerance [12] |
| LOQ | Signal-to-noise | S/N ≥ 10:1 [9] [11] | ≤15-20% of specification tolerance [12] |

Table 2: Parameter Requirements by Analytical Procedure Type (Based on ICH Q2(R1))

| Parameter | Identification | Impurities Testing (Quantitative) | Impurities Testing (Limit) | Assay |
|---|---|---|---|---|
| Accuracy | - | + | - | + |
| Precision | - | + | - | + |
| Specificity | + | + | + | + |
| LOD | - | - | + | - |
| LOQ | - | + | - | - |
| Linearity | - | + | - | + |
| Range | - | + | - | + |
| Robustness | +* | +* | +* | +* |

Note: + signifies normally evaluated; - signifies not normally evaluated; +* indicates the parameter should be considered throughout development [8]
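The requirements table above lends itself to a simple lookup when planning a validation study. The sketch below encodes only the "+" entries from the table; robustness is left out because the table marks it as a development-stage consideration. The dictionary keys and the `missing_parameters` helper are hypothetical names chosen for illustration.

```python
# Parameters normally evaluated for each procedure type, transcribed
# from the "+" entries of the table above.
REQUIRED = {
    "identification": {"specificity"},
    "impurities_quantitative": {
        "accuracy", "precision", "specificity", "loq", "linearity", "range",
    },
    "impurities_limit": {"specificity", "lod"},
    "assay": {"accuracy", "precision", "specificity", "linearity", "range"},
}

def missing_parameters(procedure_type, completed):
    """Return the normally evaluated parameters not yet covered, sorted by name."""
    return sorted(REQUIRED[procedure_type] - set(completed))

print(missing_parameters("assay", ["accuracy", "specificity"]))
print(missing_parameters("impurities_limit", ["specificity", "lod"]))
```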

Experimental Protocols & Methodologies

Accuracy Evaluation Protocol

Experimental Design: Accuracy is typically evaluated using a recovery study, where known amounts of a reference standard of the analyte are spiked into a placebo or sample matrix [8]. For inorganic compound analysis, this might involve spiking known concentrations into a simulated matrix containing common excipients or interfering ions.

Procedure:

- Prepare a minimum of 9 determinations across a minimum of 3 concentration levels (e.g., 80%, 100%, 120% of target concentration) covering the specified range [8].

- For each level, prepare three separate samples and analyze using the method being validated.

- Include appropriate blank and placebo samples to account for matrix effects.

- Calculate the percentage recovery for each concentration using the formula: Recovery (%) = (Measured Concentration / Theoretical Concentration) × 100 [6].

Data Interpretation: The mean recovery at each level should typically fall within 98-102% for assay methods, with precision (RSD) also meeting pre-defined criteria [10]. For tolerance-based evaluation, calculate bias as a percentage of the specification tolerance (Bias% Tolerance = Bias/Tolerance × 100), with ≤10% considered acceptable for analytical methods [12].
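The tolerance-based check can be sketched as follows. The sketch assumes a two-sided specification so that the tolerance is USL - LSL, and it takes the absolute value of the bias so that the result can be compared directly with the 10% limit; both choices are assumptions, as are the numbers.

```python
def bias_percent_of_tolerance(measured_mean, reference, lsl, usl):
    """Bias % Tolerance = |bias| / (USL - LSL) x 100 for a two-sided spec."""
    bias = measured_mean - reference
    return abs(bias) / (usl - lsl) * 100.0

# Hypothetical assay: reference value 100.0%, specification 95.0-105.0%.
value = bias_percent_of_tolerance(measured_mean=100.5, reference=100.0,
                                  lsl=95.0, usl=105.0)
print(f"bias = {value:.1f}% of tolerance, acceptable: {value <= 10.0}")
```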

Precision Assessment Protocol

Experimental Design: Precision is evaluated at multiple levels, with repeatability (intra-assay precision) being the most fundamental. Intermediate precision assesses variations within a laboratory (different days, analysts, equipment), while reproducibility evaluates precision between laboratories [8].

Procedure for Repeatability:

- Prepare a minimum of 6 independent sample preparations at 100% of the test concentration [8] [10].

- Analyze all preparations using the same instrument, analyst, and conditions.

- Calculate the mean, standard deviation, and relative standard deviation (%RSD) of the results.

- For chromatographic methods, multiple injections of the same preparation can assess instrument precision, but sample preparation precision requires independent preparations.

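Data Interpretation: For assay methods, the %RSD for repeatability should typically be ≤ 2% [9] [8]. The Horwitz equation provides an alternative statistical approach for estimating expected precision: the predicted reproducibility RSD (%) is 2C^-0.15, equivalently 2^(1 - 0.5 log C), where C is the concentration expressed as a mass fraction [6]. Because this expression describes between-laboratory variation, the expected repeatability RSDr is commonly taken as a fraction of it, about 0.67 of the predicted value. For advanced tolerance-based evaluation, calculate Repeatability % Tolerance = (Standard Deviation × 5.15) / (USL - LSL) × 100, with ≤25% considered acceptable [12].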
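A short sketch of the tolerance-based repeatability calculation follows. The replicate values and specification limits are hypothetical, and the result is scaled to a percentage so it can be compared with the 25% limit.

```python
from statistics import stdev

def repeatability_percent_of_tolerance(values, lsl, usl, k=5.15):
    """(sample SD x k) / (USL - LSL) x 100, with k = 5.15 as quoted above."""
    return stdev(values) * k / (usl - lsl) * 100.0

# Hypothetical six replicate results (% label claim), specification 95-105%.
replicates = [99.8, 100.4, 100.1, 99.6, 100.3, 99.9]
pct = repeatability_percent_of_tolerance(replicates, lsl=95.0, usl=105.0)
print(f"repeatability = {pct:.1f}% of tolerance, acceptable: {pct <= 25.0}")
```

Unlike %RSD, this figure depends on how wide the specification is, so the same measurement scatter can be acceptable for one product and not for another.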

Specificity Demonstration Protocol

Experimental Design: For chromatographic methods, specificity is demonstrated by showing that the analyte peak is unaffected by other components and that the method can discriminate between the analyte and closely eluting compounds [9].

Procedure for Stability-Indicating Methods:

- Conduct forced degradation studies on the sample under relevant stress conditions: light, heat, humidity, acid/base hydrolysis, and oxidation [8].

- Target 5-20% degradation of the active ingredient to generate relevant degradation products [8].

- Analyze stressed samples alongside appropriate blanks, placebos, and unstressed controls.

- Demonstrate that the analyte peak is pure and free from co-eluting peaks using diode array detection (DAD) or mass spectrometry for peak purity analysis [9] [8].

Data Interpretation: For assay methods, compare results of stressed samples with unstressed controls. There should be no co-elution between the analyte and impurities, degradation products, or matrix components [8]. The peak purity should pass established thresholds. For identification methods, demonstrate 100% detection rate with established confidence limits [12].

LOD and LOQ Determination Protocol

Experimental Approaches: Multiple approaches exist for determining LOD and LOQ, with the most common being:

- Signal-to-Noise Ratio: Typically 3:1 for LOD and 10:1 for LOQ, applicable mainly to chromatographic methods [9] [11].

- Standard Deviation of Response and Slope: LOD = 3.3σ/S and LOQ = 10σ/S, where σ is the standard deviation of the response and S is the slope of the calibration curve [8] [10].

Procedure for Standard Deviation Method:

- Prepare a series of standard solutions at low concentrations near the expected detection/quantitation limits.

- Analyze each solution multiple times (minimum n=5-6) and record the instrument response.

- Calculate the standard deviation of the response (σ) from the replicates.

- Determine the slope (S) of the calibration curve in the low concentration region.

- Apply the formulas to calculate LOD and LOQ values [8].

Data Interpretation: The calculated LOD and LOQ should be appropriate for the intended method application. For impurity methods, the LOQ should be adequate to detect and quantify impurities at specification levels [8] [10]. For tolerance-based approaches, LOD should be ≤5-10% of tolerance and LOQ ≤15-20% of tolerance [12].
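The standard deviation and slope approach reduces to two one-line formulas, sketched below. The replicate responses and the slope are hypothetical; in practice σ may instead be taken from the residual standard deviation or the y-intercept standard deviation of the calibration line.

```python
from statistics import stdev

def lod_loq(low_level_responses, slope):
    """LOD = 3.3 * sigma / S and LOQ = 10 * sigma / S."""
    sigma = stdev(low_level_responses)   # SD of replicate responses
    return 3.3 * sigma / slope, 10.0 * sigma / slope

# Hypothetical replicate responses near the detection limit (instrument counts)
# and a hypothetical calibration slope in counts per ug/L.
responses = [10.0, 12.0, 11.0, 13.0, 9.0]
slope = 2.0

lod, loq = lod_loq(responses, slope)
print(f"LOD = {lod:.2f} ug/L, LOQ = {loq:.2f} ug/L")
```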

Linearity and Range Protocol

Experimental Design: Linearity is demonstrated across the specified range of the method, typically from 80-120% of the test concentration for assay methods, though wider ranges may be required for impurity methods [9] [8].

Procedure:

- Prepare a minimum of 5 concentrations covering the specified range (e.g., 50%, 80%, 100%, 120%, 150% of target) [6].

- Analyze each concentration in duplicate or triplicate.

- Plot the mean response against the theoretical concentration.

- Perform linear regression analysis to calculate the correlation coefficient (r), slope, y-intercept, and residual sum of squares.

Data Interpretation: The correlation coefficient (r) should typically be ≥ 0.99, though this alone is insufficient [10] [13]. Examine the residuals plot for random distribution without systematic patterns [12]. For advanced evaluation, fit studentized residuals and ensure they remain within ±1.96 limits across the range to confirm linearity [12]. The range is established as the interval where acceptable linearity, precision, and accuracy are demonstrated [6].

Robustness Testing Protocol

Experimental Design: Robustness evaluates the method's resilience to deliberate, small variations in operational parameters [9]. The experimental design should systematically vary key parameters within a realistic operating range.

Procedure for HPLC Methods:

- Identify critical method parameters: mobile phase pH (±0.2 units), mobile phase composition (organic ±2-5%), buffer concentration (±10%), column temperature (±5°C), flow rate (±10%), and detection wavelength (±2 nm) [8].

- Vary one parameter at a time while keeping others constant.

- Analyze system suitability samples and actual samples under each condition.

- Evaluate the impact on critical resolution, tailing factor, theoretical plates, and assay results.

Data Interpretation: System suitability criteria should be met under all varied conditions [8]. The results (e.g., assay values) obtained under varied conditions should be compared with those under normal conditions, typically expressed as % difference or ratio. There should be no significant deterioration in method performance when parameters are varied within the tested ranges [9] [8].
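The comparison against nominal conditions can be sketched as a percent-difference screen. The assay values are hypothetical, and the 2% flagging limit is an assumption for illustration only, since the article does not specify a numerical limit for robustness.

```python
def percent_difference(varied, nominal):
    """Signed % difference of a result under a varied condition vs. nominal."""
    return (varied - nominal) / nominal * 100.0

def flag_conditions(results, nominal, limit_percent):
    """Return the conditions whose |% difference| exceeds the chosen limit."""
    flagged = {}
    for condition, value in results.items():
        diff = percent_difference(value, nominal)
        if abs(diff) > limit_percent:
            flagged[condition] = diff
    return flagged

# Hypothetical assay results (% label claim) from one-factor-at-a-time changes.
nominal_assay = 100.2
varied_results = {
    "pH +0.2": 100.6,
    "pH -0.2": 99.7,
    "flow +10%": 100.0,
    "flow -10%": 100.9,
    "column temp +5 C": 97.9,
}

for condition, diff in flag_conditions(varied_results, nominal_assay, 2.0).items():
    print(f"{condition}: {diff:+.2f}% vs. nominal")
```

A flagged condition does not automatically fail the method; it usually means that parameter needs a tighter operating range or an explicit control in the procedure.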

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Essential Research Reagents and Materials for Method Validation

| Category | Specific Items | Function & Importance in Validation |
|---|---|---|
| Reference Standards | Certified Reference Materials (CRMs), USP/EP Reference Standards | Provide traceable, known-concentration materials for accuracy, linearity, and precision studies [9] |
| Chromatographic Columns | Multiple C18 and specialty columns from different manufacturers/lots | Evaluate selectivity, specificity, and robustness to column variations [9] [8] |
| HPLC/Spectroscopy Solvents | HPLC-grade water, acetonitrile, methanol, buffers | Ensure minimal interference background for low LOD/LOQ; critical for mobile phase preparation [9] |
| Sample Preparation Materials | Precision pipettes, volumetric glassware, filtration units, vials | Ensure accurate and precise sample preparation; critical for precision studies [9] |
| System Suitability Materials | Test mixtures with known resolution, tailing factors | Verify system performance before validation experiments; ensures data integrity [8] |
| Stability Study Materials | Controlled temperature/humidity chambers, light cabinets | Conduct forced degradation studies for specificity demonstration [8] |
| Data Analysis Tools | CDS software with validation modules, statistical analysis packages | Automate data collection, peak integration, and statistical calculations [9] |

Advanced Considerations for Inorganic Compounds Research

Matrix Effects in Inorganic Analysis

Inorganic compound analysis often involves complex matrices that can interfere with detection and quantification. Specificity evaluation should include testing with matrices containing common inorganic ions that might co-elute or interfere with the analyte of interest [6]. For elemental analysis, the selection of appropriate blanks is critical, particularly for endogenous analytes where an analyte-free matrix may not exist [14]. The standard addition method or use of internal standards is recommended to correct for matrix effects and recovery losses [10].
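The standard addition approach mentioned above can be illustrated with a short sketch. It assumes a linear response and equal-volume additions, so that the analyte concentration in the measured solution equals the fitted intercept divided by the slope. The data are hypothetical, and any dilution during sample preparation must be corrected for separately.

```python
from statistics import mean

def standard_addition(added_conc, signal):
    """Fit signal = b * added + a and return a / b, the estimated analyte
    concentration in the measured solution (same units as added_conc)."""
    xm, ym = mean(added_conc), mean(signal)
    sxx = sum((x - xm) ** 2 for x in added_conc)
    sxy = sum((x - xm) * (y - ym) for x, y in zip(added_conc, signal))
    b = sxy / sxx        # sensitivity in the sample matrix
    a = ym - b * xm      # signal from the unspiked sample
    return a / b

# Hypothetical ICP-MS style data: spikes in ug/L and measured intensities.
added = [0.0, 1.0, 2.0, 3.0]
intensity = [3.0, 5.0, 7.0, 9.0]

print(f"estimated concentration = {standard_addition(added, intensity):.2f} ug/L")
```

Because the calibration is built in the sample itself, the fitted slope already reflects any suppression or enhancement from the matrix, which is the main reason to prefer this approach when no analyte-free matrix is available.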

Method Comparison and Selection Criteria

When validating methods for inorganic compounds, researchers often need to select between multiple analytical techniques. The validation parameters provide critical comparison criteria:

- Techniques with high specificity (e.g., LC-MS, ICP-MS) are preferred when analyzing complex mixtures with potential interferents [9].

- Methods with lower LOD/LOQ are necessary for trace element analysis or impurity profiling [11].

- Robust methods with wide linear ranges reduce the need for frequent sample dilution and reanalysis [9].

- Precise and accurate methods minimize the risk of out-of-specification results and enhance product quality control [12].

Documentation and Regulatory Compliance

Comprehensive documentation is essential for regulatory submissions and laboratory audits [9]. The validation report should include:

- Objective and scope of the method validation

- Detailed description of chemicals, reagents, and equipment used

- Summary of methodology

- Complete validation data for all parameters, including raw data and statistical analysis

- Representative chromatograms, spectra, calibration curves, and peak purity data

- Conclusions regarding method suitability for its intended purpose [8]

Revalidation may be necessary when there are changes in the synthesis of the drug substance, composition of the product, or the analytical method itself [8]. The degree of revalidation depends on the nature of the changes, with minor changes requiring only partial revalidation and major changes necessitating full revalidation [8].

The seven key validation parameters discussed—accuracy, precision, specificity, LOD, LOQ, linearity, and robustness—form an interconnected framework that ensures analytical methods for inorganic compounds generate reliable, meaningful data. While traditional acceptance criteria provide a foundation for method validation, the emerging approach of evaluating method performance relative to product specification tolerance offers a more scientifically rigorous and risk-based framework [12].

For researchers in drug development, a thoroughly validated method is not merely a regulatory requirement but a fundamental scientific tool that supports product quality, patient safety, and efficacy. By implementing the detailed experimental protocols and comparison criteria outlined in this guide, scientists can ensure their analytical methods are truly fit-for-purpose and capable of supporting the rigorous demands of inorganic compounds research and pharmaceutical development.

Analytical method validation is a cornerstone of pharmaceutical quality assurance, ensuring that the procedures used to test drug substances and products are reliable, reproducible, and scientifically sound. For researchers working with organic compounds, demonstrating that an analytical method is fit-for-purpose is not merely a regulatory formality but a fundamental scientific requirement that directly impacts product quality and patient safety. The global regulatory landscape for method validation is primarily shaped by three key frameworks: the International Council for Harmonisation (ICH) Q2(R1) guideline, the United States Pharmacopeia (USP) general chapters, and the European Medicines Agency (EMA) requirements. While these frameworks share the common objective of ensuring data reliability, they differ in structure, emphasis, and specific requirements, creating a complex navigation challenge for drug development professionals working across international markets.

This comparison guide objectively examines the performance of these three regulatory frameworks in the context of analytical method validation for organic compounds. The analysis is structured to provide researchers with a clear understanding of each guideline's unique characteristics, enabling informed decision-making for method development, validation, and regulatory submission strategies. By synthesizing the core principles, experimental expectations, and practical implementations required by each framework, this guide serves as an essential resource for maintaining both scientific rigor and regulatory compliance in pharmaceutical research and development.

Comparative Analysis of Guideline Structures and Requirements

Core Philosophies and Regulatory Standing

The ICH, USP, and EMA frameworks approach analytical validation with distinct but complementary perspectives, each with its own regulatory standing and geographical influence:

ICH Q2(R1): As an internationally harmonized guideline, ICH Q2(R1) serves as the foundational scientific framework for analytical method validation across regulatory jurisdictions, including the United States, European Union, Japan, and Canada. Its approach is principle-based and universally applicable to various analytical techniques used for testing drug substances and products, including organic compounds. The guideline presents a structured methodology for validating the most common types of analytical procedures, focusing on defining and evaluating validation characteristics that demonstrate suitability for intended use [15] [16]. Health Canada and other regulatory authorities have formally implemented ICH guidelines, granting them official regulatory status in member regions [17].

USP Requirements: The United States Pharmacopeia embodies a compendial standard approach through its general chapters <1225> "Validation of Compendial Procedures" and <1226> "Verification of Compendial Procedures." These chapters provide detailed implementation guidance for validation parameters and acceptance criteria, particularly for methods described in USP monographs. The USP framework distinguishes between validation (for non-compendial methods) and verification (for compendial methods), offering specific guidance for both scenarios [18]. Unlike ICH, USP standards carry legal recognition in the United States under the Federal Food, Drug, and Cosmetic Act, making compliance mandatory for products marketed in the U.S.

EMA Expectations: The European Medicines Agency incorporates ICH Q2(R1) principles into the European regulatory framework but adds specific expectations through reflection papers and regional guidelines. EMA emphasizes the lifecycle approach to method validation and encourages the use of quality by design (QbD) principles. A notable EMA concept paper discusses "Transferring quality control methods validated in collaborative trials to a product/laboratory specific context," highlighting the importance of demonstrating method suitability for specific products and laboratory environments [19]. EMA's requirements have legal force within the EU member states and are particularly influential in international markets that follow European regulatory standards.

Direct Comparison of Validation Parameters

The table below provides a systematic comparison of how ICH Q2(R1), USP, and EMA address key validation parameters for analytical methods applied to organic compounds:

Table 1: Comparison of Validation Parameters Across ICH Q2(R1), USP, and EMA

| Validation Parameter | ICH Q2(R1) Approach | USP General Chapter <1225> | EMA/European Requirements |
|---|---|---|---|
| Specificity | Required with defined methodology for discrimination | Similar to ICH; additional focus on compendial applications | Aligns with ICH; increased emphasis on matrix effects |
| Accuracy | Required via spike recovery studies | Same approach as ICH | Same fundamental approach as ICH |
| Precision | Hierarchical (repeatability, intermediate precision, reproducibility) | Same hierarchical structure | Same hierarchical structure |
| Linearity | Minimum 5 concentration points | Minimum 5 points, with defined acceptance criteria | Same fundamental approach as ICH |
| Range | Defined relative to linearity results | Specifically defined for different procedure types | Same fundamental approach as ICH |
| Detection Limit (LOD) | Multiple approaches acceptable (visual, S/N, SD/slope) | Same methodological approaches | Same methodological approaches |
| Quantitation Limit (LOQ) | Multiple approaches acceptable (visual, S/N, SD/slope) | Same methodological approaches | Same methodological approaches |
| Robustness | Should be considered during development | Explicitly required with experimental design | Strongly encouraged with systematic study |
| System Suitability | Implied but not explicitly defined in validation parameters | Explicitly required with specific parameters | Expected, with alignment to Ph. Eur. requirements |
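
As a rough illustration of the SD/slope approach listed for LOD and LOQ in the table above, the sketch below applies the ICH Q2(R1) expressions LOD = 3.3σ/S and LOQ = 10σ/S, where σ is the standard deviation of the response and S is the calibration slope. The function names and all numbers are hypothetical, and σ is taken here from replicate blank readings, which is only one of the accepted ways to estimate it.

```python
from statistics import mean, stdev

def calibration_slope(conc, resp):
    """Least-squares slope of response versus concentration."""
    c_bar, r_bar = mean(conc), mean(resp)
    sxx = sum((c - c_bar) ** 2 for c in conc)
    sxy = sum((c - c_bar) * (r - r_bar) for c, r in zip(conc, resp))
    return sxy / sxx

def lod_loq(blank_responses, slope):
    """Return (LOD, LOQ) in concentration units using 3.3*sigma/S and 10*sigma/S."""
    sigma = stdev(blank_responses)  # assumption: sigma estimated from blanks
    return 3.3 * sigma / slope, 10.0 * sigma / slope

# Hypothetical five-level calibration (concentration in ug/L, response in counts)
conc = [1.0, 2.0, 5.0, 10.0, 20.0]
resp = [10.2, 19.8, 50.5, 99.7, 200.1]
blanks = [0.11, 0.09, 0.12, 0.10, 0.08, 0.10]

slope = calibration_slope(conc, resp)
lod, loq = lod_loq(blanks, slope)
print(f"slope={slope:.3f}, LOD={lod:.4f} ug/L, LOQ={loq:.4f} ug/L")
```

Whichever estimate of σ is chosen, the resulting limits would normally be confirmed experimentally by analyzing samples prepared near those concentrations.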

Analysis of Comparative Findings

The comparative analysis reveals both significant alignment and notable distinctions between the three frameworks:

Harmonized Core Parameters: For fundamental validation characteristics including accuracy, precision, linearity, and range, there is substantial alignment between ICH, USP, and EMA requirements. This harmonization reflects the international scientific consensus on essential validation elements, simplifying global development strategies for organic compound analytical methods. The shared foundational approach reduces redundant validation studies and facilitates mutual acceptance of data across regulatory jurisdictions [15] [16].

Procedural Distinctions: The most significant differences emerge in the application of robustness testing and system suitability requirements. While ICH Q2(R1) mentions robustness as a consideration during development, USP and EMA provide more explicit expectations for experimental designs evaluating method robustness. Similarly, USP offers detailed specifications for system suitability testing, whereas ICH treats this more implicitly as part of the overall validation approach [18] [19].

Regulatory Scope and Flexibility: ICH Q2(R1) maintains a principle-based approach that applies broadly across analytical techniques, while USP provides more prescriptive guidance tailored to specific compendial methods. EMA positions itself between these approaches, embracing ICH principles while adding specific European perspectives through reflection papers and Q&As. This creates a spectrum of regulatory flexibility, with ICH offering the most adaptability for novel analytical technologies and USP providing the clearest predefined requirements for established methods [19].

Experimental Protocols for Validation Parameters

Protocol for Specificity and Selectivity

Objective: To demonstrate that the analytical procedure can unequivocally discriminate and quantify the analyte of interest from other components in organic compound samples, including impurities, degradation products, and matrix components.

Materials and Reagents:

- Reference Standard: High-purity certified reference material of the organic compound

- Test Samples: Representative batches of drug substance and product

- Forced Degradation Samples: Samples subjected to stress conditions (acid, base, oxidation, thermal, photolytic)

- Placebo/Matrix Blank: All components except the active compound

- Potential Impurities: Known synthetic intermediates and degradation products

Methodology:

- Chromatographic Separation: For HPLC methods, inject individual preparations of the analyte, potential impurities, and placebo components to establish baseline separation.

- Peak Purity Assessment: Use diode array detector (DAD) or mass spectrometry (MS) to demonstrate peak homogeneity of the analyte in stressed samples.

- Forced Degradation Studies: Expose the organic compound to various stress conditions to generate degradation products:

- Acidic Hydrolysis: 0.1N HCl at room temperature for 24 hours or mild heating

- Basic Hydrolysis: 0.1N NaOH at room temperature for 24 hours or mild heating

- Oxidative Stress: 3% H₂O₂ at room temperature for 24 hours

- Thermal Stress: Solid state at 105°C for 1-2 weeks

- Photolytic Stress: Exposure to UV and visible light per ICH Q1B

- Resolution Verification: Demonstrate resolution between the analyte peak and the closest eluting potential impurity exceeds 2.0.

Acceptance Criteria: The method should demonstrate no interference from placebo components at the retention time of the analyte. Peak purity tests should confirm homogeneity of the analyte peak in stressed samples. All known impurities should be baseline resolved from the analyte peak [16].

Protocol for Accuracy Evaluation

Objective: To establish the closeness of agreement between the conventional true value and the value found by the analytical method for organic compounds.

Materials and Reagents:

- Reference Standard: Certified reference material with known purity

- Placebo/Matrix Materials: Representative blank matrix

- Test Samples: Homogeneous representative sample of the organic compound

Methodology:

- Sample Preparation: Prepare a minimum of nine determinations at three concentration levels (e.g., 80%, 100%, 120% of target concentration) covering the specified range.

- Spike Recovery for Drug Products: For formulations, spike the placebo with known quantities of the analyte at the three concentration levels.

- Sample Analysis: Analyze each preparation in triplicate using the validated method.

- Calculation: Calculate recovery as (Measured Concentration/Added Concentration) × 100%.

Acceptance Criteria: Mean recovery should be within 98.0-102.0% for the drug substance at each level. For impurities, recovery should be established based on the quantification level, typically 70-130% for impurities at specification levels [16].
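
The recovery calculation and the 98.0-102.0% criterion for a drug substance can be sketched as follows. This is a minimal example under stated assumptions: the function names, the data layout, and the spiked and measured values are all hypothetical, and the limits are passed as parameters so that wider impurity limits could be substituted.

```python
from statistics import mean

def percent_recovery(measured, added):
    """Recovery = (measured concentration / added concentration) x 100%."""
    return measured / added * 100.0

def evaluate_accuracy(results, low=98.0, high=102.0):
    """results maps a level label to a list of (measured, added) pairs.

    Returns {level: (mean_recovery_percent, passes)}.
    """
    summary = {}
    for level, pairs in results.items():
        recoveries = [percent_recovery(m, a) for m, a in pairs]
        avg = mean(recoveries)
        summary[level] = (avg, low <= avg <= high)
    return summary

# Hypothetical data: three preparations at each of three levels (mg/mL)
results = {
    "80%": [(0.795, 0.800), (0.803, 0.800), (0.798, 0.800)],
    "100%": [(1.004, 1.000), (0.996, 1.000), (1.001, 1.000)],
    "120%": [(1.192, 1.200), (1.207, 1.200), (1.199, 1.200)],
}

for level, (avg, ok) in evaluate_accuracy(results).items():
    print(f"{level}: mean recovery {avg:.2f}% -> {'pass' if ok else 'fail'}")
```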

Protocol for Precision Assessment

Objective: To demonstrate the degree of scatter between a series of measurements from multiple sampling of the same homogeneous sample under prescribed conditions.

Materials and Reagents:

- Reference Standard: Certified reference material

- Test Sample: Homogeneous representative sample of organic compound

- Mobile Phase and Diluents: Multiple batches prepared independently

Methodology:

- Repeatability: Analyze a minimum of six determinations at 100% of test concentration by the same analyst on the same day with the same equipment.

- Intermediate Precision: Perform analyses on different days, by different analysts, using different instruments to evaluate within-laboratory variations.

- Reproducibility: If applicable, conduct collaborative studies between different laboratories (often required for standardization or method transfer).

Acceptance Criteria: For assay of drug substance, repeatability should have RSD ≤ 1.0%. Intermediate precision should show no significant difference between operators, instruments, or days based on statistical evaluation (F-test, t-test) [16].
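
The repeatability criterion reduces to a relative standard deviation calculation, sketched below with hypothetical assay values. The helper name and data are assumptions for illustration, and the statistical comparison for intermediate precision (F-test, t-test) is not covered here.

```python
from statistics import mean, stdev

def percent_rsd(values):
    """Relative standard deviation (%) using the sample standard deviation."""
    return stdev(values) / mean(values) * 100.0

# Hypothetical six determinations at 100% of test concentration (% label claim)
repeatability = [99.8, 100.3, 99.6, 100.1, 100.4, 99.9]

rsd = percent_rsd(repeatability)
print(f"Repeatability RSD = {rsd:.2f}% -> {'pass' if rsd <= 1.0 else 'fail'}")
```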

Workflow Visualization of Method Validation Processes

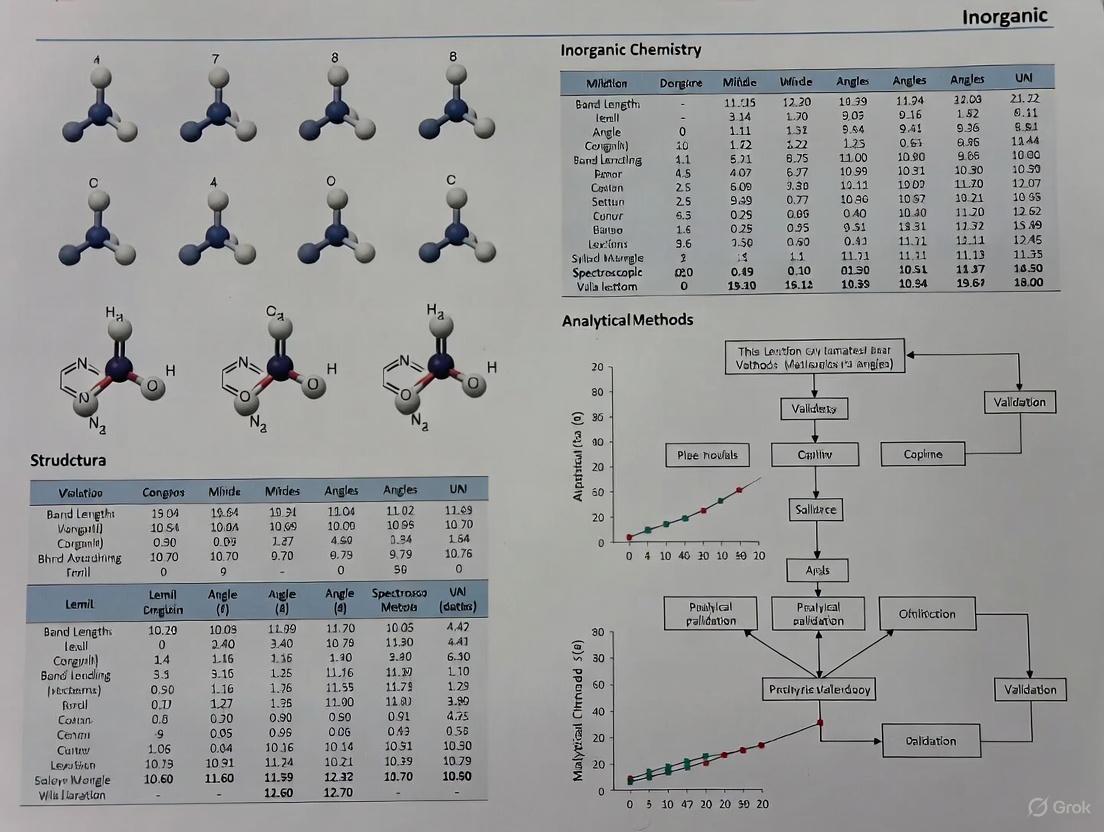

Figure 1: Analytical Method Validation Workflow for Organic Compounds

Essential Research Reagent Solutions for Validation Studies

Table 2: Essential Research Reagents for Analytical Method Validation

| Reagent/Material | Functional Role in Validation | Critical Quality Attributes |
|---|---|---|
| Certified Reference Standards | Serves as primary standard for accuracy, precision, and linearity studies | Certified purity, well-characterized structure, appropriate documentation and storage conditions |
| Chromatography Columns | Provides stationary phase for separation in specificity and robustness testing | Reproducible chemistry, appropriate selectivity for organic compounds, documented performance history |
| HPLC-Grade Solvents | Forms mobile phase components for chromatographic methods | Low UV cutoff, minimal particulate matter, controlled water content, appropriate purity grade |
| Buffer Salts and Additives | Modifies mobile phase properties to enhance separation | HPLC grade, controlled pH, minimal UV absorbance, compatible with MS detection if applicable |
| Derivatization Reagents | Enhances detection characteristics for certain organic compounds | High purity, well-documented reaction conditions, appropriate stability profile |
| System Suitability Mixtures | Verifies method performance before validation experiments | Contains all critical analytes, stable for intended use period, demonstrates key performance parameters |

The comparative analysis of ICH Q2(R1), USP, and EMA requirements for analytical method validation reveals a harmonized yet nuanced regulatory landscape for organic compound analysis. While the core scientific principles remain consistent across frameworks, strategic implementation requires careful consideration of regional emphases and specific requirements.

For global development programs targeting both U.S. and European markets, a strategic hybrid approach is recommended. This involves using ICH Q2(R1) as the foundational framework while incorporating USP's explicit system suitability requirements and EMA's emphasis on lifecycle management and robustness. Such an approach ensures compliance across jurisdictions while maximizing resource efficiency. For organic compounds specifically, early attention to specificity through forced degradation studies and comprehensive impurity separation represents a critical success factor acceptable to all regulatory bodies.

The evolving regulatory landscape, particularly with the advent of ICH Q2(R2) and its increased emphasis on analytical lifecycle management, suggests that forward-thinking laboratories should begin incorporating risk-based approaches and enhanced method robustness strategies into their current practices, regardless of the specific guideline followed. This proactive stance positions organizations for both current compliance and future regulatory expectations, ensuring the continued reliability and acceptability of analytical methods for organic compounds in an increasingly complex global market.

The Role of System Suitability and Analytical Instrument Qualification (AIQ)

In the rigorous world of pharmaceutical research and development, particularly in the analysis of inorganic compounds, the reliability of analytical data is non-negotiable. Two cornerstone processes ensure this reliability: Analytical Instrument Qualification (AIQ) and System Suitability Testing (SST). Within the framework of analytical method validation, these processes form a hierarchical relationship, ensuring that instruments are fundamentally sound and that methods perform as expected at the moment of use. A proper understanding of their distinct, complementary roles is essential for researchers, scientists, and drug development professionals to generate data that is both scientifically valid and regulatory-compliant.

The United States Pharmacopeia (USP) general chapter <1058> outlines a data quality triangle, a model that clearly defines the interdependence of key analytical processes [20]. This model establishes that AIQ forms the foundation of all analytical work [20]. Upon this qualified foundation, analytical methods are validated. Finally, system suitability tests serve as the final verification immediately before sample analysis to confirm that the validated method is performing as intended on the qualified system on a specific day [20] [21]. This structured approach is not merely a regulatory formality but represents good analytical science and provides significant business benefit by protecting the investment in analytical data [20].

Defining the Core Concepts: AIQ and SST

What is Analytical Instrument Qualification (AIQ)?

Analytical Instrument Qualification (AIQ) is the process of collecting documented evidence that an instrument performs suitably for its intended purpose [20]. It answers a fundamental question: Do you have the right system for the right job? [20] AIQ is instrument-specific and focuses on the hardware, software, and associated components of the system itself, independent of any particular analytical method [22].

The traditional model for AIQ is the 4Qs model, which breaks down the qualification process into four sequential phases [20] [23]:

- Design Qualification (DQ): The process of defining the functional and operational specifications of an instrument before purchase [20] [23].

- Installation Qualification (IQ): The documented verification that the instrument has been delivered as specified, correctly installed, and that the environment is suitable for it [20] [23].

- Operational Qualification (OQ): The documented verification that the instrument will function according to its operational specifications in the selected environment [20] [23]. This often involves testing parameters like detector wavelength accuracy, pump flow rate accuracy, and injector precision [20].

- Performance Qualification (PQ): The documented verification that the instrument consistently performs according to user-defined specifications and is fit for its intended use in the actual operating environment [20] [23].

It is critical to note that AIQ is a regulatory requirement in the pharmaceutical industry, and failure to adequately qualify equipment is a common finding in FDA warning letters [20] [22].

What is System Suitability Testing (SST)?

System Suitability Testing (SST) is a method-specific test used to verify that the analytical system (the combination of the instrument, method, and sample preparation) will perform in accordance with the criteria set forth in the procedure at the time of analysis [20] [21]. It answers the question: Is the method running on the system working as I expect today, before I commit my samples? [20]

Unlike AIQ, SST is method-specific and its parameters are derived from the requirements of the analytical procedure being run [21]. For chromatographic methods, common SST criteria include [21]:

- Precision/Repeatability: Demonstrates the injection-to-injection performance of the system, typically measured as the relative standard deviation (RSD) of replicate injections.

- Resolution (Rs): Measures how well two adjacent peaks are separated, which is critical for accurate quantitation.

- Tailing Factor (T): Assesses the symmetry of a chromatographic peak, which can affect integration accuracy.

- Signal-to-Noise Ratio (S/N): Used to verify the sensitivity of the system, particularly for impurity methods.

SST is performed each time an analysis is conducted, immediately before or in parallel with the analysis of the actual samples [21]. If an SST fails, the entire assay or run is discarded, and no results are reported other than the failure itself [21].

A Comparative Analysis: AIQ vs. SST

While AIQ and SST are both essential for data quality, they serve fundamentally different purposes. The following table provides a clear, structured comparison of their key characteristics, illustrating how they complement each other within the analytical workflow.

Table 1: Comprehensive Comparison of Analytical Instrument Qualification (AIQ) and System Suitability Testing (SST)

| Feature | Analytical Instrument Qualification (AIQ) | System Suitability Testing (SST) |
|---|---|---|
| Primary Purpose | Establish instrument is suitable for intended use [22] | Verify system performance for a specific analysis [22] |
| Focus | Instrument hardware, software, and components [22] | Analytical system (instrument, method, samples) [21] [22] |
| Nature | Instrument-specific [20] | Method-specific [20] [21] |
| Timing | Initially during installation, after major repairs, and periodically [20] [22] | Routinely, before or during each analysis [21] [22] |
| Key Parameters | Pump flow rate accuracy, detector wavelength accuracy, detector linearity, injector precision [20] | Precision (RSD), resolution, tailing factor, signal-to-noise ratio [21] |
| Basis for Parameters | Manufacturer and user specifications, pharmacopeial standards [20] [24] | Pre-defined criteria from the validated analytical method [20] [21] |
| Regulatory Status | Explicit regulatory requirement (e.g., USP <1058>) [20] [22] | Expected best practice; required by pharmacopoeias for specific methods [21] [22] |
| Consequence of Failure | Instrument taken out of service for investigation and repair [20] | Analytical run is discarded; samples are not reported [21] |

The Hierarchical Relationship and Workflow

The relationship between AIQ, method validation, and SST is not merely sequential but hierarchical. One cannot replace the other, as they control different aspects of the analytical process [20]. A common fallacy in some laboratories is the argument that "our laboratory does not need to qualify the instrument because we run SST samples and they are within limits" [20]. This is a critical error. An SST is designed to detect issues related to the method's performance on a given day, such as column degradation or mobile phase preparation errors. It is not designed to uncover fundamental instrument faults, such as a slight inaccuracy in the detector's wavelength or a minor error in the pump's flow rate [20]. These underlying instrument problems could lead to systematic errors in all results, which might go undetected by a passing SST.

The following workflow diagram illustrates the logical sequence and interdependence of these components in the analytical lifecycle.

Experimental Protocols and Verification

Protocol for HPLC Instrument Qualification

For an HPLC system used in the analysis of inorganic compounds, the OQ phase of AIQ would include testing the following key instrument functions with traceable standards and calibrated test equipment [20]:

Pump Flow Rate Accuracy and Precision:

- Methodology: The pump is set to specific flow rates (e.g., 0.5 mL/min, 1.0 mL/min, 2.0 mL/min). At each setting, the effluent is collected in a volumetric flask for a measured time. The measured volume is compared to the expected volume to determine accuracy. Precision is determined by repeating this measurement multiple times.

- Acceptance Criteria: Typically, accuracy within ±1% of the set flow rate and precision with an RSD of <0.5% [20].
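
A minimal sketch of the flow rate evaluation described above, assuming timed volumetric collections. The function name, the dictionary keys, and the collected volumes are hypothetical; the default limits mirror the ±1% accuracy and <0.5% RSD figures quoted in the acceptance criteria.

```python
from statistics import mean, stdev

def flow_rate_check(set_flow, volumes_ml, minutes, acc_limit=1.0, rsd_limit=0.5):
    """Evaluate pump accuracy and precision from timed volume collections.

    set_flow   : nominal flow rate in mL/min
    volumes_ml : collected volume for each replicate, in mL
    minutes    : collection time for every replicate, in minutes
    """
    flows = [v / minutes for v in volumes_ml]
    avg = mean(flows)
    accuracy_error = (avg - set_flow) / set_flow * 100.0
    rsd = stdev(flows) / avg * 100.0
    return {
        "mean_flow": avg,
        "accuracy_error_percent": accuracy_error,
        "rsd_percent": rsd,
        "passes": abs(accuracy_error) <= acc_limit and rsd < rsd_limit,
    }

# Hypothetical replicate collections at 1.0 mL/min over 10 minutes
result = flow_rate_check(1.0, [10.00, 10.02, 9.98], 10.0)
print(result)
```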

Detector Wavelength Accuracy:

- Methodology: Using a holmium oxide or other certified wavelength standard solution, the detector's wavelength scale is verified by measuring the standard and comparing the observed absorbance maxima to the certified values.

- Acceptance Criteria: Deviation should be within ±1 nm for UV/Vis detectors [20].

Detector Linearity:

- Methodology: A series of standard solutions of a suitable reference material (e.g., caffeine) are analyzed across a range of concentrations. The response is plotted against concentration, and the slope, y-intercept, and coefficient of determination (R²) are calculated.

- Acceptance Criteria: A coefficient of determination (R²) of >0.999 is typically expected [20].
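
The linearity evaluation can be sketched as an ordinary least-squares fit. The function name and the concentration and response values below are hypothetical, and only the R² > 0.999 check from the acceptance criteria is applied.

```python
from statistics import mean

def linear_fit(x, y):
    """Return (slope, intercept, r_squared) for a least-squares line y = a*x + b."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared

# Hypothetical detector responses (mAU) for five standard concentrations (ug/mL)
conc = [5.0, 10.0, 20.0, 40.0, 80.0]
resp = [50.4, 100.9, 199.2, 401.1, 799.0]

slope, intercept, r2 = linear_fit(conc, resp)
print(f"slope={slope:.4f}, intercept={intercept:.3f}, R^2={r2:.5f}")
print("pass" if r2 > 0.999 else "fail")
```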

Autosampler Injector Precision and Carryover:

- Methodology: Precision is tested by making multiple consecutive injections of a standard solution and calculating the RSD of the peak areas. Carryover is assessed by injecting a blank solvent after a high-concentration standard and checking for any residual peak.

- Acceptance Criteria: Injection precision RSD should be <1.0% for a sufficient number of replicates. Carryover should be <0.1% [20].
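
Both autosampler checks are simple ratios, sketched below. The function names and peak areas are hypothetical, and carryover is expressed here as the blank peak area relative to the mean area of the preceding high-concentration standard, which is one common convention rather than a prescribed one.

```python
from statistics import mean, stdev

def injection_rsd(peak_areas):
    """Relative standard deviation (%) of replicate injection peak areas."""
    return stdev(peak_areas) / mean(peak_areas) * 100.0

def carryover_percent(blank_area, standard_area):
    """Residual peak area in the blank as a percentage of the standard area."""
    return blank_area / standard_area * 100.0

# Hypothetical peak areas (arbitrary units)
standard_areas = [15230.0, 15198.0, 15251.0, 15220.0, 15241.0, 15209.0]
blank_after_standard = 6.1

rsd = injection_rsd(standard_areas)
carryover = carryover_percent(blank_after_standard, mean(standard_areas))
print(f"Injection RSD = {rsd:.2f}% (limit < 1.0%)")
print(f"Carryover = {carryover:.3f}% (limit < 0.1%)")
```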

Protocol for a System Suitability Test in Chromatography

For a chromatographic method quantifying an inorganic API and its related compounds, a typical SST protocol would be established during method validation and executed before each run [21]:

- Preparation: A system suitability standard is prepared, containing the analyte(s) of interest at a specified concentration, often matching the target concentration of the test samples.

- Injection: A minimum of five or six replicate injections of this standard are made according to the method [21].

- Calculation and Acceptance: The resulting chromatogram is evaluated for pre-defined parameters, for example:

- Precision: The RSD of the peak areas from the replicate injections must be ≤ 2.0% [21].

- Resolution: The resolution between the API peak and the closest eluting impurity peak must be ≥ 2.0.

- Tailing Factor: The tailing factor for the analyte peak must be ≤ 2.0.

- Theoretical Plates: The number of theoretical plates for the analyte peak must be > 2000.
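
The SST criteria listed above can be evaluated together, as in the following sketch. It assumes the conventional pharmacopeial formulas (resolution from baseline peak widths, tailing factor from the width at 5% peak height, plate number as 16(tR/W)²); the function names, argument names, and chromatographic values are hypothetical.

```python
from statistics import mean, stdev

def resolution(t1, w1, t2, w2):
    """Rs = 2 (t2 - t1) / (w1 + w2), using baseline peak widths."""
    return 2.0 * (t2 - t1) / (w1 + w2)

def tailing_factor(width_5pct, front_half_width):
    """T = W0.05 / (2 f), with f the front half-width at 5% of peak height."""
    return width_5pct / (2.0 * front_half_width)

def theoretical_plates(retention_time, baseline_width):
    """N = 16 (tR / W)^2."""
    return 16.0 * (retention_time / baseline_width) ** 2

def evaluate_sst(areas, rs, tailing, plates):
    """Apply the example limits: RSD <= 2.0%, Rs >= 2.0, T <= 2.0, N > 2000."""
    rsd = stdev(areas) / mean(areas) * 100.0
    checks = {
        "precision": rsd <= 2.0,
        "resolution": rs >= 2.0,
        "tailing": tailing <= 2.0,
        "plates": plates > 2000,
    }
    checks["run_valid"] = all(checks.values())
    return checks

# Hypothetical system suitability injections (times and widths in minutes)
areas = [10150.0, 10210.0, 10180.0, 10195.0, 10170.0, 10205.0]
rs = resolution(5.0, 0.40, 6.0, 0.40)
t = tailing_factor(0.30, 0.12)
n = theoretical_plates(6.0, 0.40)
print(evaluate_sst(areas, rs, t, n))
```

If `run_valid` is false, the run would be rejected and investigated before any sample results are reported.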

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key reagents and materials essential for performing effective AIQ and SST in an inorganic pharmaceutical analysis setting.

Table 2: Key Research Reagents and Materials for AIQ and SST

| Item | Function & Application |
|---|---|
| Certified Wavelength Standard (e.g., Holmium Oxide Solution) | Used during AIQ (OQ) to verify the wavelength accuracy of a UV/Vis or PDA detector [20]. |
| Traceably Calibrated Digital Flow Meter | Used during AIQ (OQ) to accurately measure and verify the flow rate delivered by an HPLC or UHPLC pump [20]. |
| Certified Reference Standards (Primary and Secondary) | High-purity, qualified standards used for SST and calibration. They must be from a batch different from the test samples and qualified against a former reference standard [21]. |
| System Suitability Test Mixture | A mixture of known compounds, specific to the analytical method, used to verify resolution, retention, and other chromatographic performance criteria during SST [21]. |
| Qualified HPLC/HPLC-MS Grade Solvents and Mobile Phase Additives | Essential for preparing mobile phases and sample solutions to ensure minimal background interference, stable baselines, and reproducible results in both AIQ and SST. |


Evolving Regulatory Landscape and Future Directions

The regulatory framework governing AIQ and SST is dynamic. A significant development is the ongoing update of USP general chapter <1058>, which is proposed for a title change to Analytical Instrument and System Qualification (AISQ) [24] [23]. This update emphasizes a more integrated, lifecycle approach to qualification, moving beyond the rigid 4Qs model to a more flexible three-stage process [24] [23]:

- Specification and Selection: Defining the intended use via a User Requirements Specification (URS).

- Installation, Qualification, and Validation: Integrating instrument qualification with software validation.

- Ongoing Performance Verification (OPV): Ensuring the instrument remains in a state of control through its operational life via monitoring, calibration, and maintenance [24] [23].

This evolution aligns with the FDA Guidance for Industry on Process Validation and the Analytical Procedure Lifecycle (APL) concepts from USP <1220> and ICH Q14, promoting a holistic, scientifically sound framework for data quality [24]. For scientists, this means that the principles of AIQ and SST are becoming even more deeply embedded in the entire lifespan of an analytical procedure, from conception to retirement.

Establishing a Foundation with High-Purity Reference Materials and QC Protocols

In the field of inorganic compounds research and drug development, the veracity of analytical data is fundamentally dependent on two critical pillars: well-characterized high-purity reference materials and rigorously applied quality control (QC) protocols. Reference materials (RMs) are defined as "material, sufficiently homogeneous and stable with reference to specified properties, which has been established to be fit for its intended use in measurement or in examination of nominal properties" [25]. For quantitative analysis, this narrows to a more specific definition: a RM is a well-defined chemical, identical with the analyte to be quantified, of high and well-known purity [25].

The importance of purity assessment extends beyond mere regulatory compliance. In any biomedical and chemical context, a truthful description of chemical constitution requires coverage of both structure and purity, affecting all drug molecules regardless of development stage or source [26]. This qualification is particularly critical in discovery programs and whenever chemistry is linked with biological and/or therapeutic outcome, as trace impurities of high potency can lead to false conclusions about biological activity [26].

The Critical Role of Purity and Uncertainty in Reference Materials

Understanding Purity and Its Uncertainty

For reference materials, it is not only the purity value itself that must be known but also the uncertainty of this value. Interestingly, there is no definition of "purity" in the International Vocabulary of Metrology [25]. In practice, high purity of a chemical compound is obtained by the removal of impurities such as water, residual solvents, reaction by-products, isomeric compounds, or matrix compounds. Therefore, the content of a highly pure (reference) material is usually found by the quantitative determination of all impurities and subtracting their sum from 100% (mass/mass with solid compounds) [25].

The measurement uncertainty (MU) associated with purity values is an essential concept in modern analytical chemistry. It is a broader concept than "precision" and can illuminate the quantitative interplay of the individual working steps of a method, thus leading to a deeper understanding of its critical points [25]. However, no MU budget is complete without the uncertainty of the purity data of the RM. The situation with reference materials of pharmaceutical interest has been described as unsatisfactory, with only a limited number of high-quality RMs commercially available [25].
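
The mass-balance approach and its uncertainty can be sketched as follows. This is an illustration under stated assumptions: the impurity categories, their values, and their standard uncertainties are hypothetical, and the individual uncertainties are combined as a root sum of squares, which presumes the impurity determinations are independent.

```python
from math import sqrt

def mass_balance_purity(impurities):
    """Purity (% m/m) = 100 - sum of all quantified impurity contents (% m/m)."""
    return 100.0 - sum(impurities.values())

def combined_uncertainty(uncertainties):
    """Root-sum-of-squares of standard uncertainties (assumes independence)."""
    return sqrt(sum(u ** 2 for u in uncertainties.values()))

# Hypothetical impurity contents and standard uncertainties, both in % m/m
impurities = {"water": 0.20, "residual_solvents": 0.10, "related_substances": 0.15}
uncertainties = {"water": 0.03, "residual_solvents": 0.02, "related_substances": 0.04}

purity = mass_balance_purity(impurities)
u_purity = combined_uncertainty(uncertainties)
print(f"Purity = {purity:.2f} % m/m, standard uncertainty = {u_purity:.3f} % m/m")
```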

Classification of Impurities in High-Purity Materials

Impurities in chemical compounds can be categorized according to ICH (International Council for Harmonisation) guidelines into three main types [27]:

Organic Impurities: These are frequently drug-related or process-related impurities found in chemical products and are more likely to be introduced during manufacturing, purification, or storage. They include:

- Impurities in Starting Materials

- Impurities in By-products

- Impurities in Intermediates

- Degradation Products

- Reagents, Ligands & Catalysts

- Mutagenic/Genotoxic Impurities

- Carcinogenic Impurities

- Nitrosamine Impurities

Inorganic Impurities: Typically detected and quantified using pharmacopeial standards, these include:

- Heavy Metals or other Residual Metals

- Inorganic Salts

- Reagents, Ligands & Catalysts

- Filter Aids, Charcoal & Other materials

Residual Solvents: Residuals of solvents involved in the production process that can alter material properties even at minute quantities.

Analytical Techniques for Purity Assessment

Comparison of Primary Analytical Methods

Various analytical techniques are employed for purity assessment, each with distinct principles, applications, and limitations. The table below summarizes the key techniques used for high-purity materials:

Table 1: Comparison of Analytical Techniques for Purity Assessment

| Technique | Principle | Applications in Purity Assessment | Key Advantages |
|---|---|---|---|
| Quantitative NMR (qNMR) | Measurement of NMR signal intensities proportional to number of nuclei [28] | Absolute purity determination, simultaneous structural and quantitative analysis [26] | Nearly universal detection, primary ratio method, nondestructive [26] |
| High Performance Liquid Chromatography (HPLC) | Separation based on differential partitioning between mobile and stationary phases [27] | Relative purity assessment, separation and quantification of components | High resolution, sensitive, widely available |
| Inductively Coupled Plasma Mass Spectrometry (ICP-MS) | Ionization of sample in inductively coupled plasma, mass separation [27] | Trace metal analysis, elemental impurities | Extremely low detection limits, multi-element capability |
| Gas Chromatography-Mass Spectrometry (GC-MS) | Separation by GC followed by mass spectral detection [27] | Volatile compound analysis, residual solvents | High sensitivity, definitive compound identification |
| Thin Layer Chromatography (TLC) | Affinity-based separation on adsorbent material [27] | Rapid purity screening, impurity profiling | Simple, cost-effective, minimal equipment |

The Emerging Role of qNMR in Purity Assessment

Quantitative NMR (qNMR) has emerged as a particularly powerful technique for purity assessment due to its versatility and reliability. As a primary ratio method, qNMR uses nearly universal detection and provides a versatile and orthogonal means of purity evaluation [26]. Absolute qNMR with flexible calibration captures analytes that frequently escape detection, such as water and sorbents [26].

The measurement equation for 1H-qNMR assessment of mass purity (P_PC) is represented as [28]:

Diagram 1: qNMR Purity Calculation Equation Parameters

This equation highlights that qNMR purity determination is based on ratio references of mass and signal intensity of the analyte species to that of chemical standards of known purity [28]. The method's precision stems from the direct proportionality between the amplitude of each spin component and the number of corresponding resonant nuclei [28].
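The equation itself is not reproduced in this copy of the text. As a reference point, a commonly used form of the internal-standard 1H-qNMR purity equation is sketched below. Only the symbol P_PC comes from the text; the remaining symbols are assumptions introduced for illustration, and the notation in [28] may differ: I is the integrated signal intensity, N the number of nuclei giving rise to the integrated signal, M the molar mass, m the weighed mass, and P the mass purity, with the subscript PC denoting the analyte and IS the standard of known purity.

```latex
P_{\mathrm{PC}} =
  \frac{I_{\mathrm{PC}}}{I_{\mathrm{IS}}} \cdot
  \frac{N_{\mathrm{IS}}}{N_{\mathrm{PC}}} \cdot
  \frac{M_{\mathrm{PC}}}{M_{\mathrm{IS}}} \cdot
  \frac{m_{\mathrm{IS}}}{m_{\mathrm{PC}}} \cdot
  P_{\mathrm{IS}}
```

Each factor is a ratio of an analyte quantity to the corresponding standard quantity (or its inverse), which is why the method is described as a ratio method.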

Quality Control Frameworks and Protocols

The Validation Master Plan

A comprehensive Validation Master Plan (VMP) serves as the foundation for all qualification and validation activities. The VMP should include [29]:

- A general validation policy with description of intended working methodology

- Description of the facility with detailed description of critical points

- Description of the preparation process(es)

- List of production equipment to be qualified

- List of quality control equipment to be qualified

- List of other ancillary equipment, utilities, and systems

The Qualification Process: IQ, OQ, PQ

Equipment and system qualification follows a structured approach comprising three key stages:

Diagram 2: Equipment Qualification Process Workflow

Installation Qualification (IQ) provides documented verification that facilities, systems, and equipment are installed according to approved design and manufacturer's recommendations [30]. Key elements include verification of installation, component and part verification, instrument calibration, environmental and safety checks, and documentation [30].

Operational Qualification (OQ) involves documented verification that facilities, systems, and equipment perform as intended throughout anticipated operating ranges [30]. This includes test plan development, operational tests, critical parameter verification, and challenge tests [30].

Performance Qualification (PQ) provides documented verification that systems and equipment can perform effectively and reproducibly based on approved process methods and product specifications [30]. PQ typically involves testing with production materials, worst-case scenario testing, and operational range testing [30].

QC Parameters and Protocols for Analytical Instruments

Quality control for analytical instruments involves monitoring specific parameters to ensure data reliability:

Table 2: Essential QC Parameters for Analytical Instrument Qualification

| QC Parameter | Definition | Acceptance Criteria | Frequency |
|---|---|---|---|
| Accuracy | Degree of agreement with true value [31] | Percent recovery within established limits (e.g., ±10%) [31] | Each analytical run |
| Precision | Measure of reproducibility [31] | Relative percent difference or %RSD within limits | Each batch |
| Linearity | Ability to provide results proportional to analyte concentration | Correlation coefficient ≥0.995 [31] | Initial qualification and after major changes |
| Limit of Detection (LOD) | Lowest detectable concentration | Signal-to-noise ratio ≥3:1 | Initial qualification |
| Limit of Quantification (LOQ) | Lowest quantifiable concentration | Signal-to-noise ratio ≥10:1 | Initial qualification |
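The parameters in Table 2 reduce to a few simple calculations. The following is a minimal Python sketch of those calculations, not a validated implementation. The function names are hypothetical, and the default ±10% tolerance is taken from the example limit in the table; actual limits should come from the laboratory's own procedures.

```python
import statistics


def percent_recovery(measured, true_value):
    """Accuracy expressed as percent recovery of a known value."""
    return 100.0 * measured / true_value


def recovery_within_limits(recovery_pct, tolerance_pct=10.0):
    """True if recovery lies within 100% +/- tolerance (assumed example limit)."""
    return abs(recovery_pct - 100.0) <= tolerance_pct


def percent_rsd(values):
    """Precision as relative standard deviation (%), using the sample SD."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)


def relative_percent_difference(a, b):
    """Precision for a duplicate pair, relative to the pair mean (%)."""
    return 100.0 * abs(a - b) / ((a + b) / 2.0)


def meets_signal_to_noise(signal, noise, threshold):
    """LOD check uses threshold=3, LOQ check uses threshold=10."""
    return signal / noise >= threshold


# Hypothetical replicate results for a 10.0 ug/L control standard
replicates = [9.8, 10.1, 9.9, 10.2, 10.0]
mean_recovery = percent_recovery(statistics.mean(replicates), 10.0)
print(recovery_within_limits(mean_recovery), round(percent_rsd(replicates), 2))
```

Keeping each parameter as its own small function makes it straightforward to apply different acceptance limits per analyte or per method.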

For specific techniques like ICP-MS, QC protocols include [31]:

- Initial Calibration: Using blank and at least five calibration standards with correlation coefficient ≥0.995

- Initial Calibration Verification (ICV): Analysis of certified solution from different source with control limits typically ±10%

- Continuing Calibration Verification (CCV): Analyzed every two hours during analytical run

- Laboratory Reagent Blank (LRB): To assess contamination from preparation process

- Matrix Spikes: To evaluate matrix effects
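The checks listed above can be expressed as simple pass/fail tests. The sketch below is illustrative only: the function names and the example data are hypothetical, the thresholds (five standards, r ≥ 0.995, ±10%, two hours) are the ones quoted in the list, and the matrix spike function only computes recovery because no acceptance limit is stated for it here.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def calibration_acceptable(conc, response, min_r=0.995, min_standards=5):
    """Require a blank (conc == 0), enough non-zero standards, and r >= min_r."""
    has_blank = any(c == 0 for c in conc)
    n_standards = sum(1 for c in conc if c > 0)
    return has_blank and n_standards >= min_standards and pearson_r(conc, response) >= min_r


def within_control_limits(measured, expected, limit_pct=10.0):
    """ICV/CCV check: measured value within +/- limit_pct of expected."""
    return abs(measured - expected) / expected * 100.0 <= limit_pct


def ccv_due(hours_since_last_ccv, interval_hours=2.0):
    """True when the next continuing calibration verification is due."""
    return hours_since_last_ccv >= interval_hours


def matrix_spike_recovery(spiked_result, unspiked_result, spike_added):
    """Percent recovery of the spike, corrected for the native concentration."""
    return 100.0 * (spiked_result - unspiked_result) / spike_added


# Hypothetical calibration: blank plus five standards (ug/L) and instrument counts
conc = [0, 1, 5, 10, 50, 100]
counts = [12, 1020, 4950, 10100, 49800, 100300]
print(calibration_acceptable(conc, counts), within_control_limits(10.8, 10.0))
```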

Experimental Protocols for Key Purity Assessment Methods

Quantitative NMR (qNMR) Protocol for Purity Determination

Principle: qNMR is based on the direct proportionality between NMR signal intensity and the number of resonant nuclei, enabling precise quantification without compound-specific calibration [28].

Materials and Reagents:

- High-purity deuterated solvent

- Certified reference standard of known purity (e.g., SRM 350b Benzoic acid)

- Analytical balance with calibration traceable to national standards

- High-field NMR spectrometer with temperature control

Procedure:

- Sample Preparation: Precisely weigh analyte and reference standard using buoyancy-corrected masses

- Solution Preparation: Dissolve in deuterated solvent to ensure complete dissolution and homogeneity

- NMR Acquisition Parameters:

  - Pulse angle: 90° for quantitative conditions

  - Relaxation delay: ≥5 × T1 of the slowest-relaxing nucleus

  - Number of transients: Sufficient to achieve S/N >250 for quantitative precision

  - Temperature control: ±0.1°C

- Data Processing:

  - Apply an appropriate window function that does not introduce excessive line broadening

  - Apply phase correction carefully and ensure the baseline is free of distortion

  - Integrate signals with consistent limits

- Calculation: Apply measurement equation to determine purity [28]
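As an illustration of the calculation step, the sketch below assumes the widely used internal-standard form of the qNMR equation, in which analyte purity is the product of the integral ratio, the inverse ratio of contributing nuclei, the molar mass ratio, the inverse mass ratio, and the purity of the standard. The function and parameter names are hypothetical, the numbers in the example are invented for demonstration, and the exact form used in [28] may differ.

```python
def qnmr_purity(integral_analyte, integral_std,
                nuclei_analyte, nuclei_std,
                molar_mass_analyte, molar_mass_std,
                mass_analyte, mass_std,
                purity_std):
    """Mass purity of the analyte as a fraction (same scale as purity_std).

    Assumed form: P_a = (I_a/I_s) * (N_s/N_a) * (M_a/M_s) * (m_s/m_a) * P_s
    """
    return ((integral_analyte / integral_std)
            * (nuclei_std / nuclei_analyte)
            * (molar_mass_analyte / molar_mass_std)
            * (mass_std / mass_analyte)
            * purity_std)


def min_relaxation_delay(t1_values_s, factor=5.0):
    """Relaxation delay of at least factor x the longest T1 (seconds)."""
    return factor * max(t1_values_s)


# Hypothetical example: all values below are invented for illustration
purity = qnmr_purity(
    integral_analyte=1.960, integral_std=2.000,
    nuclei_analyte=2, nuclei_std=2,
    molar_mass_analyte=180.16, molar_mass_std=122.12,
    mass_analyte=15.00, mass_std=10.00,   # mg, buoyancy-corrected
    purity_std=0.9999,
)
print(f"purity = {purity:.4f}, delay >= {min_relaxation_delay([1.2, 3.0, 0.8])} s")
```

Because every term enters as a ratio, units cancel as long as the analyte and standard quantities are expressed in the same units.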

Validation Parameters:

- Specificity: Resolution of analyte and reference standard signals

- Linearity: R ≥ 0.999 across concentration range [28]

- Precision: %RSD < 1% for replicate preparations

- Accuracy: Comparison with certified reference materials

HPLC Protocol with UV Detection for Purity Assessment

Principle: Separation based on differential partitioning between stationary and mobile phases with UV detection for quantification [27].

Materials and Reagents:

- HPLC grade mobile phase components

- Reference standard of known purity

- Appropriate HPLC column (C18, phenyl, etc., depending on application)

- HPLC system with UV/Vis detector

Procedure:

- Mobile Phase Preparation: Precisely prepare and filter mobile phase through 0.45 μm membrane

- System Equilibration: Equilibrate until stable baseline achieved

- Sample Analysis: Inject appropriate volume, monitor multiple wavelengths if necessary

- Data Analysis: Integrate peaks, calculate purity based on area percent
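The area-percent calculation in the final step can be sketched as follows. This is a minimal illustration with a hypothetical function name and invented peak areas. It assumes all detected components have equal detector response, which is the usual simplification behind area-percent purity and should be confirmed or corrected with response factors in a real method.

```python
def area_percent_purity(peak_areas, main_peak):
    """Purity of the main peak as a percentage of total integrated area.

    peak_areas: mapping of peak label -> integrated area (same units).
    Assumes equal detector response for all components.
    """
    total = sum(peak_areas.values())
    if total <= 0:
        raise ValueError("total peak area must be positive")
    return 100.0 * peak_areas[main_peak] / total


# Hypothetical chromatogram: one main peak and two impurity peaks
areas = {"main": 990.0, "impurity_1": 6.0, "impurity_2": 4.0}
print(f"{area_percent_purity(areas, 'main'):.2f} area %")
```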

Validation Parameters:

- Specificity: Resolution from known impurities

- Linearity: R² > 0.995 across working range

- Precision: %RSD < 2% for replicate injections

- Accuracy: Spike recovery 98-102%

Essential Research Reagent Solutions for Purity Assessment

Table 3: Essential Research Reagents for High-Purity Analysis

| Reagent/Material | Function | Quality Requirements | Application Notes |
|---|---|---|---|
| Certified Reference Materials (CRMs) | Calibration and method validation | Certified purity with uncertainty statement [25] | Traceable to national standards, use for definitive purity assignment |
| Deuterated NMR Solvents | qNMR analysis | High isotopic purity, minimal water content | Essential for quantitative NMR experiments [28] |
| HPLC Grade Solvents | Mobile phase preparation | Low UV cutoff, minimal particulate matter | Filter and degas before use [27] |
| Internal Standards | Quantitative analysis | High purity, chemically stable, non-reactive | Should elute separately from analyte in chromatography [28] |
| Mass Spectrometry Reference Standards | Mass calibration and system suitability | Instrument-specific certified materials | Required for accurate mass measurement |

Comparison of Purity Assessment Approaches

Orthogonality in Purity Assessment

A method used for purity assessment should be mechanistically different from the method used for the final purification step [26]. This analytical independence (orthogonality) is crucial for comprehensive purity evaluation. While chromatography is excellent for separating and quantifying related substances, it may miss structurally similar impurities that are not resolved from the main peak, as well as compounds that do not absorb UV light. qNMR provides nearly universal detection for organic compounds but may have limitations for compounds with low H-to-C ratios [26].

Relative vs. Absolute Purity Determination

Quantitative analytical methods can be relative (100% methods) or absolute methods, yielding relative and absolute purity assignments, respectively [26]. The choice between relative and absolute methods should be congruent with the subsequent use of the material. For quantitative experiments such as determination of biological activity or chemical content, absolute purity determination is most appropriate [26].

Table 4: Comparison of Relative vs. Absolute Purity Methods

| Characteristic | Relative Methods (e.g., HPLC-UV) | Absolute Methods (e.g., qNMR) |
|---|---|---|
| Basis of Quantification | Relative response compared to main peak | Direct ratio measurement to certified standard |
| Uncertainty Sources | Relative response factors, detector linearity | Mass measurements, integration accuracy |
| Impurities Detected | Only those with detector response | Virtually all proton-containing impurities |
| Traceability | Indirect, requires certified standards | Direct, through mass and molar mass |
| Applications | Routine quality control, stability testing | Definitive purity assignment, value transfer |
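The practical consequence of the "Impurities Detected" row can be illustrated with a short calculation. The sketch below is a simplified illustration rather than a prescribed procedure: it assumes equal response factors for the chromatographic peaks and treats everything the detector cannot see (for example water or inorganic salts) as a single mass fraction. The function name and the numbers are hypothetical.

```python
def absolute_purity_estimate(area_percent, undetected_mass_pct):
    """Scale a relative (100% method) purity by the detector-visible mass fraction.

    area_percent: purity from a relative method such as HPLC-UV (%).
    undetected_mass_pct: mass % of material invisible to that detector.
    """
    visible_fraction = 1.0 - undetected_mass_pct / 100.0
    return area_percent * visible_fraction


# Hypothetical material: 99.0 area % by HPLC-UV, but 2.0% water by mass
print(f"{absolute_purity_estimate(99.0, 2.0):.2f} % absolute purity (estimate)")
```

In this invented case the relative method reports 99.0% while the mass-based estimate is about 97%, which is the kind of gap an absolute method is intended to reveal.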

Establishing a robust foundation with high-purity reference materials and comprehensive QC protocols is essential for generating reliable analytical data in inorganic compounds research and drug development. The accuracy of quantitative analysis fundamentally depends on well-characterized reference materials with known purity and uncertainty [25]. Implementing orthogonal analytical techniques, with qNMR emerging as a powerful primary method [26], provides the comprehensive approach needed for definitive purity assessment.

A systematic framework incorporating proper equipment qualification (IQ/OQ/PQ) [30], rigorous QC protocols [31], and appropriate reference materials forms the backbone of analytical method validation. This foundation ensures data integrity, facilitates regulatory compliance, and ultimately supports the development of safe and effective pharmaceutical products. As the field advances, the continued refinement of purity assessment methods and uncertainty quantification will further enhance the reliability of analytical measurements in pharmaceutical research and development.

Advanced Techniques and Real-World Applications in Inorganic Analysis

Elemental analysis is a critical component of pharmaceutical development, environmental monitoring, and industrial quality control. The accurate determination of inorganic elements and ions ensures product safety, regulatory compliance, and understanding of biological systems. Within the framework of analytical method validation for inorganic compounds research, selecting the appropriate technique is paramount for generating reliable, accurate data that meets stringent regulatory standards.

This comparison guide objectively evaluates four prominent analytical techniques: Inductively Coupled Plasma Mass Spectrometry (ICP-MS), Inductively Coupled Plasma Optical Emission Spectroscopy (ICP-OES), Ion Chromatography (IC), and Atomic Absorption (AA) Spectroscopy. Each technique offers distinct advantages and limitations in sensitivity, detection limits, application scope, and operational requirements. By examining experimental data and validation protocols, researchers and drug development professionals can make informed decisions when designing analytical strategies for specific elemental analysis challenges.

Fundamental Principles